How to Get Product Data from Arrow.com

Senior Web Scraping Engineer

Arrow.com is a leading global platform specializing in electronic components, technology solutions, and services. It caters to engineers, manufacturers, and businesses, providing a comprehensive catalog of semiconductors, connectors, and embedded solutions. With its vast inventory and technical resources, Arrow.com serves as a vital hub for sourcing, prototyping, and scaling technological projects.

Why is it important to scrape product data from Arrow?

Arrow's data holds real value for many stakeholders in the electronics and technology industries. Here is why:

- Comprehensive Product Information: Arrow features detailed specifications for millions of components, helping engineers select the right parts for their projects.

- Price Monitoring: Access to pricing data enables businesses to compare costs and make informed purchasing decisions.

- Stock Availability Insights: Real-time updates on inventory levels help prevent supply chain disruptions.

- Market Trends and Analysis: Arrow data can reveal trends in demand for specific technologies, aiding market research and forecasting.

- Vendor and Supplier Evaluation: Businesses can evaluate suppliers based on product variety, price, and availability.

How to scrape Arrow using Puppeteer

- Step 1: Install Puppeteer via `npm` to set up your project:

```bash
npm install puppeteer
```

- Step 2: Initialize Puppeteer. Create a new JavaScript file (e.g., `scrapeArrow.js`) and include the following code to launch Puppeteer:

```javascript
const puppeteer = require('puppeteer');

(async () => {
  // Launch a headless Chromium instance and open a new tab
  const browser = await puppeteer.launch({ headless: true });
  const page = await browser.newPage();

  // Set a user agent to mimic a real browser
  await page.setUserAgent(
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36'
  );

  console.log('Browser launched successfully');

  // The code from Steps 3 to 6 goes here, inside this async function
})();
```

- Step 3: Navigate to Arrow.com. Add the following code to open Arrow’s search page with a specific query:

```javascript
// Runs inside the async function from Step 2
const searchQuery = 'resistor'; // Replace with the term you want to search
const url = `https://www.arrow.com/en/products/search?q=${encodeURIComponent(searchQuery)}`;

// 'networkidle2' treats navigation as finished once there are no more than
// 2 network connections for at least 500 ms
await page.goto(url, { waitUntil: 'networkidle2' });
console.log(`Navigated to: ${url}`);
```

- Step 4: Wait for Page Elements to Load. Make sure the product elements have rendered before extracting anything:

```javascript
// '.product-card' is a placeholder: replace it with the correct CSS selector
// from Arrow's search results page (inspect the page in your browser's dev tools)
await page.waitForSelector('.product-card');
console.log('Product elements loaded successfully');
```

- Step 5: Extract Product Data. Use Puppeteer’s `evaluate` method to scrape product names, prices, and availability:

```javascript
// This callback runs in the browser context; all selectors are placeholders
// to adapt to Arrow's current markup
const products = await page.evaluate(() => {
  return Array.from(document.querySelectorAll('.product-card')).map(item => {
    // Optional chaining falls back to 'N/A' when an element is missing
    const name = item.querySelector('.product-name')?.innerText.trim() || 'N/A';
    const price = item.querySelector('.product-price')?.innerText.trim() || 'N/A';
    const availability = item.querySelector('.availability-status')?.innerText.trim() || 'N/A';
    return { name, price, availability };
  });
});

console.log(products);
```

- Step 6: Close the Browser. After scraping the data, close the browser to release resources:

```javascript
await browser.close();
console.log('Browser closed successfully');
```

- Final Script:

```javascript
const puppeteer = require('puppeteer');

(async () => {
  const browser = await puppeteer.launch({ headless: true });

  try {
    const page = await browser.newPage();
    // Mimic a regular desktop Chrome browser
    await page.setUserAgent(
      'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36'
    );

    const searchQuery = 'resistor';
    const url = `https://www.arrow.com/en/products/search?q=${encodeURIComponent(searchQuery)}`;
    await page.goto(url, { waitUntil: 'networkidle2' });
    console.log(`Navigated to: ${url}`);

    // Placeholder selectors: adjust them to Arrow's current markup
    await page.waitForSelector('.product-card');

    const products = await page.evaluate(() => {
      return Array.from(document.querySelectorAll('.product-card')).map(item => {
        const name = item.querySelector('.product-name')?.innerText.trim() || 'N/A';
        const price = item.querySelector('.product-price')?.innerText.trim() || 'N/A';
        const availability = item.querySelector('.availability-status')?.innerText.trim() || 'N/A';
        return { name, price, availability };
      });
    });

    console.log(products);
  } catch (err) {
    console.error('Scraping failed:', err);
    process.exitCode = 1;
  } finally {
    // Always release the browser, even if a step above throws
    await browser.close();
  }
})();
```

A Puppeteer scraper alone is not enough! You will also need to get past anti-bot measures such as browser fingerprinting, CAPTCHAs, and rate limiting. In addition, many websites now load content dynamically, which makes a simple scraper harder to keep working.
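Of these obstacles, rate limiting is the one a hand-rolled scraper can soften without extra infrastructure. One common approach is to retry failed requests with exponential backoff and random jitter, rather than immediately repeating them. The sketch below is a generic helper and is not part of Puppeteer. `withRetry` and its option names are hypothetical, and the default delays are assumptions you should tune for your own workload. It only spaces out attempts and does nothing against fingerprinting or CAPTCHAs.

```javascript
// Hypothetical helper: retries an async task with exponential backoff and "full jitter".
// It only spaces out repeated attempts; it does not bypass fingerprinting or CAPTCHAs.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function withRetry(task, { retries = 3, baseDelayMs = 1000, maxDelayMs = 30000 } = {}) {
  let lastError;
  // One initial attempt plus up to `retries` retries
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      // Pass the attempt number so the task can log or vary its behavior
      return await task(attempt);
    } catch (err) {
      lastError = err;
      if (attempt === retries) break;
      // Backoff window doubles each attempt (1 s, 2 s, 4 s, ... with the defaults),
      // capped at maxDelayMs
      const backoff = Math.min(maxDelayMs, baseDelayMs * 2 ** attempt);
      // Full jitter: wait a random time between 0 and the backoff window
      const delay = Math.random() * backoff;
      console.warn(`Attempt ${attempt + 1} failed (${err.message}); retrying in ${Math.round(delay)} ms`);
      await sleep(delay);
    }
  }
  // All attempts failed: surface the last error to the caller
  throw lastError;
}
```

For example, inside the scraper you could wrap navigation as `await withRetry(() => page.goto(url, { waitUntil: 'networkidle2' }))`. The random jitter keeps several scraper instances from retrying in lockstep against the same site.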

Is there any more powerful and effective way to scrape Arrow product details?

Yes! A scraping API can give you a more stable scraping experience!

Scrapeless Arrow API - the Best Arrow Product Detail Scraper

Scrapeless API is an innovative solution designed to simplify the process of extracting data from websites. Our API navigates complex web environments and effectively handles dynamic content and JavaScript rendering.

Using our advanced Arrow Scraping API, you can access the product data you need without writing or maintaining complex scraping scripts. It only takes a few simple steps to easily access and scrape the information you need.
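Under the hood, each call is a single JSON POST request. The request body carries an `actor` name and an `input` object holding the Arrow URL, as in the Python sample later in this guide. Below is a minimal Node.js sketch of the same request for readers who prefer NodeJS.

The sketch rests on a few assumptions:

- It requires Node 18 or newer, for the built-in `fetch`.
- It sends the API token in an `x-api-token` header. This header name is an assumption, so copy the exact header from the sample code in your Scrapeless dashboard.
- `buildArrowRequest` and `scrapeArrow` are hypothetical helper names.

```javascript
// Endpoint and actor name are taken from the Python sample in this guide.
const SCRAPELESS_ENDPOINT = 'https://api.scrapeless.com/api/v1/scraper/request';

// Hypothetical helper: builds fetch() options for scraping one Arrow page.
// The 'x-api-token' header name is an assumption; check your dashboard's sample code.
function buildArrowRequest(arrowUrl, apiToken) {
  return {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'x-api-token': apiToken,
    },
    body: JSON.stringify({
      actor: 'scraper.arrow',
      input: { url: arrowUrl },
    }),
  };
}

// Hypothetical helper: sends the request with Node 18+'s global fetch
// and returns the parsed JSON response body.
async function scrapeArrow(arrowUrl, apiToken) {
  const response = await fetch(SCRAPELESS_ENDPOINT, buildArrowRequest(arrowUrl, apiToken));
  if (!response.ok) {
    throw new Error(`Scrapeless request failed: HTTP ${response.status}`);
  }
  return response.json();
}
```

Here is a usage example. `await scrapeArrow('https://www.arrow.com/en/products/search?page=3&cat=Capacitors', process.env.SCRAPELESS_API_TOKEN)` should resolve to the same JSON body that the Python sample prints. `SCRAPELESS_API_TOKEN` is a hypothetical environment variable name. Keeping request construction in its own function makes it easy to inspect or log the payload without sending it.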

How do you use the Arrow API? Follow these steps:

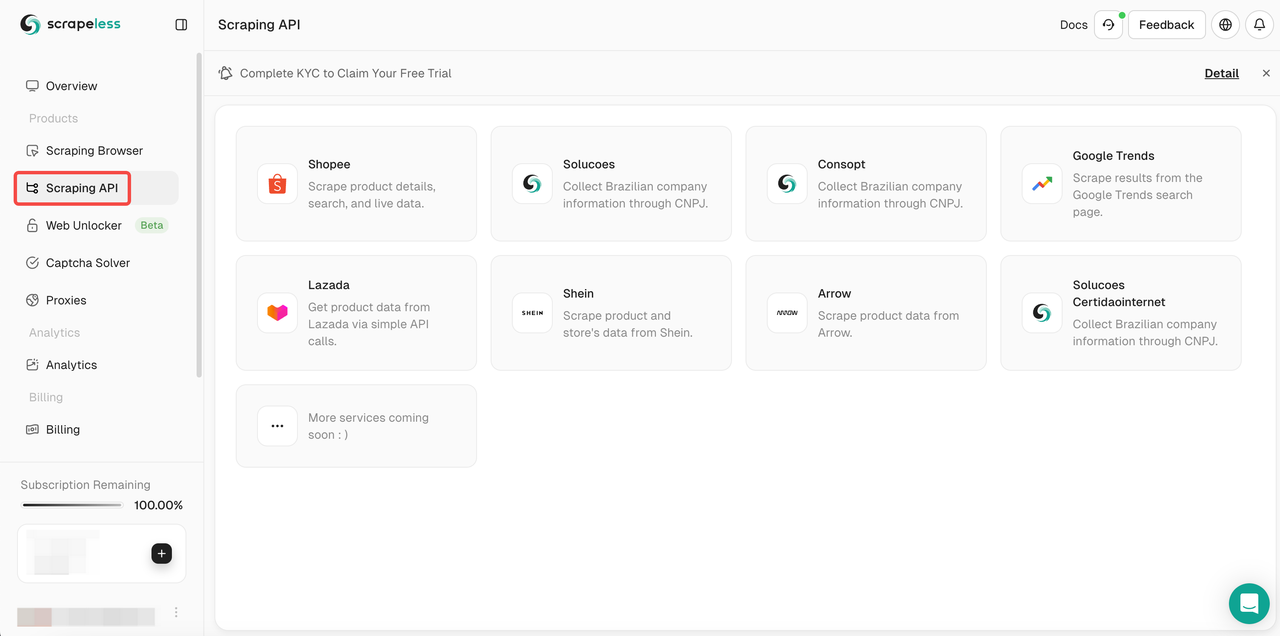

- Step 1. Log in to Scrapeless

- Step 2. Click "Scraping API".

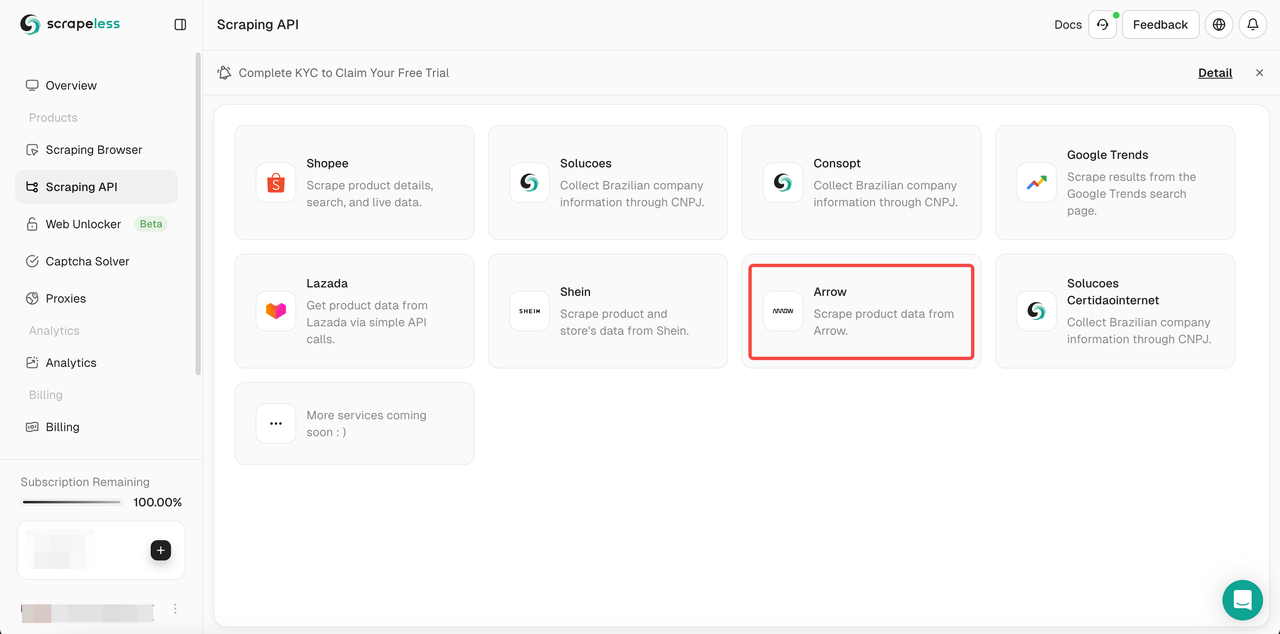

- Step 3. Click Arrow API and enter the edit page.

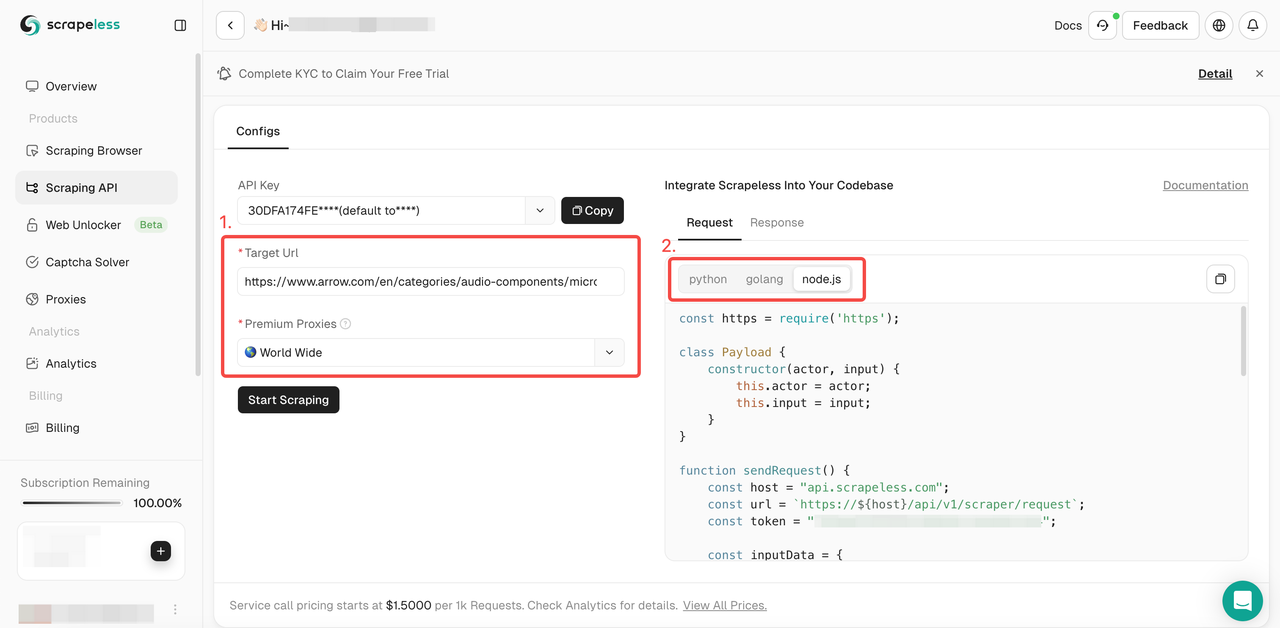

- Step 4. Paste the URL of the product page you need to crawl and configure the proxy target region. Here we use Arrow's "microphones" product page as an example and set the proxy to India. Then select the language for the sample code from Python, Golang, and NodeJS. We will use Python as an example.

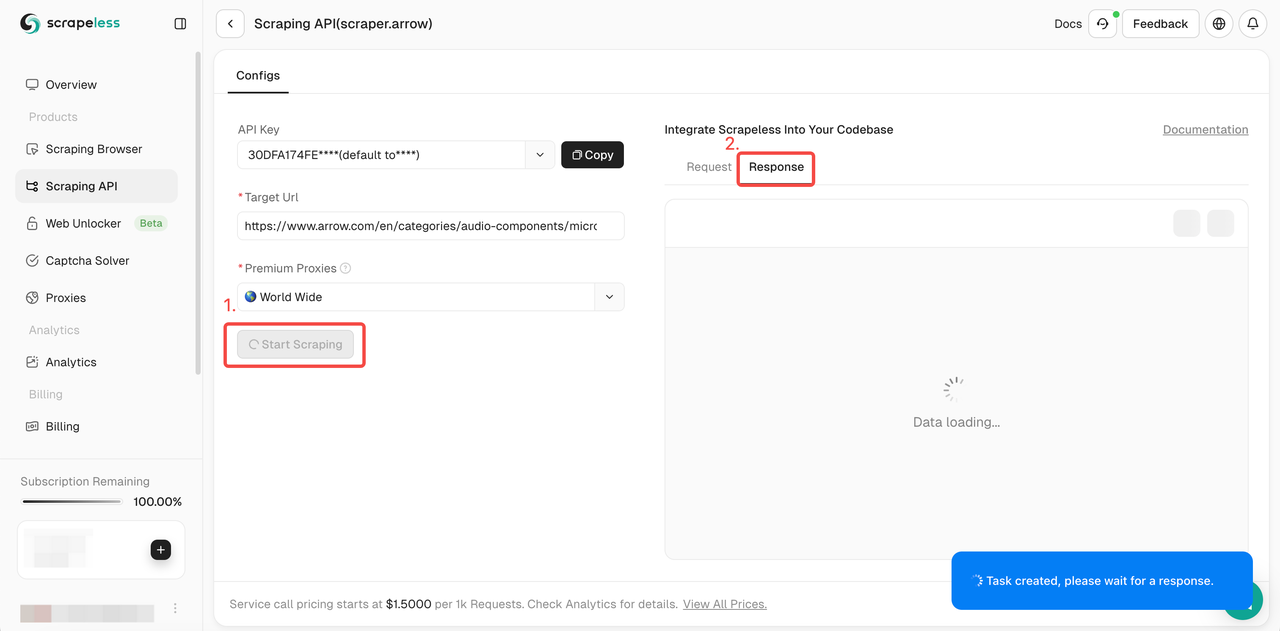

- Step 5. After configuration, click "Start Scraping", and you will get the response on the right:

- Scraping result:

```json
{
  "data": "H4sIAAAAAAAA/6rm5VJQUEpJLElUslLIK83J0QELpBYV5RdBRXi5agEBAAD//0dMMnUmAAAA"
}
```

Or you can deploy our sample code in your own project:

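```python
import requests

# Scrapeless scraping API endpoint
url = "https://api.scrapeless.com/api/v1/scraper/request"

# "actor" selects the Arrow scraper; "input.url" is the Arrow page to scrape
payload = {
    "actor": "scraper.arrow",
    "input": {
        "url": "https://www.arrow.com/en/products/search?page=3&cat=Capacitors"
    }
}

headers = {
    # Assumption: the API token is sent in an "x-api-token" header;
    # copy the exact header from the sample code in your Scrapeless dashboard
    "x-api-token": "YOUR_API_TOKEN",
}

# json= serializes the payload and sets Content-Type: application/json
response = requests.post(url, headers=headers, json=payload, timeout=60)
response.raise_for_status()
print(response.text)
```

Why Use a Scraping API for Arrow?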

Arrow does not offer a public API that covers all of its data. The Scrapeless Arrow scraping API, however, can provide you with:

- Automation: Save time and reduce manual labor.

- Comprehensive Data: Obtain data that might not be available through official means.

- Customization: Tailor the extracted data to meet your specific needs.

- Integration: Use the data seamlessly in analytics platforms, CRMs, or inventory management tools.
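To illustrate the integration point, the sketch below turns an API response into CSV text. Spreadsheets, BI tools, and CRMs can import that format directly.

The sketch makes two assumptions, both based on the sample result shown earlier:

- The `data` field is a gzip-compressed, base64-encoded JSON string. The prefix `H4sI` is how the gzip magic bytes `1f 8b 08` appear in base64.
- The decoded JSON holds product objects with `name`, `price`, and `availability` fields, like those produced by the Puppeteer script.

The real schema of the decoded payload may differ. Treat the field names, and the hypothetical helpers `decodeScrapelessData` and `toCsv`, as placeholders.

```javascript
const zlib = require('zlib');

// Hypothetical helper: reverses the assumed encoding (base64 -> gunzip -> JSON).
function decodeScrapelessData(base64Data) {
  const compressed = Buffer.from(base64Data, 'base64');
  const text = zlib.gunzipSync(compressed).toString('utf8');
  return JSON.parse(text);
}

// Quote a value per RFC 4180: wrap it in double quotes when it contains a comma,
// a double quote, or a line break, and double any embedded quotes.
function csvField(value) {
  const text = value === undefined || value === null ? '' : String(value);
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

// Hypothetical helper: converts an array of product objects into CSV text
// with a header row; missing fields become empty cells.
function toCsv(records, columns = ['name', 'price', 'availability']) {
  const header = columns.map(csvField).join(',');
  const rows = records.map((record) => columns.map((col) => csvField(record[col])).join(','));
  // RFC 4180 uses CRLF line breaks between records
  return [header, ...rows].join('\r\n');
}
```

The same `toCsv` helper works on the `products` array from the Puppeteer script. For example, you could write it to disk with `require('fs').writeFileSync('arrow-products.csv', toCsv(products))`. The file name is only an example.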

Ending Thoughts

Product details on Arrow.com are valuable for price monitoring, inventory management, market research, and lead generation. A powerful and comprehensive tool can reliably get past website detection and IP blocking to collect them.

With real browser fingerprints, IP rotation, and advanced proxies, the Scrapeless Arrow scraping API is your best choice for scraping Arrow and extracting product data.

Sign in and get a special free trial now!


At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.