HTTP Proxy Explained: How Does It Work?

Expert Network Defense Engineer

Explore the function, types, and benefits of HTTP proxies, and learn how they are essential for web scraping, security, and performance optimization.

An HTTP proxy is a proxy server specifically designed to handle requests and responses that use the Hypertext Transfer Protocol (HTTP) and its secure variant, HTTPS. It acts as a crucial intermediary between a client device (like your web browser or a web scraping script) and a web server, facilitating communication, enhancing security, and improving performance.

Understanding how HTTP proxies work is fundamental to modern web operations, from enterprise network security to large-scale data acquisition.

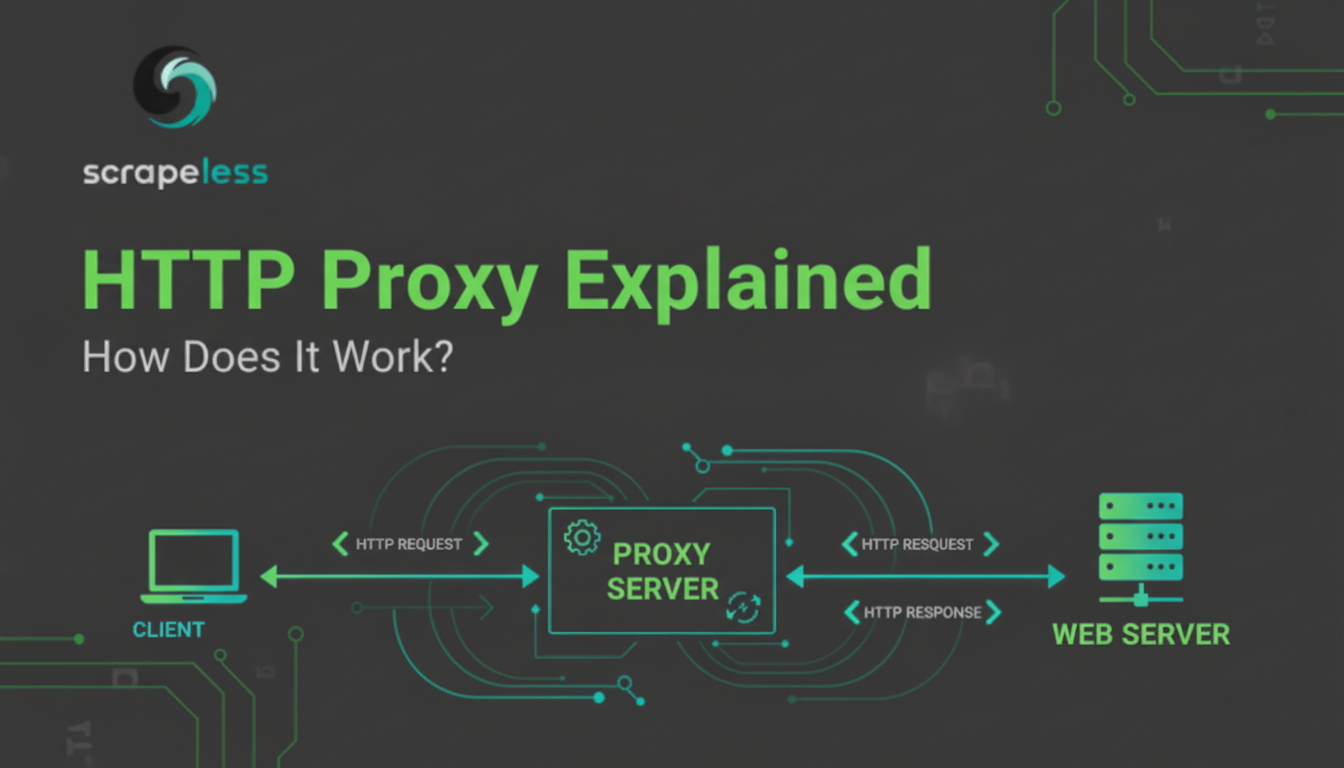

How Does an HTTP Proxy Work?

When a client is configured to use an HTTP proxy, the communication flow changes:

- Client Request: The client sends a request (e.g., a GET request for a webpage) to the proxy server, not the final web server.

- Proxy Interception: The HTTP proxy intercepts the request. It can then inspect, modify, or filter the request based on its configuration.

- Proxy Forwarding: The proxy forwards the request to the target web server on behalf of the client. Crucially, the target server sees the proxy's IP address, not the client's original IP.

- Response Handling: The web server sends the response back to the proxy.

- Client Delivery: The proxy receives the response and forwards it back to the client.

This process allows the proxy to serve as a critical checkpoint for content filtering, performance optimization (via caching), and maintaining anonymity and privacy [1].
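
To make the first step of that flow concrete, the sketch below builds the opening lines of a plain-HTTP GET request as a client would send them directly to a web server versus to a proxy, plus the CONNECT request used to tunnel HTTPS through a proxy. The function names `build_request_head` and `build_connect_head` are illustrative assumptions, not part of any library, and real clients send additional headers.

```python
from urllib.parse import urlsplit


def build_request_head(url: str, via_proxy: bool) -> str:
    """Return the request line and Host header for a plain-HTTP GET."""
    parts = urlsplit(url)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    # To a proxy, the client sends the full URL (absolute-form) so the proxy
    # knows which server to contact. To the origin server, it sends only the path.
    target = url if via_proxy else path
    return f"GET {target} HTTP/1.1\r\nHost: {parts.netloc}\r\n\r\n"


def build_connect_head(host: str, port: int = 443) -> str:
    """Return the CONNECT request that asks a proxy to open a tunnel for HTTPS."""
    return f"CONNECT {host}:{port} HTTP/1.1\r\nHost: {host}:{port}\r\n\r\n"


if __name__ == "__main__":
    print(build_request_head("http://example.com/index.html", via_proxy=False))
    print(build_request_head("http://example.com/index.html", via_proxy=True))
    print(build_connect_head("example.com"))
```

With HTTPS, the proxy typically only sees the CONNECT line and then relays encrypted bytes, so it cannot inspect or cache the content unless it is set up to terminate TLS itself.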

Types of HTTP Proxies

HTTP proxies can be categorized based on their function and deployment:

| Proxy Type | Function | Primary Use Case |
|---|---|---|
| Forward Proxy | Sits between the client and the public internet, inspecting and routing outbound traffic. | Corporate networks for security and access control. |
| Reverse Proxy | Sits in front of one or more web servers, intercepting inbound client requests. | Load balancing, security (WAF), and SSL termination for web applications. |
| Transparent Proxy | Intercepts traffic without requiring client-side configuration; users are often unaware of its existence. | Network-level content filtering and monitoring. |
| High Anonymity Proxy | Conceals the user's IP address and prevents the target server from detecting the use of a proxy. | Web scraping and bypassing geo-restrictions. |
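
One rough way to see what "high anonymity" means in practice is to look at the headers the target server receives. The sketch below is a heuristic under stated assumptions: it checks only the `X-Forwarded-For` and `Via` headers, and the function name and the three labels it returns are hypothetical, not a standard classification. Real servers may use other signals as well.

```python
from typing import Dict


def classify_anonymity(headers_seen_by_server: Dict[str, str], client_ip: str) -> str:
    """Label a proxy by what its forwarded headers reveal to the target server."""
    headers = {name.lower(): value for name, value in headers_seen_by_server.items()}
    forwarded_ips = [
        ip.strip()
        for ip in headers.get("x-forwarded-for", "").split(",")
        if ip.strip()
    ]
    if client_ip in forwarded_ips:
        # The proxy passed the client's real address along.
        return "reveals-client-ip"
    if forwarded_ips or "via" in headers:
        # The client IP is hidden, but the headers show a proxy was involved.
        return "reveals-proxy"
    # Neither header is present, so these headers give no hint of a proxy.
    return "high-anonymity"


if __name__ == "__main__":
    print(classify_anonymity({"X-Forwarded-For": "198.51.100.7"}, "198.51.100.7"))
    print(classify_anonymity({"Via": "1.1 proxy.example"}, "198.51.100.7"))
    print(classify_anonymity({"User-Agent": "demo"}, "198.51.100.7"))
```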

Benefits of Using an HTTP Proxy

The widespread adoption of HTTP proxies is driven by several key advantages:

1. Enhanced Security

HTTP proxies act as a security layer, inspecting and filtering traffic to block malicious content, malware, or phishing attempts. They can enforce security policies by restricting access to certain websites, and a proxy that accepts client connections over TLS also encrypts the traffic between the client and the proxy, adding an extra layer of security for sensitive data transmission [2].
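
As a minimal sketch of the access-restriction idea, the function below decides whether a request should be forwarded by checking its hostname against a blocklist. The name `is_request_allowed` and the `.example` domains are assumptions for illustration. Production filters usually combine this with categories, reputation feeds, and content inspection.

```python
from typing import Set
from urllib.parse import urlsplit


def is_request_allowed(url: str, blocked_domains: Set[str]) -> bool:
    """Return False if the URL's host is a blocked domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    for domain in blocked_domains:
        # Match the domain itself and any subdomain, but not lookalike names
        # such as "notmalware.example".
        if host == domain or host.endswith("." + domain):
            return False
    return True


if __name__ == "__main__":
    blocked = {"malware.example"}
    for candidate in ("http://malware.example/payload", "http://example.com/"):
        print(candidate, is_request_allowed(candidate, blocked))
```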

2. Improved Performance (Caching)

Proxies can significantly improve performance by caching frequently accessed web content. When a user requests a resource, the proxy checks its cache first. If the content is available and fresh, it is served directly from the cache, reducing load times and minimizing the need to contact the origin server. This caching model is a core component of Content Delivery Networks (CDNs) [3].
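
The check-the-cache-first logic can be sketched as follows. This is a simplified illustration that treats an entry as fresh for a fixed number of seconds. Real HTTP caches derive freshness from response headers such as `Cache-Control`, and the names `ProxyCache` and `fetch_through_cache` are hypothetical.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class ProxyCache:
    """Stores response bodies by URL and treats them as fresh for max_age_seconds."""

    def __init__(self, max_age_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._max_age = max_age_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, bytes]] = {}

    def get(self, url: str) -> Optional[bytes]:
        entry = self._entries.get(url)
        if entry is None:
            return None
        stored_at, body = entry
        if self._clock() - stored_at > self._max_age:
            # The entry is stale: drop it so the caller refetches from the origin.
            del self._entries[url]
            return None
        return body

    def put(self, url: str, body: bytes) -> None:
        self._entries[url] = (self._clock(), body)


def fetch_through_cache(
    url: str, cache: ProxyCache, fetch_from_origin: Callable[[str], bytes]
) -> bytes:
    """Serve from the cache when fresh, otherwise contact the origin and store the result."""
    cached = cache.get(url)
    if cached is not None:
        return cached
    body = fetch_from_origin(url)
    cache.put(url, body)
    return body
```

Passing the clock in as a parameter is a design choice that makes expiry easy to exercise without waiting for real time to pass.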

3. Anonymity and Privacy

For web scraping and privacy-conscious browsing, an anonymous HTTP proxy is invaluable. Because the website sees the proxy's IP address rather than the user's real one, it cannot tie the request to the user's network location by IP alone, enabling users to access content more anonymously and circumvent geo-restrictions.

4. Load Balancing and Scalability

In distributed systems, HTTP proxies are used for load balancing, distributing incoming requests across multiple backend servers based on predefined algorithms. This improves resource utilization, reduces response times, and ensures high availability and fault tolerance for web applications.
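
Round-robin is one of the simplest such algorithms. The sketch below, with the hypothetical class name `RoundRobinBalancer` and placeholder backend names, cycles through backends in order and skips any that have been marked as down, which is a minimal form of the fault tolerance described above. Real reverse proxies add health checks, weights, and connection tracking.

```python
from itertools import cycle
from typing import Iterable, Set


class RoundRobinBalancer:
    """Hands out backends in rotation, skipping those marked as unavailable."""

    def __init__(self, backends: Iterable[str]):
        self._backends = list(backends)
        if not self._backends:
            raise ValueError("at least one backend is required")
        self._rotation = cycle(self._backends)
        self._down: Set[str] = set()

    def mark_down(self, backend: str) -> None:
        self._down.add(backend)

    def mark_up(self, backend: str) -> None:
        self._down.discard(backend)

    def next_backend(self) -> str:
        # Try each backend at most once per call so we never loop forever.
        for _ in range(len(self._backends)):
            candidate = next(self._rotation)
            if candidate not in self._down:
                return candidate
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["app-1:8000", "app-2:8000", "app-3:8000"])
    print([balancer.next_backend() for _ in range(4)])
```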

HTTP Proxies in Web Scraping

For web scraping, the High Anonymity Forward Proxy is the most critical type. When you scrape at scale, a website's anti-bot measures can detect and block a high volume of requests coming from the same IP address.

By leveraging a rotating pool of high-quality HTTP proxies, a scraping solution can:

- Avoid IP Bans: Each request can be routed through a different IP address, making it appear as if the traffic is coming from numerous genuine users.

- Geo-Targeting: Proxies can be selected based on their geographic location, allowing scrapers to collect localized data (e.g., pricing, search results) from specific regions.
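
Both ideas can be sketched with a small pool that hands out a different proxy on each call and can optionally restrict the choice to one country. Everything here is an assumption for illustration: the `Proxy` and `RotatingProxyPool` names, the country codes, and the proxy addresses, which use a reserved documentation IP range. It does not represent any provider's API. The helper returns a mapping in the `{"http": ..., "https": ...}` shape that the `requests` library accepts through its `proxies` argument.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Proxy:
    url: str
    country: str


class RotatingProxyPool:
    """Returns proxies in rotation, optionally limited to a single country."""

    def __init__(self, proxies: List[Proxy]):
        self._proxies = list(proxies)
        self._position = 0

    def next_proxy(self, country: Optional[str] = None) -> Proxy:
        candidates = [
            p for p in self._proxies if country is None or p.country == country
        ]
        if not candidates:
            raise LookupError(f"no proxy available for country {country!r}")
        # Advance a shared counter so consecutive calls pick different proxies.
        proxy = candidates[self._position % len(candidates)]
        self._position += 1
        return proxy


def as_requests_proxies(proxy: Proxy) -> Dict[str, str]:
    """Build the mapping passed as requests.get(url, proxies=...)."""
    return {"http": proxy.url, "https": proxy.url}


if __name__ == "__main__":
    pool = RotatingProxyPool([
        Proxy("http://203.0.113.10:8080", "US"),  # hypothetical addresses
        Proxy("http://203.0.113.11:8080", "DE"),
        Proxy("http://203.0.113.12:8080", "US"),
    ])
    print(as_requests_proxies(pool.next_proxy()))
    print(as_requests_proxies(pool.next_proxy(country="DE")))
```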

Recommended Solution: Scrapeless Proxies

For professional web scraping that requires a massive, reliable pool of high-anonymity HTTP/HTTPS proxies, Scrapeless Proxies offers a superior solution.

Scrapeless offers a worldwide proxy network that includes Residential, Static ISP, Datacenter, and IPv6 proxies, with access to over 90 million IPs and success rates of up to 99.98%. It supports a wide range of use cases — from web scraping and market research [4] to price monitoring, SEO tracking, ad verification, and brand protection — making it ideal for both business and professional data workflows.

Scrapeless Proxies: High-Anonymity and Performance

Scrapeless's Residential and Static ISP proxies are particularly well-suited for high-anonymity HTTP/HTTPS requests, offering:

- Automatic proxy rotation

- 99.98% average success rate

- Precise geo-targeting (country/city)

- Support for HTTP/HTTPS/SOCKS5 protocols

Scrapeless Proxies provides global coverage, transparency, and highly stable performance, making it a stronger and more trustworthy choice than other alternatives — especially for business-critical and professional data applications that require reliable universal scraping [5] and product solutions [6] via HTTP/HTTPS.

Conclusion

The HTTP proxy is a versatile and indispensable tool in the modern internet ecosystem. Whether for corporate security, content delivery, or high-volume web scraping, its role as an intermediary is critical. By choosing a high-quality provider like Scrapeless Proxies, you ensure that your HTTP-based operations benefit from the best in anonymity, speed, and reliability.

References

[1] IETF: Hypertext Transfer Protocol (HTTP/1.1): Message Syntax and Routing

[2] Cloudflare: What is a Proxy Server?

[3] Akamai: What is a CDN?

[4] W3C: HTTP/1.1 Method Definitions (GET)

[5] OWASP: Web Application Firewall (WAF)

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.