How to Enhance Crawl4AI with Scrapeless Cloud Browser

Expert Network Defense Engineer

In this tutorial, you’ll learn:

- What Crawl4AI is and what it offers for web scraping

- How to integrate Crawl4AI with the Scrapeless Browser

Let’s get started!

Part 1: What Is Crawl4AI?

Overview

Crawl4AI is an open-source web crawling and scraping tool designed to seamlessly integrate with Large Language Models (LLMs), AI Agents, and data pipelines. It enables high-speed, real-time data extraction while remaining flexible and easy to deploy.

Key features for AI-powered web scraping include:

- Built for LLMs: Generates structured Markdown optimized for Retrieval-Augmented Generation (RAG) and fine-tuning.

- Flexible browser control: Supports session management, proxy usage, and custom hooks.

- Heuristic intelligence: Uses smart algorithms to optimize data parsing.

- Fully open-source: No API key required; deployable via Docker and cloud platforms.

Learn more in the official documentation.

Use Cases

Crawl4AI is ideal for large-scale data extraction tasks such as market research, news aggregation, and e-commerce product collection. It can handle dynamic, JavaScript-heavy websites and serves as a reliable data source for AI agents and automated data pipelines.

Part 2: What Is Scrapeless Browser?

Scrapeless Browser is a cloud-based, serverless browser automation tool. It’s built on a deeply customized Chromium kernel, supported by globally distributed servers and proxy networks. This allows users to seamlessly run and manage numerous headless browser instances, making it easy to build AI applications and AI Agents that interact with the web at scale.

Part 3: Why Combine Scrapeless with Crawl4AI?

Crawl4AI excels at structured web data extraction and supports both LLM-driven parsing and pattern-based scraping. However, running it on a local browser still brings several challenges, especially against advanced anti-bot mechanisms:

- Local browsers being blocked by Cloudflare, AWS WAF, or reCAPTCHA

- Performance bottlenecks during large-scale concurrent crawling, with slow browser startup

- Complex debugging processes that make issue tracking difficult

Scrapeless Cloud Browser addresses these pain points:

- One-click anti-bot bypass: Automatically handles reCAPTCHA, Cloudflare Turnstile/Challenge, AWS WAF, and more. Combined with Crawl4AI’s structured extraction power, it significantly boosts success rates.

- Large-scale concurrency: Launch 50 to 1000+ browser instances per task within seconds, removing local performance limits and maximizing Crawl4AI throughput.

- 40%–80% cost reduction: Compared to similar cloud services, total costs drop to only 20%–60% of theirs. Pay-as-you-go pricing keeps it affordable even for small projects.

- Visual debugging tools: Use Session Replay and Live URL Monitoring to watch Crawl4AI tasks in real time, quickly identify failure causes, and reduce debugging overhead.

- Zero-cost integration: Natively compatible with Playwright (used by Crawl4AI), requiring only one line of code to connect Crawl4AI to the cloud — no code refactoring needed.

- Edge Node Service (ENS): Multiple global nodes deliver 2–3× faster startup and greater stability than other cloud browsers, accelerating Crawl4AI execution.

- Isolated environments & persistent sessions: Each Scrapeless profile runs in its own environment with persistent login and identity isolation, preventing session interference and improving large-scale stability.

- Flexible fingerprint management: Scrapeless can generate random browser fingerprints or use custom configurations, effectively reducing detection risks and improving Crawl4AI’s success rate.
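To make the concurrency point more concrete, here is a minimal sketch of fanning many crawl tasks out over separate cloud sessions while capping how many run at once with `asyncio.Semaphore`. It is stdlib-only: `fake_crawl` is a hypothetical stand-in for opening `AsyncWebCrawler` with a `cdp_url` and calling `arun()`, and `crawl_all` and the `task-N` session names are illustrative choices, not part of Crawl4AI or Scrapeless.

```python
import asyncio
from urllib.parse import urlencode

# Endpoint as used by the examples in this article.
WS_ENDPOINT = "wss://browser.scrapeless.com/api/v2/browser"


async def fake_crawl(cdp_url: str, target: str) -> str:
    """Hypothetical stand-in for AsyncWebCrawler(cdp_url=...) plus crawler.arun(target)."""
    await asyncio.sleep(0.01)  # simulate network latency
    return f"crawled {target}"


async def crawl_all(token: str, targets: list[str], max_sessions: int = 5) -> tuple[list[str], int]:
    """Crawl each target through its own cloud session, at most max_sessions at a time."""
    limit = asyncio.Semaphore(max_sessions)
    active = 0
    peak = 0  # highest number of sessions observed running simultaneously

    async def run_one(index: int, target: str) -> str:
        nonlocal active, peak
        # A distinct sessionName per task makes sessions easier to tell apart later.
        query = urlencode({"token": token, "sessionName": f"task-{index}", "sessionTTL": 1000})
        async with limit:
            active += 1
            peak = max(peak, active)
            try:
                return await fake_crawl(f"{WS_ENDPOINT}?{query}", target)
            finally:
                active -= 1

    # gather() returns results in the same order as the targets.
    results = await asyncio.gather(*(run_one(i, t) for i, t in enumerate(targets)))
    return list(results), peak
```

In real use, `fake_crawl` would be replaced by an actual Crawl4AI call, and `max_sessions` should stay within whatever concurrency your Scrapeless plan allows.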

Part 4: How to Use Scrapeless in Crawl4AI?

Scrapeless exposes its cloud browser through a Chrome DevTools Protocol (CDP) WebSocket URL. Crawl4AI can connect directly to the cloud browser through this URL instead of launching a browser locally.
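Because the token and all session options travel in that URL's query string, it can help to build it in one place. The sketch below is a stdlib-only helper under a few assumptions: the environment variable name `SCRAPELESS_API_TOKEN` and the functions `token_from_env`, `build_cdp_url`, and `redacted` are hypothetical, and only the endpoint and parameter names come from this article. Masking the token before logging matters because the URL carries your credentials.

```python
import os
from urllib.parse import parse_qs, urlencode, urlsplit, urlunsplit

# Endpoint used by every example in this article.
WS_ENDPOINT = "wss://browser.scrapeless.com/api/v2/browser"


def token_from_env(var: str = "SCRAPELESS_API_TOKEN") -> str:
    """Read the API token from an environment variable (the variable name is hypothetical)."""
    token = os.environ.get(var)
    if not token:
        raise RuntimeError(f"set {var} to your Scrapeless API token")
    return token


def build_cdp_url(token: str, **session_params) -> str:
    """Build the CDP WebSocket URL: the token plus any session parameters as a query string."""
    if not token:
        raise ValueError("a Scrapeless API token is required")
    query = urlencode({"token": token, **session_params})
    return f"{WS_ENDPOINT}?{query}"


def redacted(url: str) -> str:
    """Return the URL with the token value masked so it can be logged safely."""
    parts = urlsplit(url)
    params = parse_qs(parts.query)
    if "token" in params:
        params["token"] = ["REDACTED"]
    return urlunsplit(parts._replace(query=urlencode(params, doseq=True)))
```

For example, `build_cdp_url(token_from_env(), sessionName="Scrapeless browser", sessionTTL=1000)` produces a URL of the same shape as the ones built by hand in the examples, ready to pass as `cdp_url`.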

The following example demonstrates how to seamlessly integrate Crawl4AI with the Scrapeless Cloud Browser for efficient scraping, while supporting automatic proxy rotation, custom fingerprints, and profile reuse.

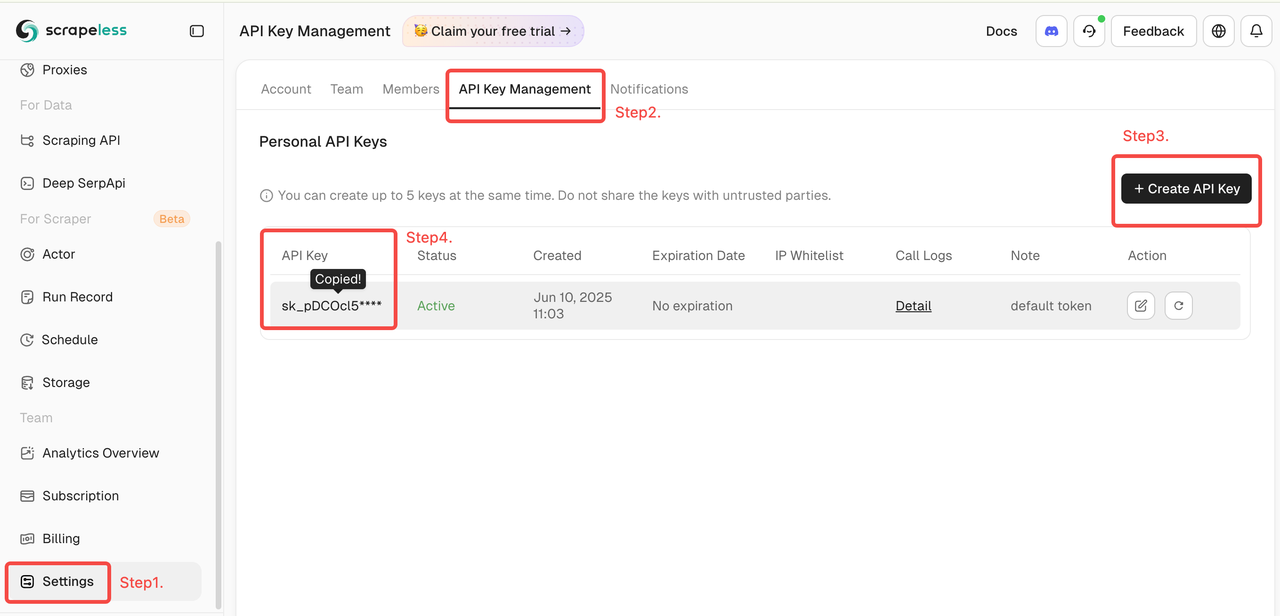

Obtain Your Scrapeless Token

Log in to Scrapeless and get your API Token.

1. Quick Start

The example below shows how to quickly and easily connect Crawl4AI to the Scrapeless Cloud Browser:

For more features and detailed instructions, see the introduction.

```python
from urllib.parse import urlencode

from crawl4ai import AsyncWebCrawler, BrowserConfig

scrapeless_params = {
    "token": "get your token from https://www.scrapeless.com",
    "sessionName": "Scrapeless browser",
    "sessionTTL": 1000,
}
query_string = urlencode(scrapeless_params)
scrapeless_connection_url = f"wss://browser.scrapeless.com/api/v2/browser?{query_string}"

# Point Crawl4AI at the remote browser over CDP instead of launching Chrome locally
crawler = AsyncWebCrawler(
    config=BrowserConfig(
        headless=False,
        browser_mode="cdp",
        cdp_url=scrapeless_connection_url,
    )
)
```

After configuration, Crawl4AI connects to the Scrapeless Cloud Browser via CDP (Chrome DevTools Protocol) mode, enabling web scraping without a local browser environment. Users can further configure proxies, fingerprints, session reuse, and other features to meet the demands of high-concurrency and complex anti-bot scenarios.

2. Global Automatic Proxy Rotation

Scrapeless supports residential IPs in 195 countries. Set the proxyCountry parameter to choose the region your requests are sent from. IPs rotate automatically, which helps avoid blocks.

```python
import asyncio
from urllib.parse import urlencode

from crawl4ai import AsyncWebCrawler, BrowserConfig, CrawlerRunConfig


async def main():
    scrapeless_params = {
        "token": "your token",
        "sessionTTL": 1000,
        "sessionName": "Proxy Demo",
        # Target country/region for the proxy: requests are sent through an IP in that region.
        # Use a country code such as US (United States), GB (United Kingdom), or ANY (any
        # country). See the country codes list for all supported options.
        "proxyCountry": "ANY",
    }
    query_string = urlencode(scrapeless_params)
    scrapeless_connection_url = f"wss://browser.scrapeless.com/api/v2/browser?{query_string}"

    async with AsyncWebCrawler(
        config=BrowserConfig(
            headless=False,
            browser_mode="cdp",
            cdp_url=scrapeless_connection_url,
        )
    ) as crawler:
        result = await crawler.arun(
            url="https://www.scrapeless.com/en",
            config=CrawlerRunConfig(
                wait_for="css:.content",
                scan_full_page=True,
            ),
        )

    # Pages may lack a title or description, so avoid KeyError
    metadata = result.metadata or {}
    print("-" * 20)
    print(f"Status Code: {result.status_code}")
    print("-" * 20)
    print(f"Title: {metadata.get('title')}")
    print(f"Description: {metadata.get('description')}")
    print("-" * 20)


if __name__ == "__main__":
    asyncio.run(main())
```

3. Custom Browser Fingerprints

To mimic real user behavior, Scrapeless supports randomly generated browser fingerprints and also allows custom fingerprint parameters. This effectively reduces the risk of being detected by target websites.
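A custom fingerprint travels inside the connection URL as JSON. The example that follows percent-encodes that JSON with `quote()` and then passes it through `urlencode()`, so the value ends up encoded twice. The stdlib-only sketch below builds such a URL and decodes it back to show that no information is lost; it assumes the service undoes both layers, which we infer from the example rather than from Scrapeless documentation. `build_url_with_fingerprint` and `decode_fingerprint` are hypothetical helper names.

```python
import json
from urllib.parse import parse_qs, quote, unquote, urlencode, urlsplit

# Endpoint as used throughout this article.
WS_ENDPOINT = "wss://browser.scrapeless.com/api/v2/browser"


def build_url_with_fingerprint(token: str, fingerprint: dict) -> str:
    """Hypothetical helper: JSON -> quote() -> urlencode(), mirroring the example below."""
    encoded = quote(json.dumps(fingerprint))  # first layer of percent-encoding
    query = urlencode({"token": token, "fingerprint": encoded})  # second layer
    return f"{WS_ENDPOINT}?{query}"


def decode_fingerprint(url: str) -> dict:
    """Hypothetical helper: reverse both encoding layers to recover the original dict."""
    raw = parse_qs(urlsplit(url).query)["fingerprint"][0]  # undoes urlencode()
    return json.loads(unquote(raw))  # undoes quote(), then parses the JSON


fingerprint = {
    "platform": "Windows",
    "screen": {"width": 1280, "height": 1024},
    "localization": {"languages": ["zh-HK", "en-US", "en"], "timezone": "Asia/Hong_Kong"},
}
url = build_url_with_fingerprint("your token", fingerprint)
print(decode_fingerprint(url) == fingerprint)  # True
```

Decoding a URL this way is also a quick check that the fingerprint you sent is the one you intended.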

```python
import asyncio
import json
from urllib.parse import quote, urlencode

from crawl4ai import AsyncWebCrawler, BrowserConfig, CrawlerRunConfig


async def main():
    # Customize the browser fingerprint. Keep userAgent consistent with platform:
    # a macOS user agent paired with a Windows platform is an easy mismatch to detect.
    fingerprint = {
        "userAgent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/134.1.2.3 Safari/537.36",
        "platform": "Windows",
        "screen": {"width": 1280, "height": 1024},
        "localization": {
            "languages": ["zh-HK", "en-US", "en"],
            "timezone": "Asia/Hong_Kong",
        },
    }
    # Serialize to JSON and percent-encode; urlencode() below adds a second encoding layer
    encoded_fingerprint = quote(json.dumps(fingerprint))

    scrapeless_params = {
        "token": "your token",
        "sessionTTL": 1000,
        "sessionName": "Fingerprint Demo",
        "fingerprint": encoded_fingerprint,
    }
    query_string = urlencode(scrapeless_params)
    scrapeless_connection_url = f"wss://browser.scrapeless.com/api/v2/browser?{query_string}"

    async with AsyncWebCrawler(
        config=BrowserConfig(
            headless=False,
            browser_mode="cdp",
            cdp_url=scrapeless_connection_url,
        )
    ) as crawler:
        result = await crawler.arun(
            url="https://www.scrapeless.com/en",
            config=CrawlerRunConfig(
                wait_for="css:.content",
                scan_full_page=True,
            ),
        )

    # Pages may lack a title or description, so avoid KeyError
    metadata = result.metadata or {}
    print("-" * 20)
    print(f"Status Code: {result.status_code}")
    print("-" * 20)
    print(f"Title: {metadata.get('title')}")
    print(f"Description: {metadata.get('description')}")
    print("-" * 20)


if __name__ == "__main__":
    asyncio.run(main())
```

4. Profile Reuse

Scrapeless assigns each profile its own independent browser environment, enabling persistent logins and identity isolation. Users can simply provide the profileId to reuse a previous session.
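Identity isolation only holds if each account always maps to the same profile and no two accounts share one. The sketch below enforces that rule on the client side. It is a hypothetical helper (`ProfileRegistry` is not part of Scrapeless or Crawl4AI), the profile IDs are placeholders for profiles you create in Scrapeless, and only the `profileId`, `sessionName`, and `sessionTTL` parameters come from this article.

```python
from dataclasses import dataclass, field
from urllib.parse import urlencode

# Endpoint as used throughout this article.
WS_ENDPOINT = "wss://browser.scrapeless.com/api/v2/browser"


@dataclass
class ProfileRegistry:
    """Hypothetical helper: pins each account to exactly one Scrapeless profileId."""

    token: str
    profiles: dict[str, str] = field(default_factory=dict)

    def register(self, account: str, profile_id: str) -> None:
        # Refuse to share a profile between accounts, which would mix their logins.
        owner = next((a for a, p in self.profiles.items() if p == profile_id), None)
        if owner is not None and owner != account:
            raise ValueError(f"profile {profile_id!r} already belongs to {owner!r}")
        self.profiles[account] = profile_id

    def connection_url(self, account: str, session_ttl: int = 1000) -> str:
        # Reusing the same profileId should bring back that account's persistent session.
        params = {
            "token": self.token,
            "sessionTTL": session_ttl,
            "sessionName": f"profile-{account}",
            "profileId": self.profiles[account],  # KeyError if the account was never registered
        }
        return f"{WS_ENDPOINT}?{urlencode(params)}"
```

Each URL from `connection_url()` can then be passed as `cdp_url`, exactly as in the example below.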

```python
import asyncio
from urllib.parse import urlencode

from crawl4ai import AsyncWebCrawler, BrowserConfig, CrawlerRunConfig


async def main():
    scrapeless_params = {
        "token": "your token",
        "sessionTTL": 1000,
        "sessionName": "Profile Demo",
        "profileId": "your profileId",  # create the profile in Scrapeless first
    }
    query_string = urlencode(scrapeless_params)
    scrapeless_connection_url = f"wss://browser.scrapeless.com/api/v2/browser?{query_string}"

    async with AsyncWebCrawler(
        config=BrowserConfig(
            headless=False,
            browser_mode="cdp",
            cdp_url=scrapeless_connection_url,
        )
    ) as crawler:
        result = await crawler.arun(
            url="https://www.scrapeless.com",
            config=CrawlerRunConfig(
                wait_for="css:.content",
                scan_full_page=True,
            ),
        )

    # Pages may lack a title or description, so avoid KeyError
    metadata = result.metadata or {}
    print("-" * 20)
    print(f"Status Code: {result.status_code}")
    print("-" * 20)
    print(f"Title: {metadata.get('title')}")
    print(f"Description: {metadata.get('description')}")
    print("-" * 20)


if __name__ == "__main__":
    asyncio.run(main())
```

FAQ

Q: How can I record and view the browser execution process?

A: Simply set the sessionRecording parameter to "true". The entire browser execution will be automatically recorded. After the session ends, you can replay and review the full activity in the Session History list, including clicks, scrolling, page loads, and other details. The default value is "false".
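When these parameters are assembled in Python, note that `urlencode()` calls `str()` on non-string values, so a Python `True` becomes `"True"` rather than the lowercase `"true"` shown here. We have not verified whether the service also accepts `"True"`; the sketch below simply normalizes booleans to be safe. `to_query_value` and `encode_params` are hypothetical helper names.

```python
from urllib.parse import urlencode


def to_query_value(value):
    """Map Python booleans to the lowercase strings used in this FAQ; pass other values through."""
    if isinstance(value, bool):
        return "true" if value else "false"
    return value


def encode_params(params: dict) -> str:
    # urlencode() would otherwise turn True into "True"
    return urlencode({key: to_query_value(value) for key, value in params.items()})


print(urlencode({"sessionRecording": True}))      # sessionRecording=True
print(encode_params({"sessionRecording": True}))  # sessionRecording=true
```

Passing the string `"true"` directly, as in the snippet that follows, avoids the issue as well.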

```python
scrapeless_params = {
    # ...
    "sessionRecording": "true",
}
```

Q: How do I use random fingerprints?

A: The Scrapeless Browser service automatically generates a random browser fingerprint for each session. Users can also set a custom fingerprint using the fingerprint field.

Q: How do I set a custom proxy?

A: Our built-in proxy network covers 195 countries/regions. To use your own proxy instead, pass its URL in the proxyURL parameter, for example: http://user:pass@ip:port.

(Note: Custom proxy functionality is currently available only for Enterprise and Enterprise Plus subscribers.)
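If the proxy username or password contains characters such as `@`, `:` or `/`, they must be percent-encoded, or the proxy URL will be split in the wrong place. The sketch below applies standard URL userinfo encoding (RFC 3986). `build_proxy_url` is a hypothetical helper, the address is a documentation example IP, and we have not confirmed how Scrapeless decodes credentials, so test with your own proxy.

```python
from urllib.parse import quote


def build_proxy_url(host: str, port: int, user: str, password: str, scheme: str = "http") -> str:
    """Assemble a scheme://user:pass@host:port proxy URL with percent-encoded credentials."""
    # safe="" makes quote() encode "/" too, so "@", ":" and "/" in credentials
    # cannot be mistaken for URL delimiters.
    return f"{scheme}://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}"


print(build_proxy_url("203.0.113.5", 8080, "user", "p@ss:word"))
# http://user:p%40ss%3Aword@203.0.113.5:8080
```

The resulting string is what goes into the proxyURL parameter; `urlencode()` then encodes it once more for the connection URL.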

```python
scrapeless_params = {
    # ...
    "proxyURL": "http://user:pass@ip:port",
}
```

Summary

Combining the Scrapeless Cloud Browser with Crawl4AI provides developers with a stable and scalable web scraping environment:

- No need to install or maintain local Chrome instances; all tasks run directly in the cloud.

- Reduces the risk of blocks and CAPTCHA interruptions, as each session is isolated and supports random or custom fingerprints.

- Improves debugging and reproducibility, with support for automatic session recording and playback.

- Supports automatic proxy rotation across 195 countries/regions.

- Uses the global Edge Node Service (ENS) for faster startup than similar services.

This collaboration marks an important milestone for Scrapeless and Crawl4AI in the web data scraping space. Moving forward, Scrapeless will focus on cloud browser technology, providing enterprise clients with efficient, scalable data extraction, automation, and AI agent infrastructure support. Leveraging its powerful cloud capabilities, Scrapeless will continue to offer customized and scenario-based solutions for industries such as finance, retail, e-commerce, SEO, and marketing, helping businesses achieve true automated growth in the era of data intelligence.

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.