Top 6 SERP APIs for Search Engine Scraping | Ultimate List 2025

Specialist in Anti-Bot Strategies

6 Best SERP Data APIs 2025

When choosing and testing different SERP scraping APIs, the following test directions can help evaluate the performance of each API:

- Crawl speed and response time.

- Anti-blocking test.

- Data consistency test.

- API error rates and error-handling capabilities.

- User load and concurrent processing.
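Speed, error handling, and concurrency can be measured with a small harness. Below is a minimal sketch. It assumes you wrap whichever SERP API you are testing in a function that takes a query and raises an exception on failure. `benchmark` and `fake_search` are hypothetical names, and `fake_search` only simulates a network call.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple


def benchmark(call: Callable[[str], object], queries: List[str],
              concurrency: int = 5) -> Dict[str, float]:
    """Run `call` once per query on `concurrency` worker threads and
    summarize latency and error rate."""
    if not queries:
        raise ValueError("need at least one query")

    def timed(query: str) -> Tuple[float, bool]:
        start = time.perf_counter()
        try:
            call(query)
            ok = True
        except Exception:
            ok = False  # any exception counts as a failed request
        return time.perf_counter() - start, ok

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed, queries))

    latencies = [elapsed for elapsed, _ in results]
    failures = sum(1 for _, ok in results if not ok)
    return {
        "requests": float(len(results)),
        "mean_s": statistics.mean(latencies),
        "median_s": statistics.median(latencies),
        "max_s": max(latencies),
        "error_rate": failures / len(results),
    }


# Stand-in for a real API call: sleeps briefly and fails on one query
def fake_search(query: str) -> str:
    time.sleep(0.01)
    if query == "fail":
        raise RuntimeError("simulated API error")
    return "ok"


stats = benchmark(fake_search, ["coffee", "tea", "fail", "juice"], concurrency=2)
print(stats)
```

To test under heavier load, raise `concurrency` and compare how `mean_s` and `error_rate` change between runs.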

Top 1. Scrapeless

Why is Scrapeless the best SERP scraping tool? Admittedly, we are a little biased, but Scrapeless earns its place on its strengths.

Scrapeless offers a free version: just join our Discord to get started! The Scrapeless SERP API is an innovative solution designed to simplify extracting data from search engines. With our advanced Scraping API, you can access the data you need without writing or maintaining complex crawling scripts; simple API calls give you immediate access to valuable information.

- Pros & Cons

| Pros | Cons |
|---|---|
| Strong anti-blocking and anti-fingerprinting capabilities | Relatively new to the market |
| High-concurrency crawling that intelligently adjusts request frequency and IP usage | |
| Each API call starts at $0.001 and drops below $0.0008 at higher volumes | |

Scrapeless supports scraping many major search engines, such as Google, Bing, and Yahoo. It also offers custom services; just tell us your needs.

- Use cases

Scrapeless is well suited to users who need to crawl data from websites with strict anti-crawler defenses, especially tasks that must get past search engine blocking. It is an ideal choice for SEO companies, market research agencies, and anyone who needs to collect large amounts of competitor data.


Top 2. ZenSerp

ZenSerp is a service that provides various APIs for collecting data from different search engines. The API is very simple to use, and the website includes a Playground that lets you configure and run API requests directly in the browser. You can also view the generated request code, although it sometimes contains errors.

- Pros & Cons

| Pros | Cons |
|---|---|
| Provides an API to access search results | Free trial is limited compared to other services and may not provide enough data for comprehensive testing |
| Pricing plans start at $49.99 | The generated code may contain errors that make it difficult or impossible to use, especially for beginners |
| Fast, with an average response time of 4.73 seconds | |

- Use cases

This SERP scraping tool is suitable for SEO analysis, ranking monitoring, and large-scale crawling tasks, and it appeals to developers and medium-sized enterprises.

Top 3. SerpWow

SerpWow is a simple, easy-to-use SERP scraping API focused on collecting high-precision search engine results in real time, with stable performance and powerful scraping capabilities. Overall, working with this SERP API feels similar to most other APIs, and the website provides clear documentation and examples.

- Pros & Cons

| Pros | Cons |
|---|---|
| The trial allows 100 requests, so you can test the service before committing to a paid plan | Its JSON response contains heterogeneous, redundant, unstructured data |
| The lowest plan starts at $25 per 1,000 requests | The average response time is 12.08 seconds, which is medium among the services considered |

- Use cases

It is suitable for SEO monitoring and keyword ranking analysis that require low-latency, high-frequency crawling. Small and medium-sized enterprises and SEO developers may choose it.

Top 4. DataForSEO

The DataForSEO SERP Scraper API fetches real-time SERP data, covering both organic and paid search results, in JSON format. It includes an API for scraping Google SERPs.

This tool's SERP API is very convenient to use. Instead of a standalone API key, it authenticates with credentials generated from your login and password.
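If a service derives its credentials from a login and password, the usual mechanism is HTTP Basic authentication (RFC 7617), where `login:password` is Base64-encoded into an `Authorization` header. The sketch below assumes DataForSEO follows that standard scheme. Confirm this in its documentation before relying on it; `basic_auth_header` is a hypothetical helper name.

```python
import base64


def basic_auth_header(login: str, password: str) -> dict:
    """Build an HTTP Basic Authorization header from a login and password.

    Assumption: the target service accepts standard Basic auth.
    """
    raw = f"{login}:{password}".encode("utf-8")
    token = base64.b64encode(raw).decode("ascii")
    return {"Authorization": f"Basic {token}"}


headers = basic_auth_header("user", "pass")
print(headers)  # {'Authorization': 'Basic dXNlcjpwYXNz'}
```

Base64 is an encoding, not encryption, so send these headers only over HTTPS.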

- Pros & Cons

Unfortunately, its responses include a lot of unnecessary information. Here are the main pros and cons of this service:

| Pros | Cons |
|---|---|
| 500-request trial available | Average response time is 6.75 seconds |
| The JSON response format is convenient | JSON response lacks result segmentation |
| Comprehensive data retrieval | Non-corporate access requires contacting support |

- Use cases

It suits high-precision, large-scale SEO crawling and is a good fit for large enterprises and SEO companies, especially for market research and SEO optimization tasks that depend on large datasets.

Top 5. HasData

HasData is a web scraping and automation platform that provides tools and infrastructure to simplify data extraction, web automation, and data processing. It offers a variety of extraction APIs, including a Google SERP API. However, in testing, this API was also one of the slowest.

- Pros & Cons

| Pros | Cons |
|---|---|
| Lower prices (starting at $29) | No support for some less common programming languages |
| Responses in JSON format with links to page screenshots | No built-in analysis or reporting tools |
| 30-day free trial with 200 Google SERP requests | The documentation is cumbersome, lacks clear examples of raw API usage, and often relies on its own libraries |

- Use cases

This SERP API suits small and medium-sized enterprises and developers running medium-scale search engine scraping, especially those who need to customize their scraping tasks flexibly.

Top 6. Scrape-IT

Scrape IT is an API focused on simple, efficient web data crawling. It suits users who want efficient crawling without complex features.

- Pros & Cons

| Pros | Cons |
|---|---|
| Documentation is very clear and includes code snippets in almost all languages | The API responds with delays when handling multiple simultaneous requests |
| Average response time of 5 seconds | |
| Supports Google and Bing, covering mainstream SEO crawling tasks | |

- Use cases

Scrape IT has clear advantages in JavaScript rendering and anti-blocking, making it especially suitable for users who need to crawl dynamic content.

Overall verdict

| API | Speed | Scalability | Documentation | Stability | Pricing | Verdict |
|---|---|---|---|---|---|---|
| Scrapeless | 4.6 | 4.7 | 4.7 | 4.8 | 4.8 | 🌟🌟🌟🌟🌟 |
| ZenSerp | 4.8 | 4.8 | 4.6 | 4.7 | 4.6 | 🌟🌟🌟🌟 |
| SerpWow | 4.0 | 4.7 | 4.5 | 4.7 | 4.5 | 🌟🌟🌟🌟 |
| DataForSEO | 4.3 | 4.6 | 4.6 | 4.8 | 4.7 | 🌟🌟🌟 |
| HasData | 4.0 | 4.5 | 4.7 | 4.7 | 4.8 | 🌟🌟🌟 |
| Scrape It | 4.3 | 4.5 | 4.5 | 4.4 | 4.7 | 🌟🌟🌟 |
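The verdict table does not say how the star rating relates to the five scores, so the sketch below is not the method behind that column. It simply lets you rank the APIs by your own priorities using a weighted average of the scores copied from the table. The weights in `my_weights` are an illustrative assumption.

```python
from typing import Dict, List, Optional

CRITERIA = ["speed", "scalability", "documentation", "stability", "pricing"]

# Scores copied from the "Overall verdict" table, in CRITERIA order
SCORES: Dict[str, List[float]] = {
    "Scrapeless": [4.6, 4.7, 4.7, 4.8, 4.8],
    "ZenSerp":    [4.8, 4.8, 4.6, 4.7, 4.6],
    "SerpWow":    [4.0, 4.7, 4.5, 4.7, 4.5],
    "DataForSEO": [4.3, 4.6, 4.6, 4.8, 4.7],
    "HasData":    [4.0, 4.5, 4.7, 4.7, 4.8],
    "Scrape It":  [4.3, 4.5, 4.5, 4.4, 4.7],
}


def weighted_score(scores: List[float],
                   weights: Optional[Dict[str, float]] = None) -> float:
    """Weighted average of per-criterion scores (equal weights by default)."""
    if weights is None:
        weights = {c: 1.0 for c in CRITERIA}
    total_weight = sum(weights[c] for c in CRITERIA)
    return sum(s * weights[c] for s, c in zip(scores, CRITERIA)) / total_weight


# Example: a team that cares twice as much about speed and pricing
my_weights = {"speed": 2.0, "scalability": 1.0, "documentation": 1.0,
              "stability": 1.0, "pricing": 2.0}

ranked = sorted(SCORES.items(), key=lambda kv: -weighted_score(kv[1], my_weights))
for name, scores in ranked:
    print(f"{name:<12} {weighted_score(scores, my_weights):.2f}")
```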

The Best SERP API Tool - Scrapeless: Usage Steps

Accurate and reliable Google SERP data is essential, yet getting it is a constant struggle. That's where the Scrapeless SERP API comes in: a powerful, affordable, and highly efficient tool designed to streamline your data extraction.

You'll be surprised by our competitive pricing: every 1,000 URLs cost only $1 (subscribe for further discounts)!
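At a flat rate of $1 per 1,000 URLs, each request costs $0.001. Here is a minimal budgeting sketch under that assumption. Volume discounts are not modeled, because their thresholds aren't listed in this article. `estimate_cost` is a hypothetical helper.

```python
def estimate_cost(requests: int, price_per_1k: float = 1.0) -> float:
    """Estimated cost in USD for `requests` calls at a flat per-1,000 rate.

    Assumption: a flat $1 per 1,000 requests; subscription discounts are ignored.
    """
    if requests < 0:
        raise ValueError("requests must be non-negative")
    return requests / 1000 * price_per_1k


print(estimate_cost(1_000))    # 1.0
print(estimate_cost(250_000))  # 250.0
```

If you qualify for a lower rate, pass it as `price_per_1k` to see the discounted total.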

Why choose the Scrapeless SERP API?

Scrapeless is purpose-built to handle the challenges of scraping Google's search engine results pages (SERPs). With advanced anti-detection mechanisms, high-speed performance, and an incredibly high success rate, Scrapeless ensures your data collection runs smoothly without interruptions or bans.

Whether you’re tracking keyword rankings, monitoring competitors, or gathering market insights, Scrapeless delivers consistently accurate results.

- Cost-Effective: Scrapeless is designed to offer exceptional value.

- Consistent Performance: With a proven track record, Scrapeless provides steady API responses, even under high workloads.

- Impressive Success Rate: Say goodbye to failed extractions; Scrapeless promises 99.99% successful access to Google SERP data.

- Scalable Solution: Handle thousands of queries effortlessly, thanks to the robust infrastructure behind Scrapeless.
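Even with a high advertised success rate, a client running thousands of queries will occasionally see a failed request. This is a minimal, API-agnostic retry sketch with exponential backoff and jitter. `with_retries` and `flaky` are hypothetical names, not part of any Scrapeless SDK, and `flaky` only simulates transient failures.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(call: Callable[[], T], attempts: int = 3,
                 base_delay: float = 0.5) -> T:
    """Call `call()`, retrying on any exception with exponential backoff.

    Re-raises the last error if every attempt fails.
    """
    for attempt in range(attempts):
        try:
            return call()
        except Exception:
            if attempt == attempts - 1:
                raise
            # Wait base_delay * 2^attempt seconds, plus up to 10% random jitter
            delay = base_delay * (2 ** attempt)
            time.sleep(delay + random.uniform(0, delay * 0.1))
    raise ValueError("attempts must be at least 1")


# Simulated endpoint that fails twice, then succeeds
calls = {"n": 0}


def flaky() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("simulated transient failure")
    return "ok"


print(with_retries(flaky, attempts=3, base_delay=0.01))  # ok
```

In practice you would retry only on transient errors, such as timeouts or 5xx responses, rather than on every exception.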

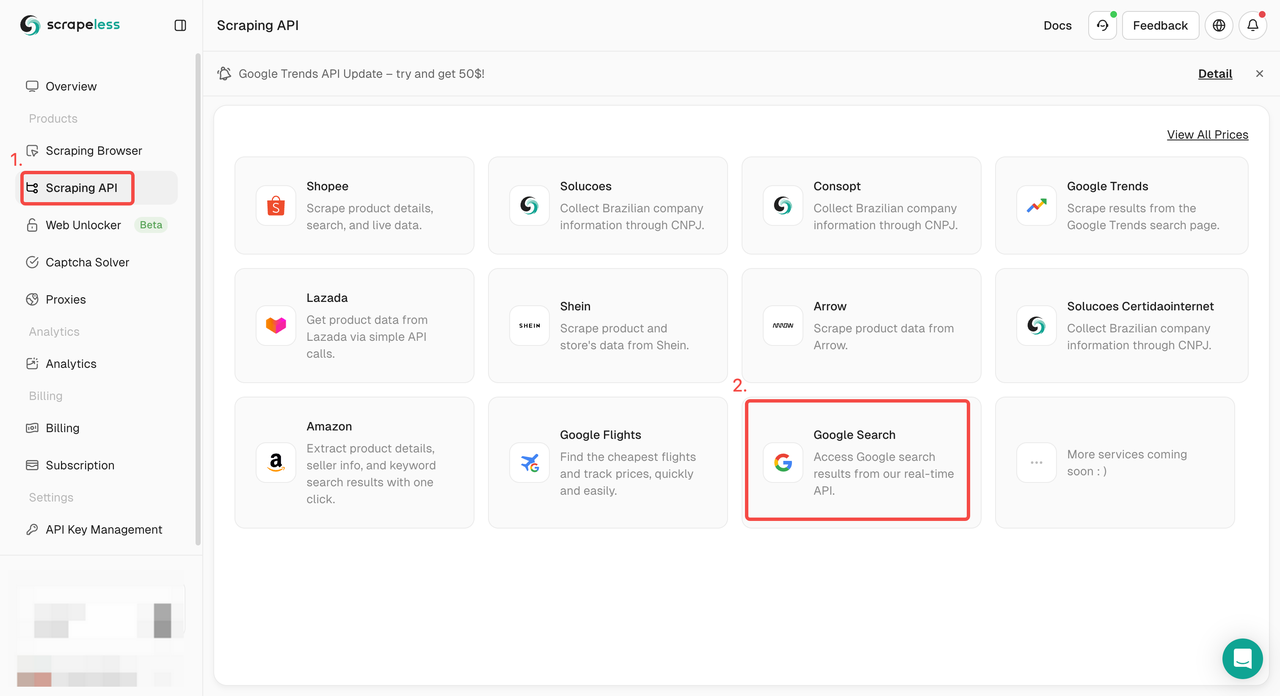

How to use Scrapeless Google Search API?

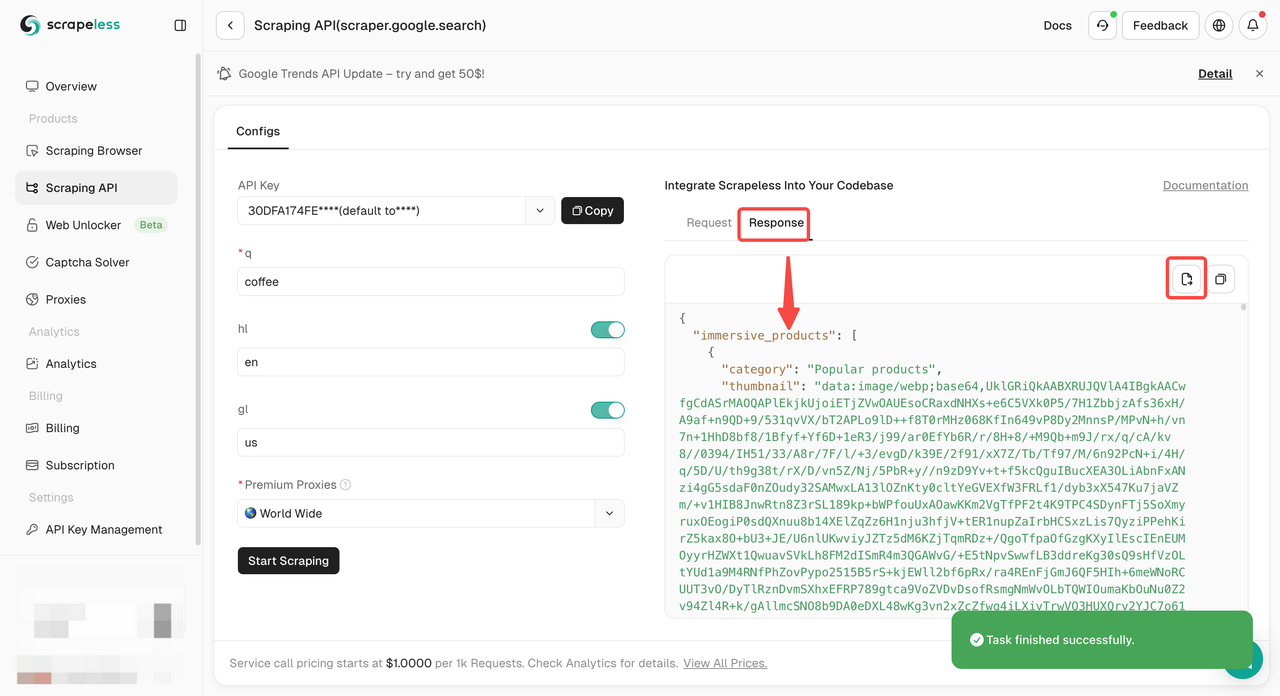

Step 1. Log into the Scrapeless Dashboard and go to "Google Search API".

Step 2. Configure the keywords, region, language, proxy, and any other settings you need in the left panel. Once everything looks right, click "Start Scraping".

- q: defines the query you want to search for.

- gl: defines the country to use for the Google search.

- hl: defines the language to use for the Google search.
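To compare rankings across regions, you can generate one request body per country/language pair. The sketch below is minimal. The body shape (`actor`, `input.q`, `input.hl`, `input.gl`) is copied from the sample code in this article. `build_search_payload` is a hypothetical helper, and the list of markets is purely illustrative.

```python
import json
from typing import Dict, List, Tuple


def build_search_payload(q: str, gl: str = "us", hl: str = "en") -> Dict:
    """Request body for the Google Search actor (shape taken from the sample code)."""
    return {"actor": "scraper.google.search",
            "input": {"q": q, "hl": hl, "gl": gl}}


# Illustrative (country, language) pairs to compare for one keyword
markets: List[Tuple[str, str]] = [("us", "en"), ("de", "de"), ("fr", "fr")]
payloads = [build_search_payload("coffee", gl, hl) for gl, hl in markets]

for p in payloads:
    print(json.dumps(p))
```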

Step 3. Get the crawling results and export them.
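If you export results from code rather than from the dashboard, CSV is a convenient target. This is a minimal sketch using only the standard library. It assumes you have already pulled a list of result dictionaries out of the API response. The field names `position`, `title`, and `link` are hypothetical, so map them to the schema in the API documentation.

```python
import csv
import io
from typing import Dict, List

# Hypothetical field names; adjust to the actual response schema
FIELDS = ["position", "title", "link"]


def results_to_csv(results: List[Dict], fields: List[str] = FIELDS) -> str:
    """Serialize result dicts to CSV text; keys not in `fields` are ignored."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(results)
    return buffer.getvalue()


sample = [
    {"position": 1, "title": "Example A", "link": "https://a.example", "snippet": "dropped"},
    {"position": 2, "title": "Example B", "link": "https://b.example"},
]
csv_text = results_to_csv(sample)
print(csv_text)
```

To write straight to disk, open the file with `open("results.csv", "w", newline="")` and pass it to `csv.DictWriter` instead of the `StringIO` buffer.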

Just need sample code to integrate into your project? We've got you covered! You can also visit our API documentation for samples in any language you need.

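- Python:

```python
import http.client
import json

# Open an HTTPS connection to the Scrapeless API host
conn = http.client.HTTPSConnection("api.scrapeless.com")

# Request body: the Google Search actor plus its input parameters
payload = json.dumps({
    "actor": "scraper.google.search",
    "input": {
        "q": "coffee",  # search query
        "hl": "en",     # language
        "gl": "us"      # country
    }
})

# Note: an authentication header is likely required as well;
# check the API documentation for the exact header name.
headers = {
    'Content-Type': 'application/json'
}

conn.request("POST", "/api/v1/scraper/request", payload, headers)
res = conn.getresponse()
data = res.read()
print(data.decode("utf-8"))
conn.close()
```

- Golang: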

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"strings"
)

func main() {
	url := "https://api.scrapeless.com/api/v1/scraper/request"
	method := "POST"

	// Request body: the Google Search actor plus its input parameters
	payload := strings.NewReader(`{
		"actor": "scraper.google.search",
		"input": {
			"q": "coffee",
			"hl": "en",
			"gl": "us"
		}
	}`)

	client := &http.Client{}
	req, err := http.NewRequest(method, url, payload)
	if err != nil {
		fmt.Println(err)
		return
	}
	// Note: an authentication header is likely required as well;
	// check the API documentation for the exact header name.
	req.Header.Add("Content-Type", "application/json")

	res, err := client.Do(req)
	if err != nil {
		fmt.Println(err)
		return
	}
	defer res.Body.Close()

	// io.ReadAll replaces the deprecated ioutil.ReadAll (Go 1.16+)
	body, err := io.ReadAll(res.Body)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(string(body))
}
```

What is Search Engine Scraping?

Search engine scraping (also known as SERP scraping) is the automated collection of data from search engine results pages (SERPs). This usually includes titles, descriptions, URLs, rankings, paid ads, images, videos, and other types of search results. Scraping this data helps you:

- Monitor competitors' SEO strategies

- Analyze keyword rankings and search trends

- Conduct market research and user behavior analysis

- Provide search engine optimization (SEO) suggestions

To avoid being blocked or flagged by search engines' anti-bot systems, scrapers usually rely on techniques such as proxy pools, IP rotation, and browser fingerprint simulation.
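At its simplest, IP rotation is a round-robin over a pool of proxies. This minimal sketch uses only the standard library. The proxy addresses are placeholders, and `ProxyRotator` is a hypothetical class. Real anti-detection also involves fingerprinting and rate control, which this sketch does not attempt.

```python
import itertools
import urllib.request
from typing import Iterable


class ProxyRotator:
    """Round-robin over a pool of proxy URLs."""

    def __init__(self, proxies: Iterable[str]):
        self._pool = list(proxies)
        if not self._pool:
            raise ValueError("proxy pool is empty")
        self._cycle = itertools.cycle(self._pool)

    def next_proxy(self) -> str:
        return next(self._cycle)

    def next_opener(self) -> urllib.request.OpenerDirector:
        """A urllib opener that routes HTTP and HTTPS traffic through the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)


# Placeholder proxy addresses
rotator = ProxyRotator([
    "http://proxy1.example:8080",
    "http://proxy2.example:8080",
    "http://proxy3.example:8080",
])
order = [rotator.next_proxy() for _ in range(4)]
print(order)  # proxy1, proxy2, proxy3, then back to proxy1
```

Calling `rotator.next_opener().open(url)` sends each request through a different proxy in turn.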

Scrapeless integrates rotating proxy and IP services.

Get this special SERP data API now!

Types of data extracted by SERP API

A SERP API crawls search engines and delivers high-quality search result data. It mainly extracts the following types of data to support in-depth SEO analysis and ranking monitoring:

- Search result ranking data

A SERP data API provides the specific ranking positions of keywords in search engine results, covering both organic rankings (natural search results) and paid advertising (Google Ads) positions.

- Web page title and description

Crawling the title and description of each search result is very important for SEO optimization and helps analyze the page structure and keyword density in the search results.

- URL address

The URL of each search result helps users analyze the SEO performance of the ranking page and determine whether it belongs to a competitor.

- Search engine features

A SERP API can extract data related to search engine features, such as featured snippets, images, videos, and news clips. This data is crucial for SEO strategy and content optimization.

- SERP analysis data

This includes SERP features, search intent analysis, search ads, and image and video results, helping users fully understand how a given keyword performs in search.

- Geo-Targeting data

A SERP API can also collect data for specific geographic locations, retrieving rankings across different regions and languages. This makes it well suited to global or localized SEO analysis.

- Search engine advertising data

This covers ad rankings, displayed ad copy, advertiser information, and more, helping users gauge the effectiveness of paid advertising.
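Ranking and URL data become actionable once you can ask "where does this domain rank organically?". This is a minimal sketch. `SerpResult` is a hypothetical record type, so you would need to map the API's actual JSON fields onto it. The sample results are made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional
from urllib.parse import urlparse


@dataclass
class SerpResult:
    position: int
    title: str
    url: str
    is_ad: bool = False  # paid (Google Ads) result vs. organic


def organic_rank(results: List[SerpResult], domain: str) -> Optional[int]:
    """Best organic position for `domain` (or its subdomains), or None."""
    for item in sorted(results, key=lambda r: r.position):
        if item.is_ad:
            continue  # skip paid placements
        host = urlparse(item.url).hostname or ""
        if host == domain or host.endswith("." + domain):
            return item.position
    return None


results = [
    SerpResult(1, "Sponsored", "https://ads.example.net/x", is_ad=True),
    SerpResult(1, "Competitor", "https://www.competitor.example/page"),
    SerpResult(2, "Our page", "https://shop.mysite.example/coffee"),
]
print(organic_rank(results, "mysite.example"))      # 2
print(organic_rank(results, "competitor.example"))  # 1
print(organic_rank(results, "missing.example"))     # None
```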

Wrapping Up

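The six SERP APIs covered in this blog suit different needs:

- DataForSEO: works well for large-scale, high-precision tasks at large enterprises and SEO companies.

- ZenSerp and SerpWow: good fits for SEO analysis and keyword ranking monitoring by developers and small to medium-sized businesses.

- HasData: best for medium-scale scraping that needs flexible customization.

- Scrape IT: a solid choice for crawling dynamic, JavaScript-rendered content.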

Scrapeless is your best SERP data API choice! It suits advanced users who need to bypass complex anti-crawler mechanisms, especially in scraping tasks that face aggressive anti-bot defenses.

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.