GEO vs SEO: The Fundamental Shift in Search Engine Optimization in 2026

Expert Network Defense Engineer

Preface: The Paradigm Shift from “Blue Links” to “Answer-First”

In the fields of digital marketing and content creation, Search Engine Optimization (SEO) has long been the cornerstone of traffic acquisition. However, with the rapid advancement of artificial intelligence, we are now standing at a historic turning point—the fundamental logic of search engines is being disrupted by Generative Engines (GEs).

This transformation is not merely a technological upgrade; it represents a fundamental reshaping of the rules that govern content visibility.

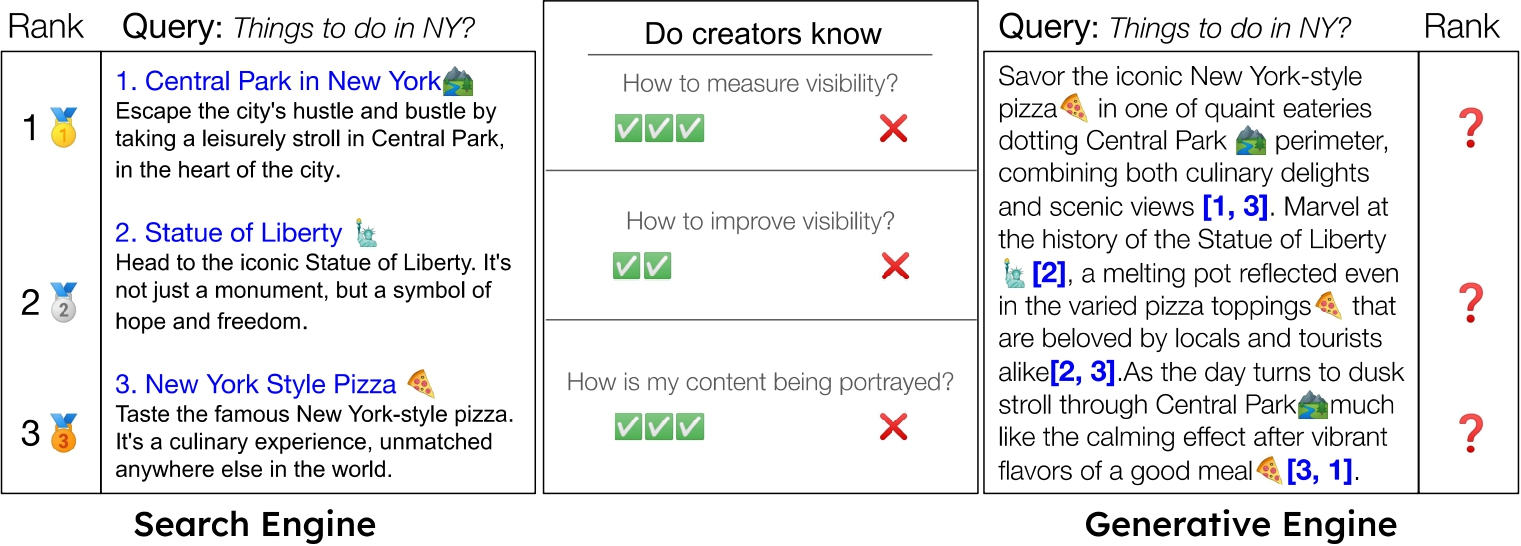

Traditional SEO aims to secure a spot among the top “blue links” on Search Engine Results Pages (SERPs) by optimizing keywords, improving page quality, and accumulating backlinks. At its core, this model revolves around ranking—a clear, trackable, and competitive position.

Today, however, generative engines powered by large language models (LLMs), such as Google AI Overviews or Perplexity, are redefining the search experience. Search is shifting from a “list of links” to direct answers. Users no longer need to click through multiple websites to evaluate information; instead, they receive AI-generated summaries that synthesize, integrate, and cite multiple sources.

As a result, the traditional SEO objective of “ranking higher” is becoming fundamentally outdated.

According to research from institutions such as Princeton University and the Indian Institutes of Technology, generative engines pose unprecedented challenges for content creators. The researchers describe the shift this way:

“The emergence of large language models (LLMs) has given rise to a new paradigm of search engines that leverage generative models to collect and synthesize information in order to answer user queries. These generative engines (GEs), capable of producing accurate and personalized answers, are rapidly replacing traditional search engines and improving user experience.”

To address this shift, a new optimization paradigm has emerged: Generative Engine Optimization (GEO).

Rather than focusing on traditional rankings, GEO aims to increase the likelihood that content is cited or mentioned within AI-generated answers.

This is not simply an evolution of SEO—it is a fundamental rewrite of the rules of search optimization itself.

Understanding the New Visibility Framework

Before diving into GEO strategies, we must first address a fundamental question:

How is “visibility” measured in generative engines?

Visibility in Traditional SEO: Ranking Position

In the SEO era, visibility was easy to define. A website’s visibility was determined by its ranking position:

Rank #1 was the most visible, rank #10 somewhat visible, and rank #11 effectively invisible.

This model had clear advantages—it was intuitive, easy to quantify, and simple to compare.

However, it relied on a core assumption: that users would click through search results one by one. In reality, even a #1 ranking has no value if no one clicks, while a #10 ranking can still be successful if it attracts significant user attention. Despite this complexity, SEO traditionally simplified visibility into a single metric: ranking.

Visibility in GEO: A Multi-Dimensional Evaluation

Generative engines break this simplistic model.

In AI-generated answers, there is no ranked list of results. A single response may cite five different sources simultaneously. All of them are “visible,” but to varying degrees.

Research from Princeton University and the Indian Institutes of Technology proposes three key visibility metrics to more comprehensively evaluate how content performs within generative engines:

1. Word Count Impact

This is the most basic metric:

How much of the AI-generated answer is derived from your content?

For example, if an AI response is 500 words long and 100 words are sourced from your content, your word count impact is 20%.

This metric reflects a simple reality: the more content that is cited, the more information users actually see from you. A single quoted sentence has limited impact, whereas an entire paragraph significantly increases user exposure and perceived value.
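As a minimal sketch, the calculation itself is a simple ratio. It assumes you have already worked out how many words of the answer are attributed to your content, which is the genuinely hard step and is not shown here; the function name is illustrative.

```python
def word_count_impact(cited_words: int, answer_words: int) -> float:
    """Return the share (0.0 to 1.0) of an AI answer's words taken from one source."""
    if answer_words <= 0:
        raise ValueError("answer_words must be positive")
    if not 0 <= cited_words <= answer_words:
        raise ValueError("cited_words must be between 0 and answer_words")
    return cited_words / answer_words


# Example from the text: 100 of the answer's 500 words come from your content.
impact = word_count_impact(cited_words=100, answer_words=500)
print(f"Word count impact: {impact:.0%}")  # prints "Word count impact: 20%"
```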

2. Position-Weighted Word Count

This metric accounts for a well-established psychological principle: user attention decreases from top to bottom.

Content that appears at the beginning of an AI-generated answer is far more likely to be noticed than content buried at the end. The position-weighted word count assigns higher weights to citations appearing earlier in the response and lower weights to those appearing later.

As a result, a 200-word citation at the top of an answer is substantially more valuable than a 200-word citation at the bottom.

This metric is critical because it reflects real user behavior. Even if your content is cited extensively, it may have little practical impact if it consistently appears at the end of the response—where users may never reach it.
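The sketch below illustrates the idea under a stated assumption: each sentence's words are weighted by an exponential decay, exp(-position / n), where n is the number of sentences in the answer. This is in the spirit of the position-weighted metric in the GEO research, but treat the exact weighting as an assumption rather than the paper's formula. The answer is modeled as a hypothetical list of (source, word count) pairs in reading order.

```python
import math


def position_weighted_impact(sentences, source):
    """Position-weighted share of an answer attributed to `source`.

    `sentences` lists the answer in reading order as (source_id, word_count)
    pairs. Each sentence's words are weighted by exp(-position / n), so earlier
    sentences count more. This decay is an illustrative assumption.
    """
    n = len(sentences)
    total_words = sum(words for _, words in sentences)
    if n == 0 or total_words == 0:
        return 0.0
    weighted = sum(
        words * math.exp(-pos / n)
        for pos, (src, words) in enumerate(sentences)
        if src == source
    )
    return weighted / total_words


# The same 200-word citation, placed first versus last in a 500-word answer.
at_top = [("you", 200), ("other", 100), ("other", 100), ("other", 100)]
at_bottom = [("other", 100), ("other", 100), ("other", 100), ("you", 200)]
top = position_weighted_impact(at_top, "you")
bottom = position_weighted_impact(at_bottom, "you")
print(round(top, 3), round(bottom, 3))  # prints 0.4 0.189
```

With this weighting, the same 200-word citation scores 0.4 when it opens a four-sentence answer and about 0.189 when it closes it. A steeper or gentler decay changes the numbers but not the ordering.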

3. Subjective Display Impact

This is the most complex, yet most meaningful metric. It evaluates the quality and influence of a citation across seven dimensions:

- Relevance: How closely the cited content matches the user query

- Impact: Whether the citation contributes a core insight or merely a supporting detail

- Uniqueness: Whether the information is exclusive to this source or widely duplicated

- Authority: Whether the cited source is perceived as credible and trustworthy

- Completeness: Whether the information is presented fully or partially truncated

- Contextual Appropriateness: Whether the citation is placed within a suitable explanatory context

- Brand Presence: Whether the brand name is clearly associated with the cited content

This metric captures a crucial truth: not all citations are equal.

A citation from an authoritative source, highly relevant to the query, positioned prominently, clearly attributed, and explicitly branded is vastly more valuable than a vague, unattributed reference embedded deep within the response.
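The seven dimensions are judgments, typically made by a human rater or an LLM acting as an evaluator, so there is no single formula for them. A minimal way to combine such judgments into one number is a weighted mean; the 1 to 5 scale, the equal default weights, and the function name below are all illustrative assumptions.

```python
DIMENSIONS = (
    "relevance",
    "impact",
    "uniqueness",
    "authority",
    "completeness",
    "contextual_appropriateness",
    "brand_presence",
)


def subjective_display_impact(scores, weights=None):
    """Weighted mean of per-dimension scores (assumed to be on a 1-5 scale).

    `scores` maps every dimension to a rating from a human or LLM evaluator.
    `weights`, if given, must also cover every dimension; default is equal weights.
    """
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    if weights is None:
        weights = {d: 1.0 for d in DIMENSIONS}
    total_weight = sum(weights[d] for d in DIMENSIONS)
    return sum(scores[d] * weights[d] for d in DIMENSIONS) / total_weight


# Hypothetical ratings: a prominent, branded citation vs. a vague, unattributed one.
strong = {d: 5 for d in DIMENSIONS}
vague = {**{d: 2 for d in DIMENSIONS}, "brand_presence": 1}
print(subjective_display_impact(strong), round(subjective_display_impact(vague), 2))  # prints 5.0 1.86
```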

From Metrics to Strategy

These three metrics point directly to the strategic direction of GEO.

It is no longer sufficient to be cited. Content must:

- Occupy a larger proportion of the generated answer (Word Count Impact)

- Appear in highly visible positions (Position-Weighted Word Count)

- Be presented in a way that maximizes influence and attribution (Subjective Display Impact)

This multi-dimensionality also helps explain why only around 30% of brands remain visible across two consecutive AI-generated answers: because visibility depends on several dimensions at once, a change in any single one of them can significantly affect overall visibility.
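One possible way to track a figure like this for your own prompts is to run the same query twice and measure the share of brands from the first answer that reappear in the second. The sketch assumes brand mentions have already been extracted from each answer; the brand names are made up.

```python
def persistence_rate(first_answer_brands, second_answer_brands):
    """Share of brands in the first answer that also appear in the second."""
    first = {b.lower() for b in first_answer_brands}
    second = {b.lower() for b in second_answer_brands}
    if not first:
        return 0.0
    return len(first & second) / len(first)


# Hypothetical brands extracted from two runs of the same query.
run_1 = ["Acme", "Globex", "Initech", "Umbrella", "Hooli",
         "Stark", "Wayne", "Wonka", "Cyberdyne", "Tyrell"]
run_2 = ["Acme", "Hooli", "Tyrell", "Soylent", "Vandelay"]
print(f"{persistence_rate(run_1, run_2):.0%}")  # prints "30%"
```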

Core Principles of GEO: Three New Visibility Signals

Now that we understand how GEO measures visibility, we can examine the factors that determine performance across these metrics.

Signal 1: Freshness Is the Foundation of Trust

In the era of AI search, content freshness has become a core trust signal—not merely for ranking algorithms, but for the LLMs themselves, which must ensure informational accuracy.

When AI models generate answers—especially for commercial or comparative queries—they prioritize fresh and up-to-date content. Outdated information can easily lead to incorrect recommendations. For example, if a SaaS tool has changed its pricing or discontinued a feature, but an AI model cites content from three months ago, the user receives an inaccurate answer. At scale, this is fatal to the credibility of an AI system.

The data makes this clear:

- Pages without quarterly updates are three times less likely to be cited by AI than recently updated pages

- Over 70% of AI-cited pages have been updated within the past 12 months

- For commercial queries, freshness requirements are even stricter—83% of commercial citations come from pages updated within the last year

- In fast-moving industries such as SaaS, finance, and news, the effective content update window may shrink to three months or less

This means content maintenance is no longer an optional optimization—it is a baseline requirement for GEO. Brands must establish disciplined content update workflows and treat maintenance as a continuous, non-negotiable process.
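A maintenance workflow can start with a simple staleness audit. The sketch below assumes you already have a last-updated date for each page (for example from your CMS or sitemap); the URLs and dates are hypothetical. The 90-day default mirrors the three-month window mentioned for fast-moving industries, and 365 days mirrors the 12-month figure.

```python
from datetime import date, timedelta


def stale_pages(last_updated, today, max_age_days=90):
    """Return URLs not updated within `max_age_days` of `today`, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = sorted((updated, url) for url, updated in last_updated.items() if updated < cutoff)
    return [url for _, url in stale]


# Hypothetical pages and last-updated dates.
pages = {
    "https://example.com/pricing": date(2025, 9, 1),
    "https://example.com/blog/geo-guide": date(2026, 1, 10),
    "https://example.com/compare": date(2025, 3, 15),
}
today = date(2026, 2, 1)
print(stale_pages(pages, today))                    # 90-day window: compare, pricing
print(stale_pages(pages, today, max_age_days=365))  # 12-month window: []
```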

Signal 2: Structured Content Is the Language of LLMs

AI models do not interpret ambiguous or loosely structured content the way humans do. They rely on clear structural signals to quickly and accurately understand a page. Structured content provides these signals, making information easier for LLMs to parse, extract, and cite.

The Power of Heading Hierarchy

Clear, sequential heading structures (a single H1 followed by H2 and H3) are critical. Research shows that orderly heading hierarchies are associated with 2.8× higher citation likelihood.

When a page follows a clean H1–H2–H3 structure, models can rapidly identify the topic, key sections, and information hierarchy. By contrast, pages with multiple H1s or skipped levels (e.g., jumping from H1 directly to H3) are harder for models to interpret and tend to be cited less often.
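Both problems named above, multiple H1s and skipped levels, are easy to check mechanically. This sketch uses only Python's standard-library `html.parser`; it is an illustrative audit, and passing it says nothing on its own about citation likelihood.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records heading levels (1-6) in the order they appear."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag names, so "H2" arrives as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html):
    """List heading-structure problems: H1 count != 1, or skipped levels."""
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    levels = collector.levels
    issues = []
    h1_count = levels.count(1)
    if h1_count != 1:
        issues.append(f"expected exactly one <h1>, found {h1_count}")
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            issues.append(f"skipped level: h{prev} -> h{cur}")
    return issues


good = "<h1>GEO vs SEO</h1><h2>Signals</h2><h3>Freshness</h3><h2>Strategy</h2>"
bad = "<h1>GEO</h1><h3>Freshness</h3><h1>SEO</h1>"
print(heading_issues(good))  # prints []
print(heading_issues(bad))
```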

Schema Markup as a Relevance Signal

Rich schema markup—particularly FAQ and Q&A schema—provides strong relevance signals to both search engines and LLMs. Pages using FAQ schema are 40% more likely to be cited by AI.

When FAQ schema is present, models can directly map structured answers to user queries. Pages that implement three or more schema types show a further 13% increase in citation likelihood.
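For reference, FAQ schema is usually embedded as JSON-LD using the schema.org `FAQPage`, `Question`, and `Answer` types. The sketch below generates such a block from question/answer pairs; the helper name and sample text are illustrative, and the output should be validated with a structured-data testing tool before publishing.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Illustrative question/answer pairs.
faq = faq_jsonld([
    ("What is GEO?",
     "Generative Engine Optimization aims to increase the likelihood that "
     "content is cited in AI-generated answers."),
    ("Does GEO replace SEO?",
     "No. Most teams reallocate effort between the two rather than choosing one."),
])
print('<script type="application/ld+json">')
print(json.dumps(faq, indent=2))
print("</script>")
```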

Lists and Readability

Breaking dense text into lists and scannable blocks improves both human readability and LLM extraction efficiency. Data shows that nearly 80% of ChatGPT-cited pages use list-based structures, compared to only 29% among Google’s traditional top-ranking results.

Signal 3: Off-Site Credibility Builds the Trust Layer

This is perhaps the most counterintuitive—and most important—finding in GEO:

the authority of a brand’s own website is becoming less important in AI search. In its place, communities and user-generated content (UGC) are emerging as a stronger trust layer.

The Overwhelming Advantage of Third-Party Sources

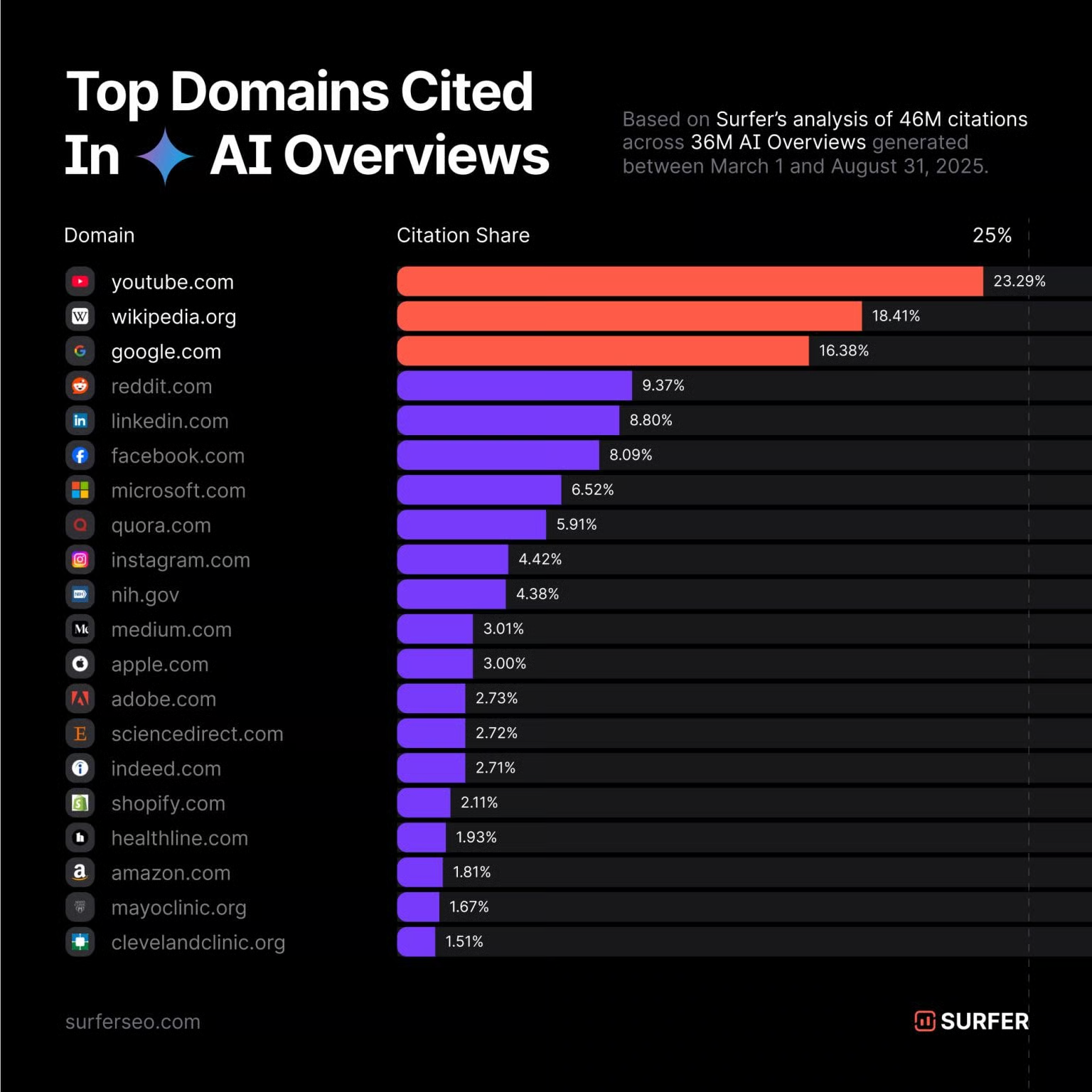

AirOps analyzed data from over 21,000 brands and uncovered a striking result:

85% of brand mentions in AI-generated answers come from third-party sources rather than brand-owned websites.

Even more telling, brands are 6.5× more likely to be cited through third-party content than through their own domains.
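Auditing this for your own brand starts with separating cited URLs into brand-owned and third-party sources. A minimal sketch, assuming you have collected the citation URLs from AI answers and know which domains you own; `example.com` and the other URLs are placeholders. Subdomains of an owned domain count as owned.

```python
from urllib.parse import urlparse


def third_party_share(cited_urls, brand_domains):
    """Fraction of cited URLs hosted outside the brand's own domains."""
    owned = [d.lower() for d in brand_domains]

    def is_owned(url):
        host = (urlparse(url).hostname or "").lower()
        # Exact match or a subdomain, e.g. blog.example.com for example.com.
        return any(host == d or host.endswith("." + d) for d in owned)

    if not cited_urls:
        return 0.0
    third_party = sum(1 for url in cited_urls if not is_owned(url))
    return third_party / len(cited_urls)


# Placeholder citations collected from AI answers about a hypothetical brand.
citations = [
    "https://www.example.com/pricing",
    "https://www.reddit.com/r/SaaS/comments/abc123/",
    "https://www.youtube.com/watch?v=xyz",
    "https://reviews.example.org/best-crm-tools",
]
print(f"{third_party_share(citations, ['example.com']):.0%}")  # prints "75%"
```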

In practical terms, this means a perfectly crafted article on your website—produced over months—may be less valuable than a 10-minute Reddit comment written by a real user. Domain authority is no longer the deciding factor. What matters is how the broader web talks about you.

Nearly 90% of third-party brand mentions come from lists, comparison pages, and review roundups. AI models recognize that when multiple independent sites list a product as “one of the best,” it represents a powerful consensus signal.

The Power of Community Discussions

Approximately 48% of AI search citations originate from community platforms such as Reddit and YouTube. This is not because these platforms host the most authoritative content, but because they represent authentic, unfiltered user discussions and feedback.

Reddit alone appears as a cited source in roughly 22% of generated answers.

AI models have learned a simple truth: when real users openly discuss and recommend a product, it is far more credible than a brand claiming, “We’re great,” on its own website.

Why Traditional SEO Strategies Fail in Generative Engines

With these three core GEO signals in mind, we can now see why many traditional SEO strategies not only fail in generative engines but can actually be harmful.

The End of Keyword Stuffing

Keyword stuffing was once a central SEO tactic. By repeatedly inserting target keywords into content, site owners could signal to search engines that “this page is highly relevant to this query.” In an era of relatively simple algorithms, this approach was often effective.

In generative engines, however, the situation is fundamentally different.

Experimental data from GEO research shows that keyword stuffing has little to no positive effect in generative search—and in many cases, it reduces visibility. This is not because language models fail to understand keywords, but because they understand them too well.

LLMs interpret content through semantics and context rather than keyword matching. When excessive keywords are inserted, models recognize the hallmarks of low-quality content: unnatural language patterns, repetition, and forced phrasing. These signals are strongly associated with cheap optimization tactics and directly lower a model’s assessment of content quality and citation likelihood.

AI models are explicitly trained to detect and filter spam, and keyword stuffing is one of the most obvious indicators of spam-like content.
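Language models do not score pages by keyword density, but a density check is a cheap way to catch the unnatural repetition described above in your own drafts. The sketch below counts a phrase per 100 words; it is a crude heuristic, the sample sentences are invented, and no particular threshold is implied.

```python
import re


def keyword_density(text, keyword):
    """Occurrences of the phrase `keyword` per 100 words of `text`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = re.findall(r"[a-z0-9']+", keyword.lower())
    if not phrase:
        raise ValueError("keyword must contain at least one word")
    if not words:
        return 0.0
    n = len(phrase)
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return 100 * hits / len(words)


# Invented sample copy: a stuffed version and a natural version.
stuffed = ("Best CRM software. Our best CRM software is the best CRM software "
           "for teams needing best CRM software.")
natural = "Our CRM helps small sales teams track deals and follow up on time."
print(round(keyword_density(stuffed, "best CRM software"), 1))  # prints 22.2
print(keyword_density(natural, "best CRM software"))            # prints 0.0
```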

The Relative Decline of Domain Authority

This shift is even more profound, as it signals a fundamental reordering of the search ecosystem itself.

In the SEO era, domain authority was the foundation of everything. Once established, it was difficult to displace. Large websites benefited from high authority, allowing them to rank well even with mediocre content, while smaller sites—despite producing higher-quality material—struggled to compete. This created a classic “winner-takes-all” environment.

In AI-driven search, this dynamic is being reversed.

Brands are 6.5× more likely to be cited through third-party sources than through their own websites, directly challenging the centrality of domain authority. Authority is no longer determined by the strength of your domain alone, but by how the broader web discusses and references your brand.

This transition has far-reaching implications for every participant in the search ecosystem.

The Democratizing Effect of GEO: Lower-Ranked Sites Benefit the Most

If the decline of domain authority is bad news for large websites, then GEO is exceptionally good news for smaller ones—representing a shift unlike anything seen before.

GEO research compared the impact of GEO optimization across sites at different ranking positions:

- Sites ranked #5 experienced a 115% increase in visibility after applying GEO strategies

- Sites ranked #1, using the same methods, saw visibility decline by up to 30%

Traditional SEO, driven by backlink volume and domain authority, tends to reinforce already dominant players. GEO, by contrast, prioritizes content quality, freshness, structural clarity, and community validation—creating a genuine opportunity for small and mid-sized websites to catch up.

Smaller competitors no longer need to outspend large brands on link-building campaigns. They simply need to create better content.

This is true democratization.

Reallocating Resources: The Shift from SEO to GEO + SEO

With a clear understanding of how GEO works, a practical question emerges: how should limited resources be allocated? This is not a binary choice between SEO and GEO, but a matter of reprioritization.

Resource Allocation: Then and Now

The Old SEO Model:

- 80% link building and domain authority growth

- 15% technical optimization

- 5% content quality

This allocation reflects a belief that rankings are primarily driven by link authority, justifying the concentration of resources in that area.

The New GEO + SEO Model:

- 40% content freshness and quality (serving both SEO and GEO)

- 25% structured content and interpretability (serving both SEO and GEO)

- 15% third-party engagement and community building (primarily for GEO)

- 15% technical optimization and link building (primarily for SEO)

- 5% AI visibility monitoring (a new GEO-specific function)

The Logic Behind the Reallocation

First, content freshness and structured presentation are critical to both SEO and GEO, making them the highest priorities. Freshness supports SEO by signaling active site maintenance, and GEO by ensuring informational accuracy. Structured content benefits SEO by improving crawlability, and GEO by enabling LLMs to quickly parse and extract key information.

Second, third-party engagement primarily supports GEO, as it directly influences the likelihood of brand citations: brands are 6.5× more likely to be cited through third-party content than through their own domains.

Finally, technical optimization and link building remain important, but their relative priority declines. This is not because they no longer matter, but because within the GEO framework their impact is diminished. Even sites with imperfect technical foundations can still earn AI citations if their content is sufficiently high-quality, up-to-date, and actively discussed across the web.

Conclusion: The Beginning of a New Era

A paradigm shift in search has already happened.

This is not a distant future scenario—it is a reality unfolding right now in 2025–2026.

The way users access information is undergoing a fundamental transformation.

They are no longer simply clicking through search result pages; instead, they are increasingly reading answers generated directly by AI. Search is evolving from link distribution to answer generation.

In this context, GEO (Generative Engine Optimization) is fundamentally about rebuilding the connection between brands and users in the age of generative engines.

Traditional SEO connects users to ranked lists of websites.

GEO connects users directly to information itself.

As generative engines become the primary gateway for information discovery, the latter clearly aligns more closely with real user behavior.

For brands targeting global markets, GEO represents a rare opportunity to leapfrog competitors.

While most players are still locked into traditional SEO content production models, brands that adopt GEO strategies early are already gaining disproportionate visibility and mindshare within generative engines.

This is not merely a technical evolution; it is a shift in mindset.

The future is already here; it is just unevenly distributed.

Now is the time to act.

As a data provider, we developed LLM Chat Scraper in response to a deceptively simple question we were repeatedly asked:

“How can we capture the real responses users receive when interacting with large models such as ChatGPT, Gemini, or Perplexity?”

Official APIs cannot fully replicate real user conversations, while manual testing—though intuitive—cannot be scaled or systematized for serious research.

To address this gap, we built LLM Chat Scraper, a tool designed to analyze AI visibility.

It captures complete front-end responses from generative engines—including full answers and citations—without requiring login and without exposing conversation context.

This enables teams to truly understand how their brands and content are presented and cited within generative engines.

Currently, LLM Chat Scraper supports front-end data collection and analysis from the following LLM chat platforms:

- ChatGPT

- Perplexity

- Microsoft Copilot

- Google Gemini

- Google AI Mode

- Grok

We charge only for successfully scraped results, with pricing as low as $1 per 1,000 records—approximately one-tenth the cost of official APIs.

If you’re interested, feel free to contact us to request a free trial.

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.