Cloudflare Error 1015: What It Is and How to Avoid It

Advanced Bot Mitigation Engineer

About 20% of websites use Cloudflare. It protects millions of websites and services from attacks, but those same protections cause many website owners and visitors to run into Cloudflare Error 1015.

For developers, especially web scrapers, it can be very frustrating. Error 1015 blocks access to the target website until the rate limit period expires, resulting in interrupted data collection.

In this article, we discuss how to avoid Cloudflare Error 1015 and cover the tools and strategies that help you solve it. First, let's look at what this error is and why it is triggered.

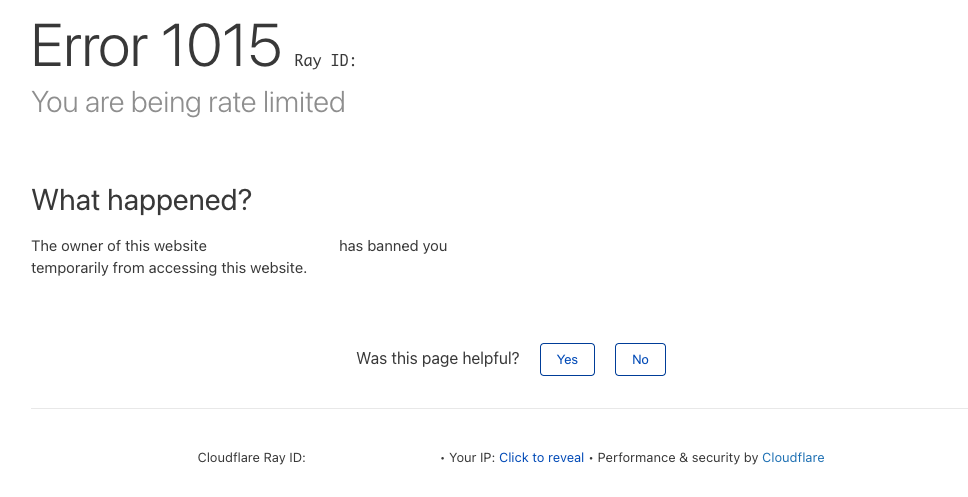

What is Cloudflare Error 1015?

Cloudflare Error 1015, commonly known as "error 1015 rate limited," is an error that occurs when Cloudflare’s rate limiting rules are triggered. This error is designed to protect websites from excessive traffic or potential attacks by temporarily blocking access to the site.

4 Causes of Cloudflare Error 1015

The main cause of Error 1015 is exceeding the rate limit set for a specific IP address. This can happen in a number of situations:

- High traffic: Users or automated scripts making a large number of requests to a website in a short period of time can trigger rate limits. This is often seen during web scraping or running automated bots.

- DDoS protection: To prevent distributed denial of service (DDoS) attacks, Cloudflare uses rate limiting as a defense mechanism. If an IP address is making requests at a rate similar to a DDoS attack, it may be temporarily blocked.

- Application misconfiguration: Sometimes, legitimate applications can be misconfigured and inadvertently make too many requests. For example, a poorly designed API client may repeatedly request data in a loop.

- Shared IP addresses: Users behind a shared IP address (such as those in a corporate network or using a VPN) may collectively exceed rate limits even if individual usage is within acceptable limits.
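To make the shared-IP case concrete, here is a minimal sketch of a per-IP sliding-window limiter. It is an illustration only and not Cloudflare's actual implementation; the `SlidingWindowLimiter` class, the limit of 5 requests per 10 seconds, and the IP address are all assumptions chosen for the example.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Toy per-IP limiter: allow at most `limit` requests per `window` seconds."""

    def __init__(self, limit, window):
        self.limit = limit
        self.window = window
        self.hits = defaultdict(deque)  # ip -> timestamps of recent requests

    def allow(self, ip, now):
        recent = self.hits[ip]
        # Drop timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.limit:
            return False  # a real site would answer with a rate-limit block here
        recent.append(now)
        return True


limiter = SlidingWindowLimiter(limit=5, window=10.0)

# Three users behind one shared IP, two requests each, all within one second.
shared_ip = "203.0.113.7"
results = [limiter.allow(shared_ip, now=0.1 * i) for i in range(6)]
print(results)  # [True, True, True, True, True, False]
```

No single user sent more than two requests, yet the sixth request from the shared address is rejected because the limiter only sees the IP.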

What Is the Duration of a Rate Limit by Cloudflare?

The duration of a rate limit ban imposed by Cloudflare can vary significantly. Website owners who utilize Cloudflare can set the ban duration anywhere from 10 seconds to 24 hours. For those using the free or pro plans, the maximum duration they can impose is 1 hour.

Regarding the Cloudflare API, there is a global rate limit of 1,200 requests per user every 5 minutes. If this limit is exceeded, all subsequent API calls will be blocked for the next 5 minutes.
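As a worked example of that figure, 1,200 requests per 5 minutes averages out to one request every 0.25 seconds. The sketch below spaces calls evenly at that interval, which is a conservative choice (the limit itself allows bursts). The `Throttle` class is a hypothetical helper, not part of any Cloudflare SDK.

```python
import time

API_LIMIT = 1200         # requests allowed per window (figure quoted above)
WINDOW_SECONDS = 5 * 60  # five-minute window

MIN_INTERVAL = WINDOW_SECONDS / API_LIMIT  # 0.25 seconds between calls


class Throttle:
    """Block just long enough to keep calls at least `interval` seconds apart."""

    def __init__(self, interval, clock=time.monotonic, sleep=time.sleep):
        self.interval = interval
        self.clock = clock
        self.sleep = sleep
        self.next_allowed = None  # earliest time the next call may start

    def wait(self):
        now = self.clock()
        if self.next_allowed is not None and now < self.next_allowed:
            self.sleep(self.next_allowed - now)
            now = self.next_allowed
        self.next_allowed = now + self.interval
```

Calling `Throttle(MIN_INTERVAL).wait()` before each API call keeps a single client under the stated limit.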

How to Avoid Cloudflare Error 1015

When you encounter Cloudflare Error 1015 ("You are being rate limited"), there are several effective ways to solve the problem. We introduce the key ones below.

1. Scrapeless Web Unlocker

Implement specialized web unlocker solutions designed to bypass Cloudflare's security measures effectively. Scrapeless web unlocker leverages advanced techniques to bypass CAPTCHA challenges and other blocking mechanisms, ensuring uninterrupted access to protected websites.

Scrapeless is an expandable suite of tools, including a web unlocker, proxies, a CAPTCHA solver, a headless browser, and anti-bot solutions, designed to work together or independently.

Here you can find the detailed unlocking steps:

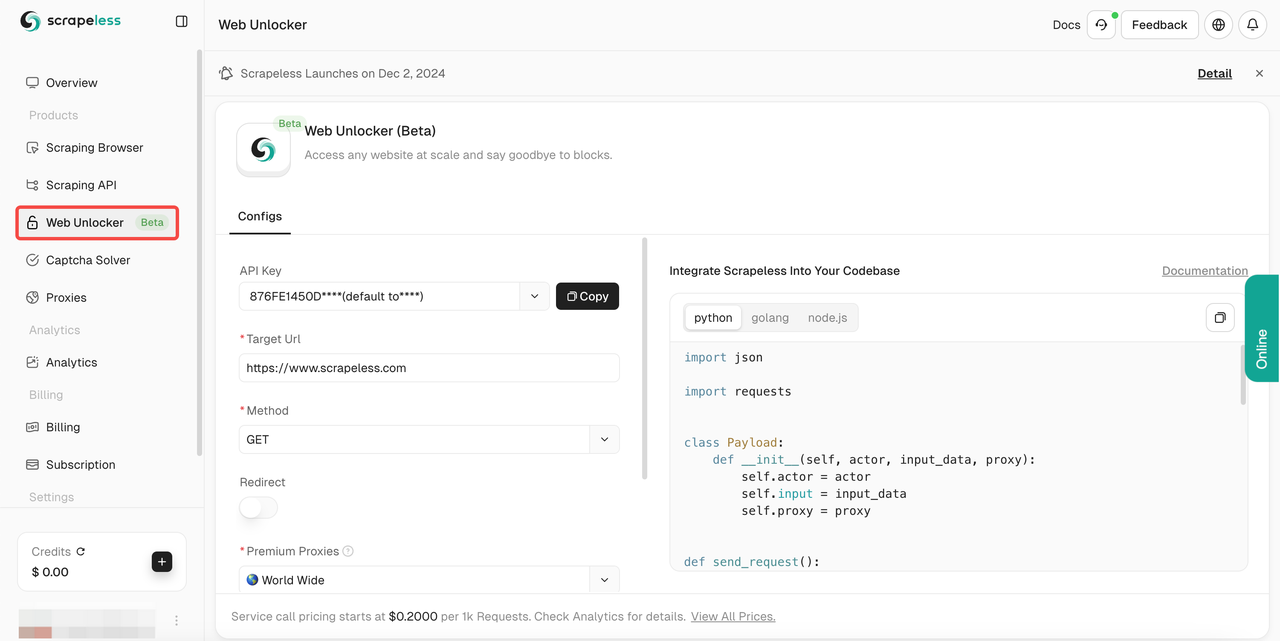

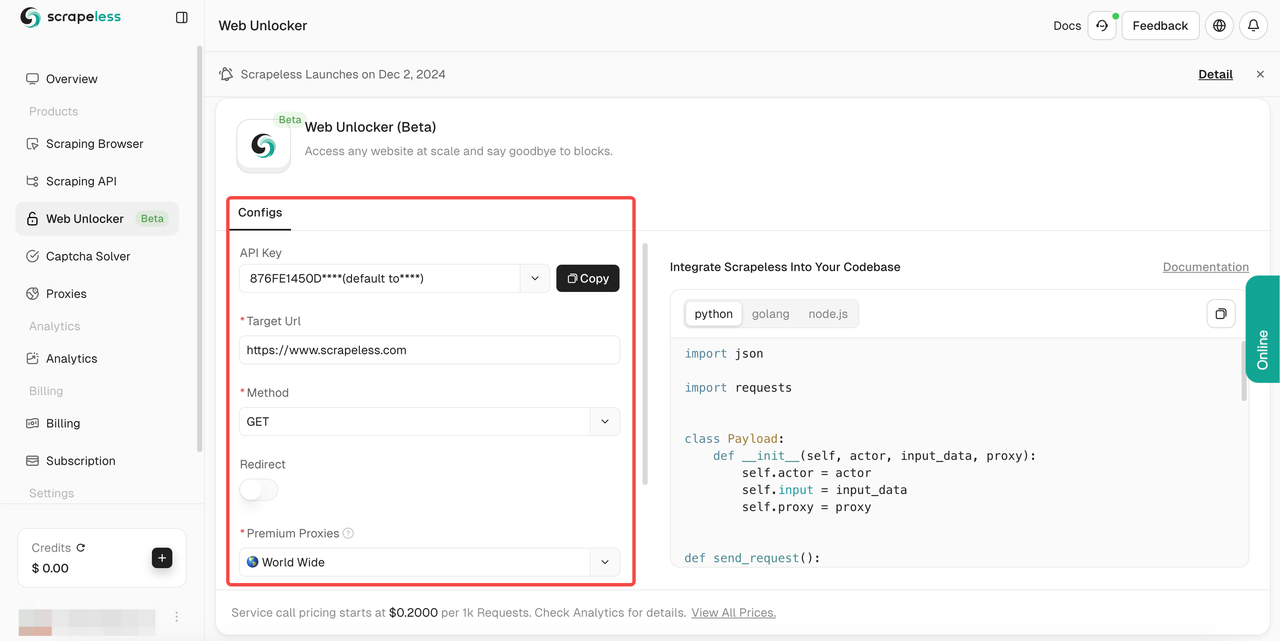

- Step 1. Log in to Scrapeless

- Step 2. Click the "Web Unlocker"

- Step 3. Configure the operation panel on the left according to your needs:

- Step 4. After filling in your

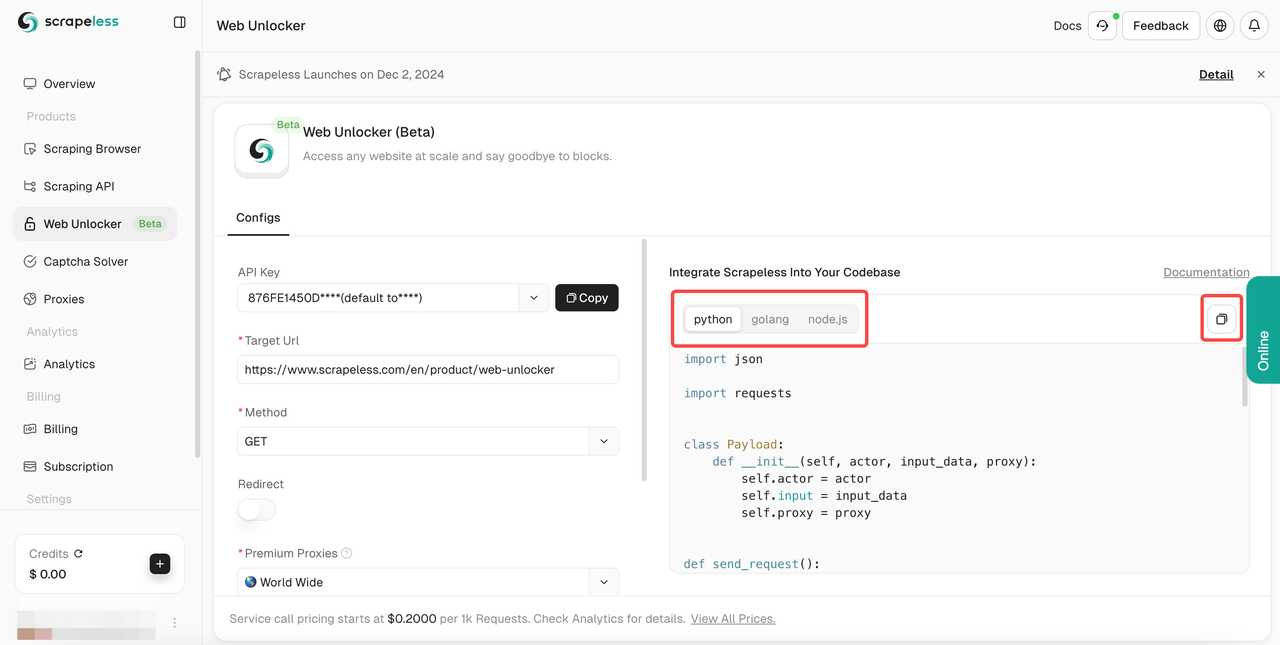

target url, Scrapeless will automatically crawl the content for you. You can see the crawling results in the result display box on the right. Please select the language you need:Python,Golang, ornode.js, and finally click the logo in the upper right corner to copy the result.

If you want to integrate the Scrapeless Web Unlocker into your project, use the following sample code as a reference:

Python

```python
import json

import requests

API_KEY = ""  # your Scrapeless API token
host = "api.scrapeless.com"
url = f"https://{host}/api/v1/unlocker/request"

# Request body: the actor to run, the target request, and proxy options.
payload = json.dumps({
    "actor": "unlocker.webunlocker",
    "input": {
        "url": "https://httpbin.io/get",
        "redirect": False,
        "method": "GET",
    },
    "proxy": {
        "country": "ANY",
    },
})

headers = {
    "Content-Type": "application/json",
    "x-api-token": API_KEY,
}

# Send the unlock request and print the raw response body.
response = requests.request("POST", url, headers=headers, data=payload)
print(response.text)
```

2. Use Premium Proxies

A proxy server acts as an intermediary between your system and the target website, helping to distribute traffic and avoid blocks such as Cloudflare Error 1015. By routing your requests through multiple proxies, you can spread the load across different IP addresses, making your scraping activities appear more like genuine user traffic.

Free proxies are often blocked because they are hosted in shared data centers, so opting for premium proxies is advisable. Premium proxies, especially residential ones, offer IP addresses associated with real residential locations, making them less likely to be flagged and blocked by websites.

Additionally, staying updated on proxy performance metrics and rotating proxies regularly can further optimize your scraping operations. This proactive approach ensures consistent access to target websites while maintaining compliance with their policies.
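A minimal sketch of round-robin proxy rotation using only the Python standard library is shown below. The proxy URLs are placeholders (assumptions, not real endpoints), and `next_proxy` and `fetch_via_proxy` are hypothetical helper names; substitute the addresses and credentials your provider gives you.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints; replace with the ones from your provider.
PROXIES = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
    "http://user:pass@proxy3.example.com:8000",
]

_proxy_cycle = itertools.cycle(PROXIES)


def next_proxy():
    """Return the next proxy in round-robin order."""
    return next(_proxy_cycle)


def fetch_via_proxy(url, timeout=15):
    """Fetch `url` through the next proxy in the pool and return the body bytes."""
    proxy = next_proxy()
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Each call to `fetch_via_proxy` goes out through a different address, so no single IP carries the whole request volume.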

Frustrated by being blocked by websites?

Scrapeless rotating proxies can help you avoid IP blocking!

3. Rotate Headers

Rotating headers is a valuable tactic for web scraping. By altering the headers accompanying each request, you can simulate genuine user behavior, thereby lowering the risk of detection and subsequent blocking by Cloudflare or other security measures.

This method involves regularly changing user-agent strings, Accept-Language values, and other header fields. This variability helps disguise your scraping activities as typical browsing behavior, making it harder for websites to distinguish between automated and human traffic.

Moreover, rotating headers can enhance the longevity of your scraping efforts. Websites often track and block repetitive or predictable requests. By continuously refreshing headers, you avoid patterns that trigger alarms, ensuring uninterrupted access to desired data.
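Header rotation can be sketched as picking one complete header profile at random for each request. The profiles and the `pick_headers` and `build_request` helpers below are illustrative assumptions; in practice, keep each profile internally consistent so the headers look like they come from one real browser.

```python
import random
import urllib.request

# Illustrative header profiles (assumed values); each set mimics one browser.
HEADER_PROFILES = [
    {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
                      "AppleWebKit/537.36 (KHTML, like Gecko) "
                      "Chrome/124.0.0.0 Safari/537.36",
        "Accept-Language": "en-US,en;q=0.9",
    },
    {
        "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
                      "AppleWebKit/605.1.15 (KHTML, like Gecko) "
                      "Version/17.4 Safari/605.1.15",
        "Accept-Language": "en-GB,en;q=0.8",
    },
    {
        "User-Agent": "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) "
                      "Gecko/20100101 Firefox/125.0",
        "Accept-Language": "en-US,en;q=0.5",
    },
]


def pick_headers(rng=random):
    """Return a copy of one randomly chosen header profile."""
    return dict(rng.choice(HEADER_PROFILES))


def build_request(url, rng=random):
    """Build a urllib Request for `url` carrying a randomly chosen profile."""
    return urllib.request.Request(url, headers=pick_headers(rng))
```

Passing the result of `build_request(url)` to `urllib.request.urlopen` sends the request with whichever profile was drawn.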

4. Reduce Number of Requests

Cloudflare Error 1015 is triggered when the system detects an unusually high number of requests from a single IP address, leading to a temporary ban. To lower the chances of encountering this error, it’s crucial to limit the number of requests you make within a specific time frame.

Introducing delays between requests is an effective strategy to manage request frequency. Implementing exponential backoff, where the delay increases with each subsequent failed request, can make your scraping behavior appear more human-like.
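The exponential backoff described above might look like the following sketch. It assumes the rate-limit block surfaces as HTTP status 429, and that `fetch` is any zero-argument callable you supply that returns a `(status_code, body)` tuple; both `backoff_delay` and `fetch_with_backoff` are hypothetical helper names.

```python
import random
import time


def backoff_delay(attempt, base=1.0, cap=60.0):
    """Delay before retry number `attempt` (0-based): base * 2**attempt, capped."""
    return min(cap, base * (2 ** attempt))


def fetch_with_backoff(fetch, max_retries=5, sleep=time.sleep, jitter=random.uniform):
    """Call `fetch()` until it returns a status other than 429 or retries run out."""
    for attempt in range(max_retries + 1):
        status, body = fetch()
        if status != 429:  # assumption: rate limiting is reported as HTTP 429
            return status, body
        if attempt < max_retries:
            delay = backoff_delay(attempt)
            # Add up to 10% random jitter so retries do not line up exactly.
            sleep(delay + jitter(0, delay * 0.1))
    return status, body
```

With the defaults, the waits grow as roughly 1, 2, 4, 8, and 16 seconds, and the cap keeps any single wait at or below 60 seconds.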

However, merely reducing request volume might not be enough, as Cloudflare uses various security mechanisms beyond rate limiting. This is where alternative approaches, such as utilizing proxies, become essential.

Ending Thoughts

Solving Cloudflare Error 1015 can be like navigating a digital minefield, but with the right tools and strategies, you can effectively overcome these challenges.

From understanding the intricacies of rate limiting to deploying advanced proxies and optimizing request patterns, there are a number of ways to mitigate the effects of this error.

The Scrapeless Web Unlocker is the most effective way to avoid Cloudflare Error 1015, and the CAPTCHA solver and rotating proxies help as well.

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.