How to Build an Intelligent Business News Monitor on Dify?

Advanced Data Extraction Specialist

In today’s highly competitive landscape, staying informed of brand reputation, industry developments, and competitor intelligence in real time is crucial for effective decision-making. However, manually monitoring news and information is time-consuming, labor-intensive, and prone to missing critical insights.

This solution integrates Dify, a leading no-code AI automation platform, with the Scrapeless Deep SerpApi, an enterprise-grade Google Search data interface, to build a smart and scalable business news monitoring system that enables enterprises to:

- Collect and filter real-time news automatically

- Leverage AI for intelligent analysis and actionable insights

- Push alerts and reports across multiple channels automatically

1. Solution Overview

| Component | Description |
|---|---|
| Dify Intelligent Workflow Platform | No-code workflow design and execution with drag-and-drop support for AI and API integration |
| Scrapeless Deep SerpApi | High-speed, stable, anti-blocking Google Search API supporting multi-region and multilingual queries |
| AI Models (e.g., GPT-4 / Claude) | Performs automatic semantic analysis and generates intelligent news summaries and business insights |
| Notification Plugins (e.g., Discord Webhook) | Real-time push of monitoring reports to ensure rapid information delivery |

2. Enterprise-Grade Tooling Overview

Dify Intelligent Workflow Platform

A no-code AI automation platform designed for flexible, enterprise-grade workflows

- Visual interface for drag-and-drop workflow building—no coding required

- Seamless integration with mainstream AI models (GPT-4, Claude 3, Gemini, etc.)

- Plugin ecosystem for connecting with APIs and external data sources

- Real-time monitoring with detailed logs and error tracing

- Role-based access control and team collaboration support

- Suitable for private deployment in secure enterprise environments

Scrapeless Deep SerpApi

A real-time, high-fidelity Google SERP API engineered for AI workflows and business intelligence

Scrapeless Deep SerpApi is purpose-built for enterprise-grade use cases like brand monitoring, market intelligence, content generation, and AI-powered decision-making. It extracts real-time, structured data directly from Google search results (HTML parsing), ensuring accuracy, freshness, and reliability.

Key Advantages

- Instant access to real-time Google SERP data (under 3s response)

- Comprehensive result coverage: organic results, Google Local, Google Images, Google News, and more

- Zero caching: direct HTML parsing ensures up-to-date, verifiable results

- Anti-blocking technology: 99.9% success rate, with no manual proxy configuration needed

- Supports 195+ countries and multiple languages for global monitoring

- Structured output in common data formats, making it easy for AI models and automated workflows to parse and analyze

- Transparent, usage-based billing with no hidden limits or field restrictions

📌 Ideal for:

- Building enterprise-grade media monitoring and alert systems

- Tracking competitor activity and market trends globally

- Creating search-tuned datasets for retrieval-augmented generation (RAG)

- Powering SEO and content automation at scale

3. Environment Setup & Account Registration

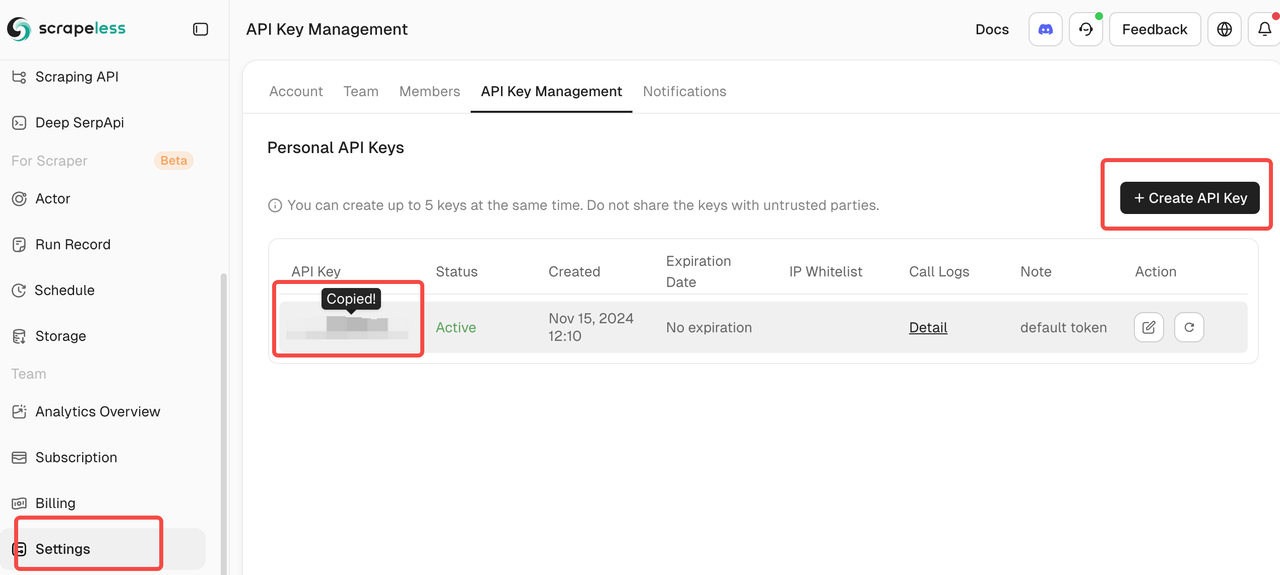

3.1 Register a Scrapeless Account and Obtain API Token

- Visit the Scrapeless Dashboard

- Register a business account

- After logging in, navigate to the API Management page to obtain your API token

⚠️ Important: Keep your API token secure and never share it publicly.

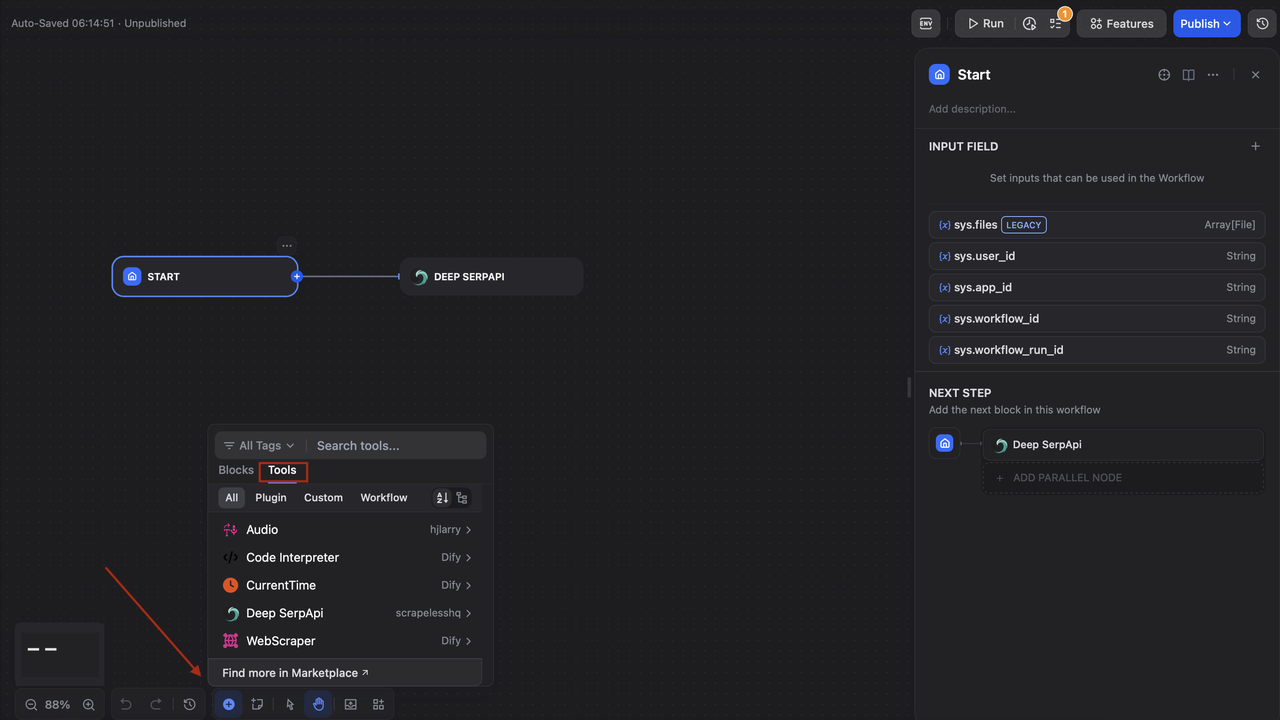

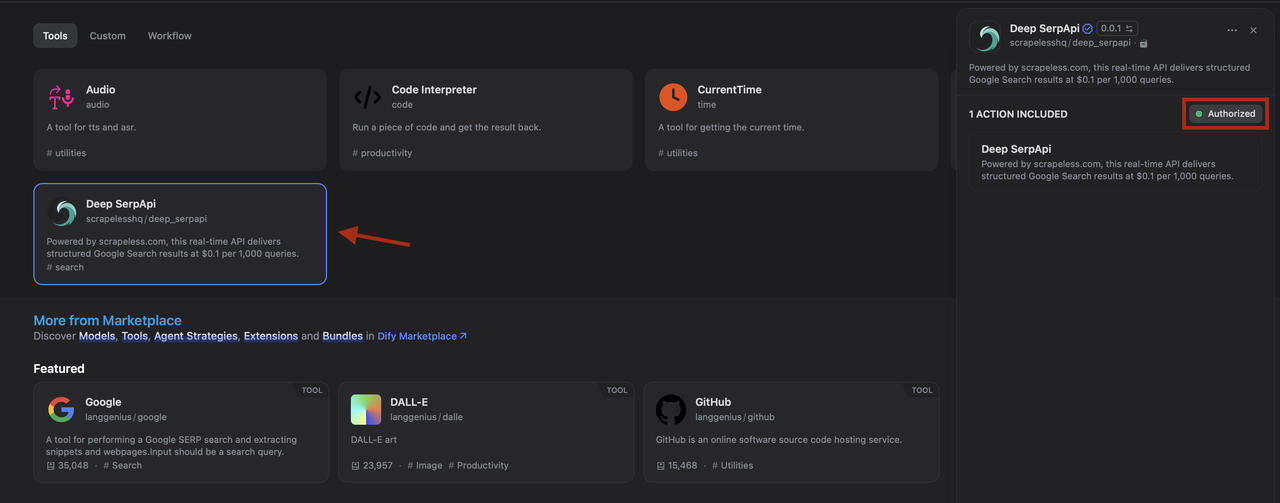

3.2 Register a Dify Account and Install the Deep SerpApi Plugin

- Sign up for Dify if you haven't already, then install the Deep SerpApi plugin from the Dify Marketplace: https://marketplace.dify.ai/plugins/scrapelesshq/deep_serpapi
- Create a new application and select "Workflow"
- In the workflow studio, click the "+" button to add a new tool
- Navigate to the "Tools" tab in the panel
- Look for "Deep SerpApi" by scrapelesshq in the tools list
- Click "Deep SerpApi" to add it to your workflow

4. Detailed Configuration Process

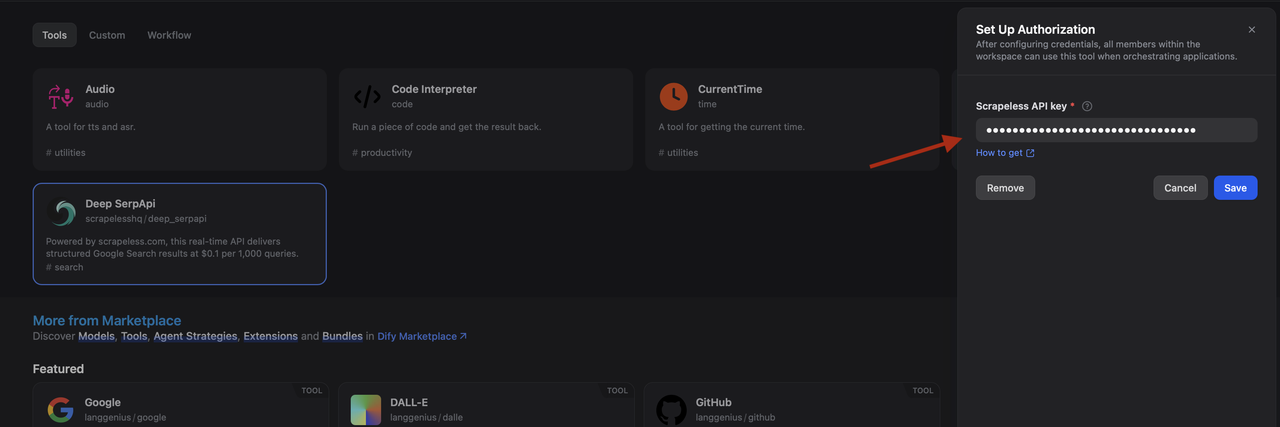

Step 1: Add the Deep SerpApi Node

- Click the "+" button in the workflow editor

- Select the Tools tab

- Choose Deep SerpApi (Scrapeless) and add it to your workflow

- In the configuration panel, paste the API Token copied earlier

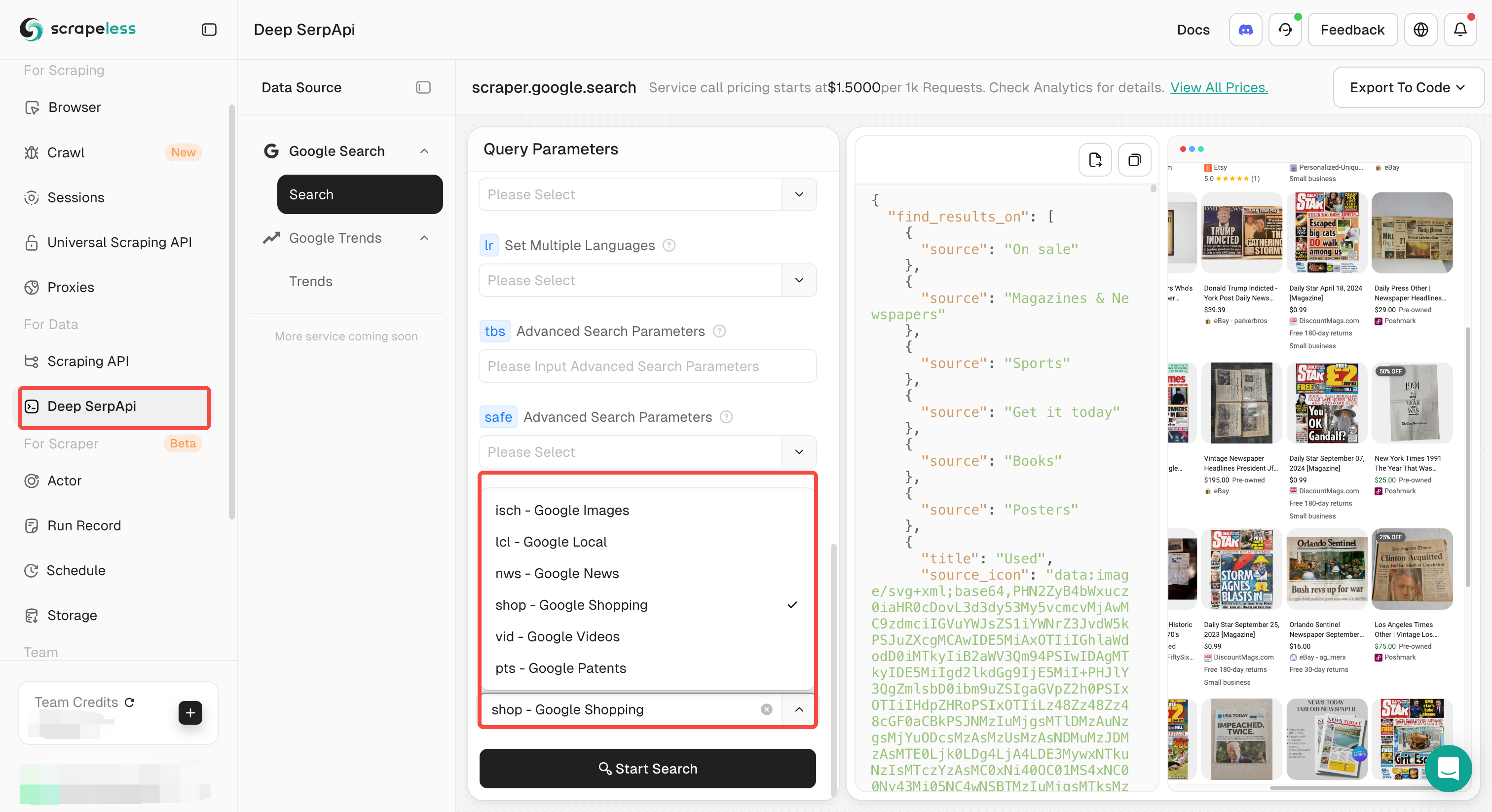

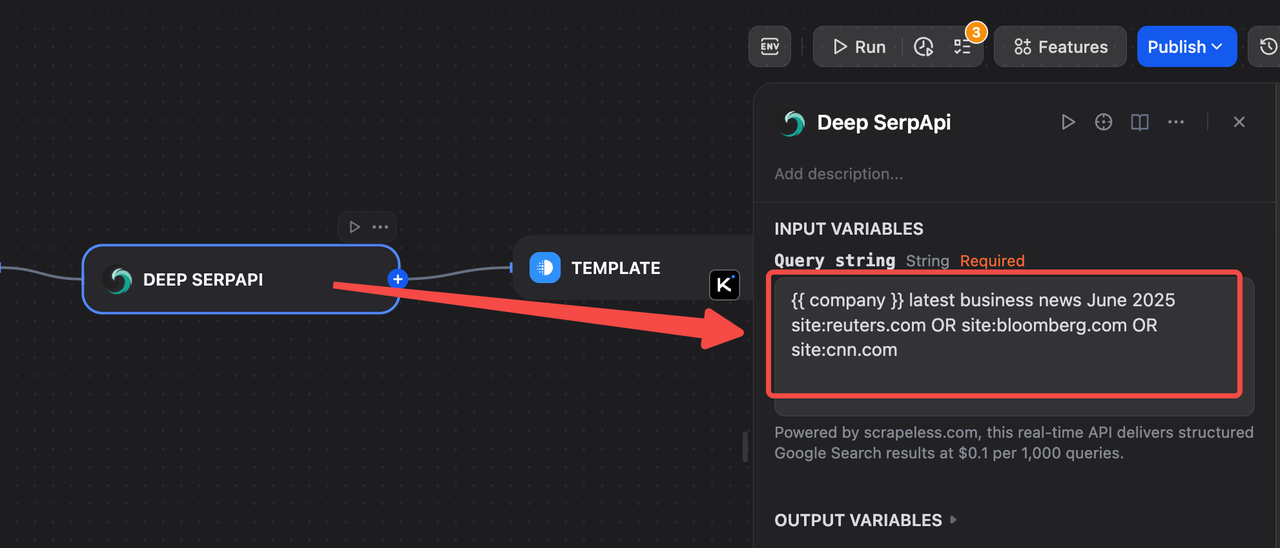

Step 2: Configure Search Parameters

- In the Query String field of the Deep SerpApi node, enter your search query, for example:

"Your Company Name" news

- The field also supports advanced search syntax, such as:

"Your Company Name" OR "Industry Keyword"

"Company Name" AND (announcement OR partnership)

- In this example, we use the following query, where {{ company }} is the company name passed in as a workflow input variable:

{{ company }} latest business news June 2025 site:reuters.com OR site:bloomberg.com OR site:cnn.com
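The example query is ordinary Google search syntax, so it can also be generated programmatically, for instance if you want to pre-build queries for a list of companies. The sketch below is a hypothetical helper (`build_news_query` is not part of Dify or Scrapeless); it quotes the company name as in the `"Your Company Name" news` example and joins the `site:` filters with `OR`.

```python
from __future__ import annotations


def build_news_query(company: str, topic: str = "", sites: tuple[str, ...] = ()) -> str:
    """Assemble a Google query in the style of the Step 2 example.

    The company name is wrapped in double quotes so Google treats it as an
    exact phrase, and the site: filters are joined with OR so results may
    come from any of the listed domains.
    """
    # Drop any quotes inside the name so they cannot break the phrase match.
    parts = ['"' + company.replace('"', "") + '"']
    if topic:
        parts.append(topic)
    if sites:
        parts.append(" OR ".join(f"site:{site}" for site in sites))
    return " ".join(parts)


print(build_news_query(
    "Acme Corp",
    "latest business news June 2025",
    ("reuters.com", "bloomberg.com", "cnn.com"),
))
# "Acme Corp" latest business news June 2025 site:reuters.com OR site:bloomberg.com OR site:cnn.com
```

Quoting the name trades some recall for precision; drop the quotes if a company is often mentioned under variant spellings.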

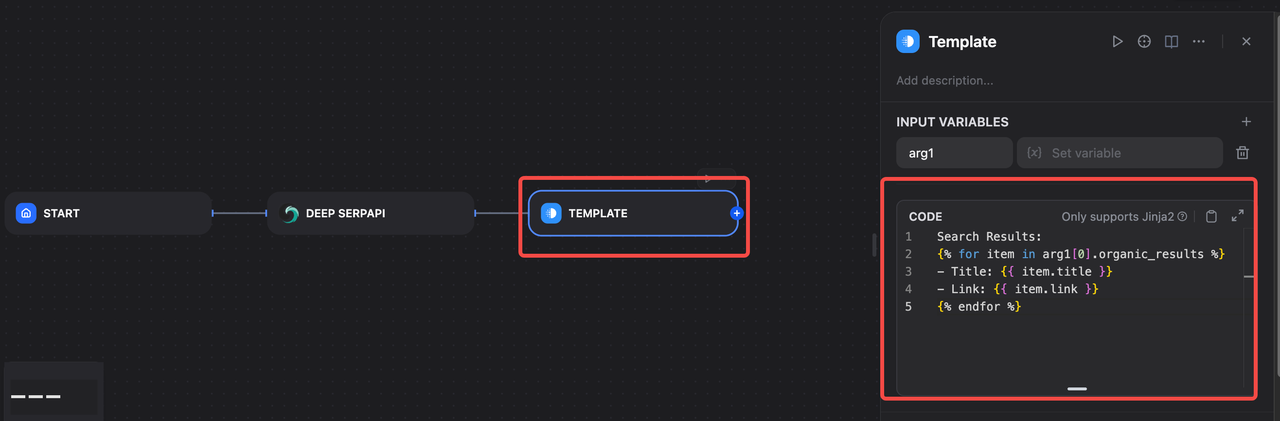

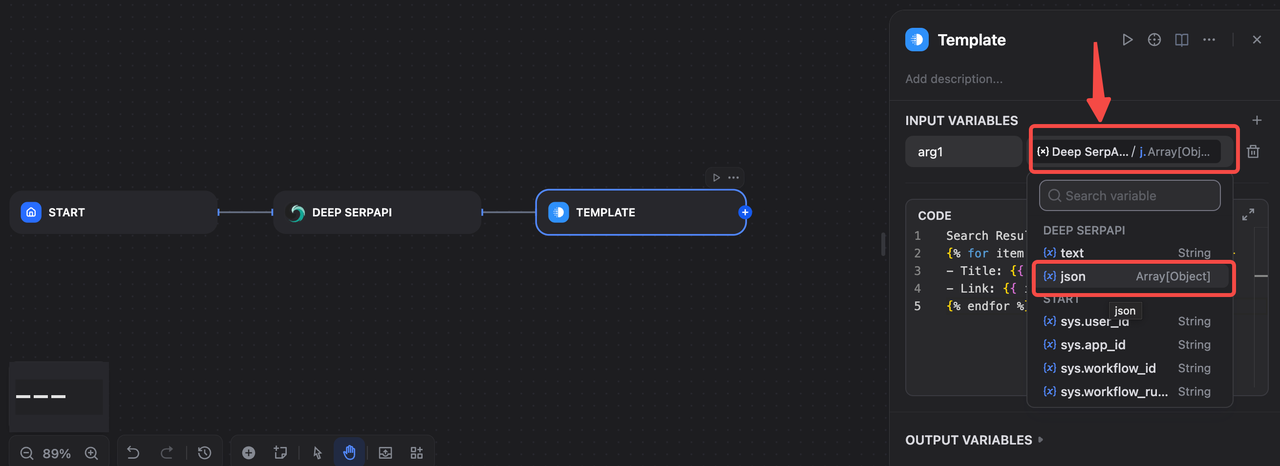

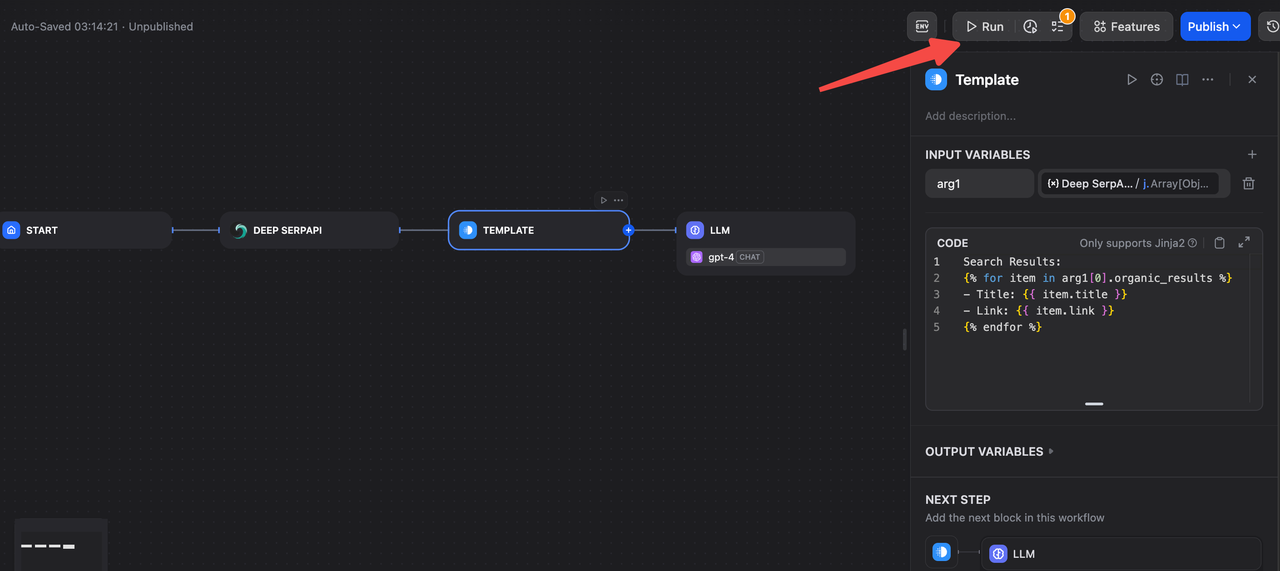

Step 3: Add Template Node to Format Search Results

- Click the “+” button after the Deep SerpApi node.

- Select “Template” from the available blocks.

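- In the Template field, enter the following formatting template. Here, arg1 is the Template node's input variable; set it to the Deep SerpApi node's output, which the template expects to be a list whose first element contains organic_results:

Search Results:
{% for item in arg1[0].organic_results %}
- Title: {{ item.title }}
- Link: {{ item.link }}
{% endfor %}

- This template lists the title and link of each organic search result in a consistent format, which makes the results easier for the LLM to analyze.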
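To make the template's logic explicit, here is a plain-Python equivalent. It assumes the data shape implied by `arg1[0].organic_results` (a list whose first element holds an `organic_results` list of items with `title` and `link`); the function name and sample data are hypothetical, and Dify itself renders the Jinja template, not this code.

```python
from __future__ import annotations

from typing import Any


def format_search_results(arg1: list[dict[str, Any]]) -> str:
    """Plain-Python equivalent of the Template node's Jinja template.

    Assumes arg1 is a list whose first element is a dict with an
    "organic_results" list of dicts carrying "title" and "link" keys.
    """
    lines = ["Search Results:"]
    # Fall back to an empty list if the response is empty or has no organic results.
    results = arg1[0].get("organic_results", []) if arg1 else []
    for item in results:
        lines.append(f"- Title: {item.get('title', '')}")
        lines.append(f"- Link: {item.get('link', '')}")
    return "\n".join(lines)


# Hypothetical sample data shaped like the structure the template expects.
sample = [{"organic_results": [
    {"title": "Acme Corp announces new partnership", "link": "https://example.com/acme-partnership"},
]}]
print(format_search_results(sample))
```

This version explicitly handles an empty response or a missing `organic_results` key by returning only the header line, which the LLM prompt can then flag as lacking company-specific content.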

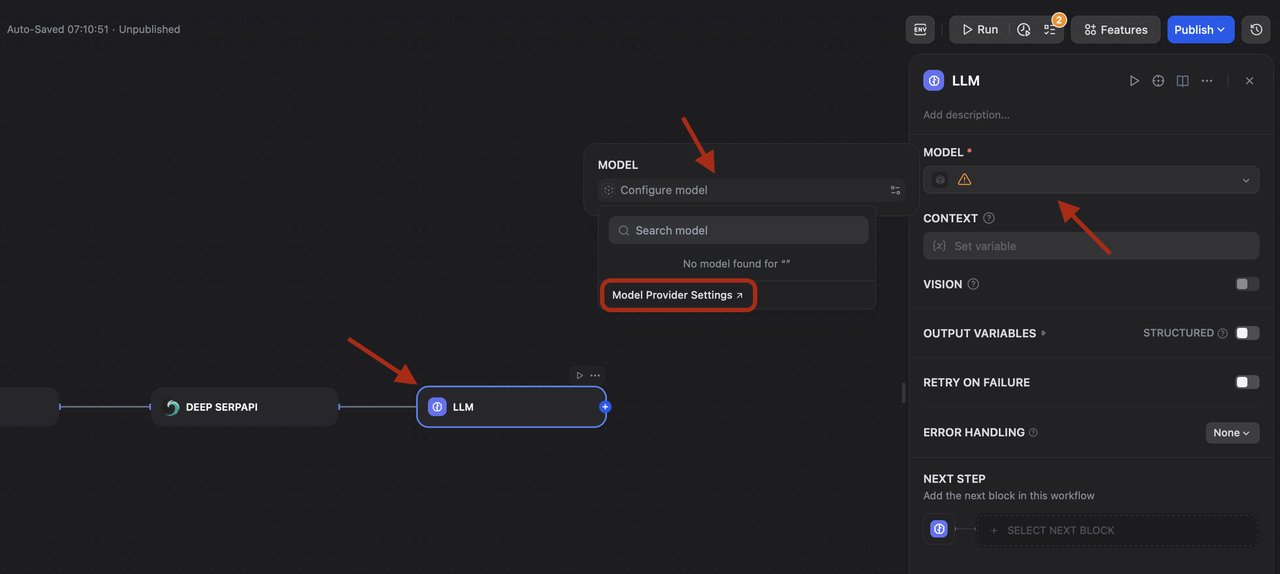

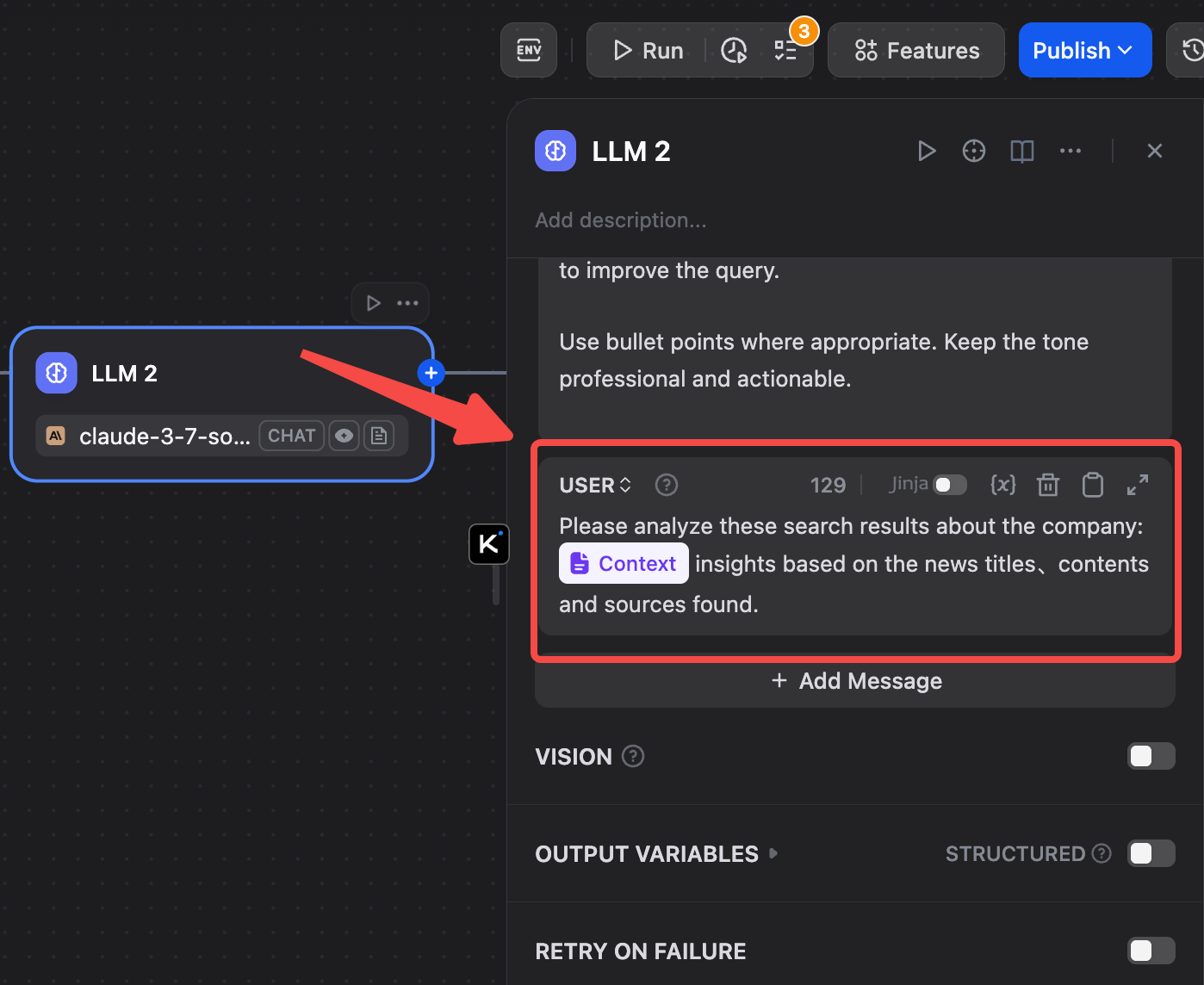

Step 4: Configure the AI Analysis Node

- Click the “+” button after the Template node.

- Select “LLM” from the available blocks.

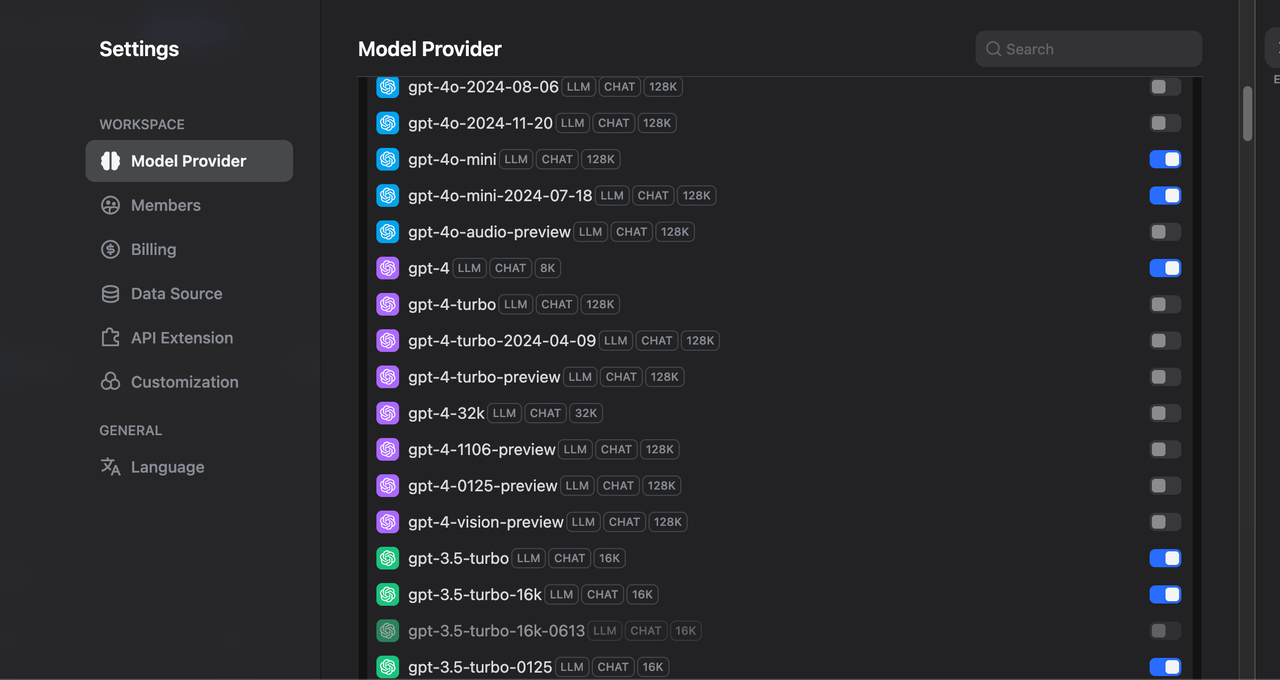

- Choose your preferred AI model (GPT-4 is recommended).

You will need to click “Model Provider Settings” to install or activate your model.

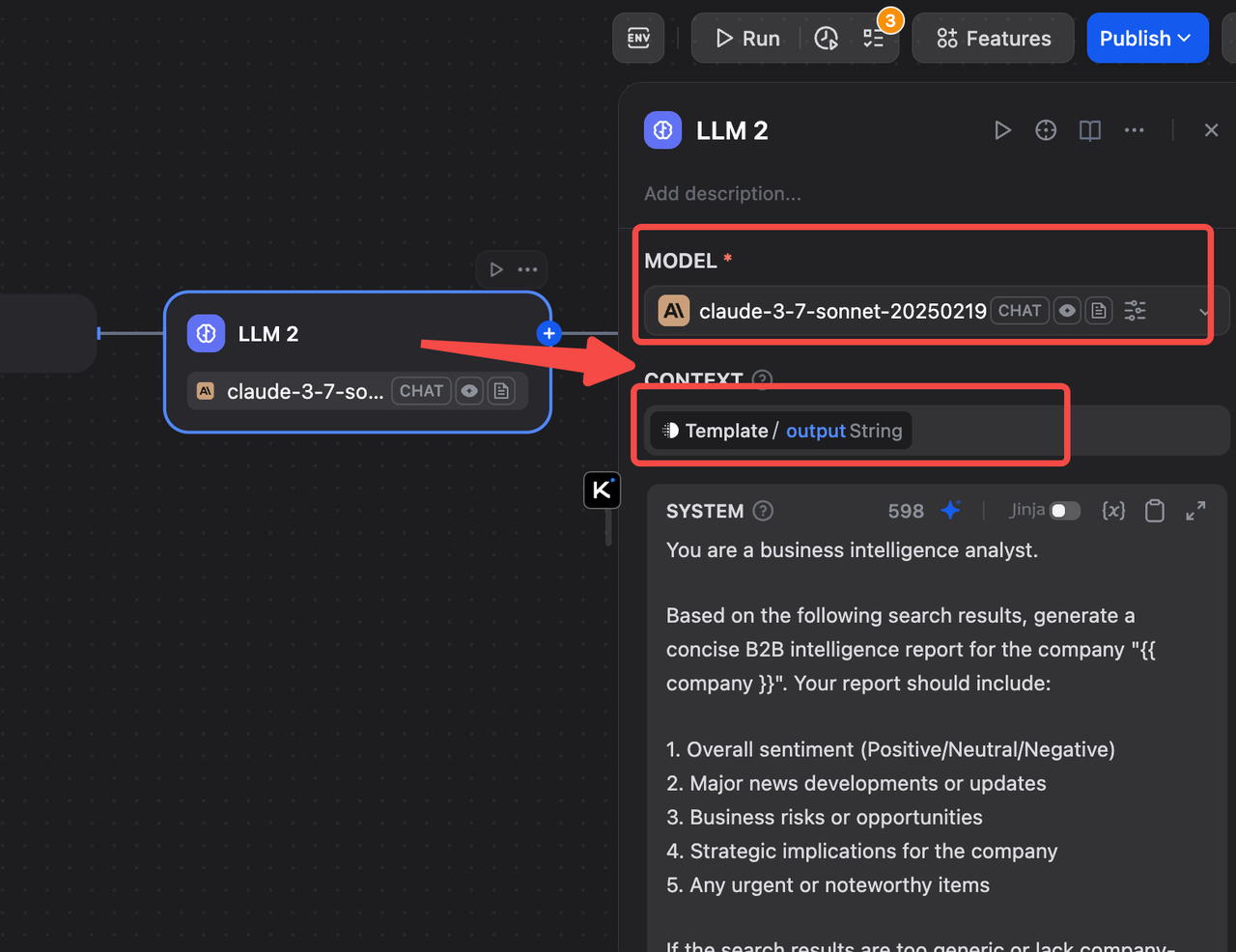

- This opens a page listing the available LLMs; choose whichever you prefer. In this example, we use Claude.

- In the System prompt, reference the search results:

You are a business intelligence analyst.

Based on the following search results, generate a concise B2B intelligence report for the company "{{ company }}". Your report should include:

1. Overall sentiment (Positive/Neutral/Negative)

2. Major news developments or updates

3. Business risks or opportunities

4. Strategic implications for the company

5. Any urgent or noteworthy items

If the search results are too generic or lack company-specific content, please point that out and suggest how to improve the query.

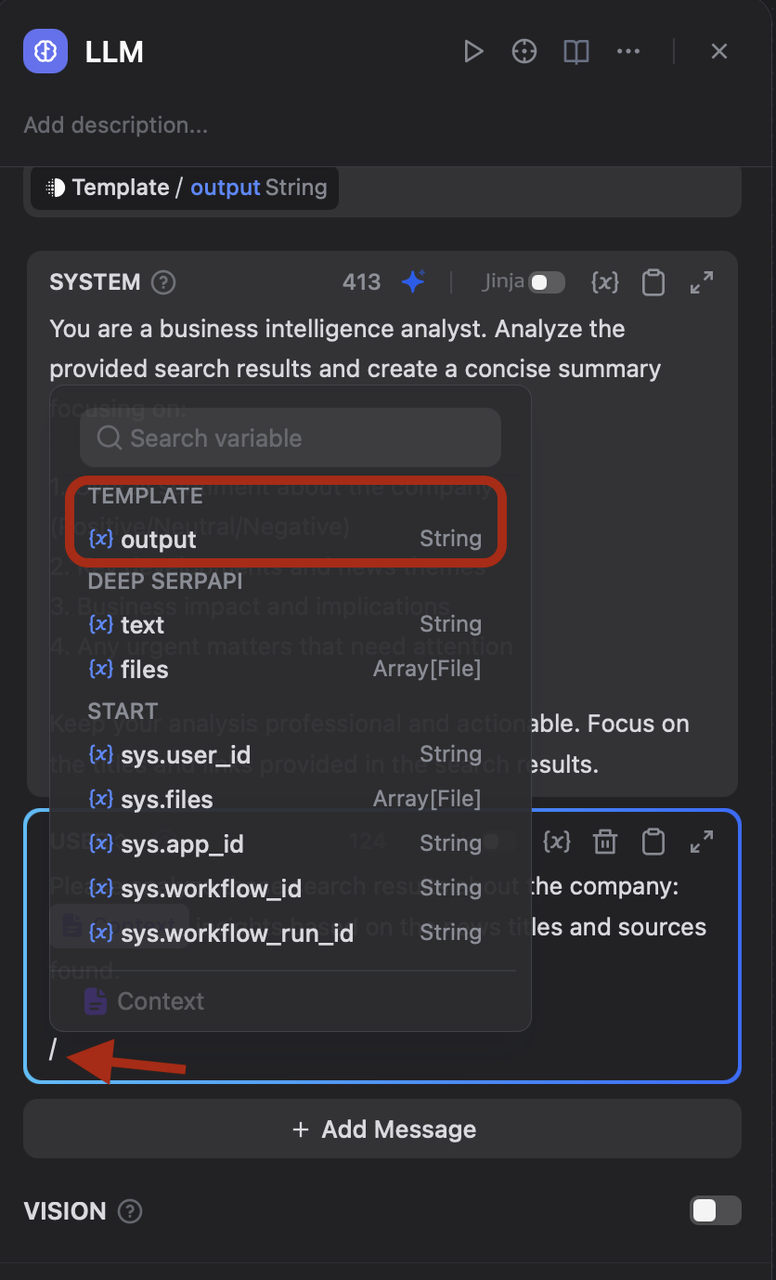

Use bullet points where appropriate. Keep the tone professional and actionable.- In the User prompt, reference the formatted template results:

Please analyze these search results about the company: insights based on the news titles、contents and sources found.- Then, in the Prompt text box, use / to call out the variable selector, and you can call out a list of variables, including output, text, sys., etc., for you to insert into the template or set variables. You can see the picture below.
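Conceptually, the LLM node substitutes {{ company }} into the system prompt and places the Template output wherever you inserted it in the user prompt. The sketch below reproduces that data flow in Python, assuming the Template output sits at the end of the user prompt and using the role/content message list common to chat-model APIs. It illustrates the substitution only; it is not Dify's internal implementation, and `build_messages` is a hypothetical name.

```python
from __future__ import annotations

SYSTEM_TEMPLATE = """You are a business intelligence analyst.

Based on the following search results, generate a concise B2B intelligence report for the company "{company}". Your report should include:

1. Overall sentiment (Positive/Neutral/Negative)
2. Major news developments or updates
3. Business risks or opportunities
4. Strategic implications for the company
5. Any urgent or noteworthy items

If the search results are too generic or lack company-specific content, please point that out and suggest how to improve the query.

Use bullet points where appropriate. Keep the tone professional and actionable."""

USER_PROMPT = (
    "Please analyze these search results about the company and provide insights "
    "based on the news titles, contents, and sources found."
)


def build_messages(company: str, formatted_results: str) -> list[dict[str, str]]:
    """Fill the prompt variables, mirroring what the LLM node does before calling the model.

    The system prompt receives the company name; the user prompt is followed
    by the Template node's formatted output.
    """
    return [
        {"role": "system", "content": SYSTEM_TEMPLATE.format(company=company)},
        {"role": "user", "content": f"{USER_PROMPT}\n\n{formatted_results}"},
    ]
```

Keeping the instructions in the system prompt and the search data in the user prompt lets you tweak either one independently while debugging in Step 5.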

Step 5: Run and Debug the Workflow

- Click the Run button in the top-right corner of the interface

- Wait for the workflow to execute and check the output results

- Based on the analysis results, adjust the search keywords and AI prompts to optimize performance

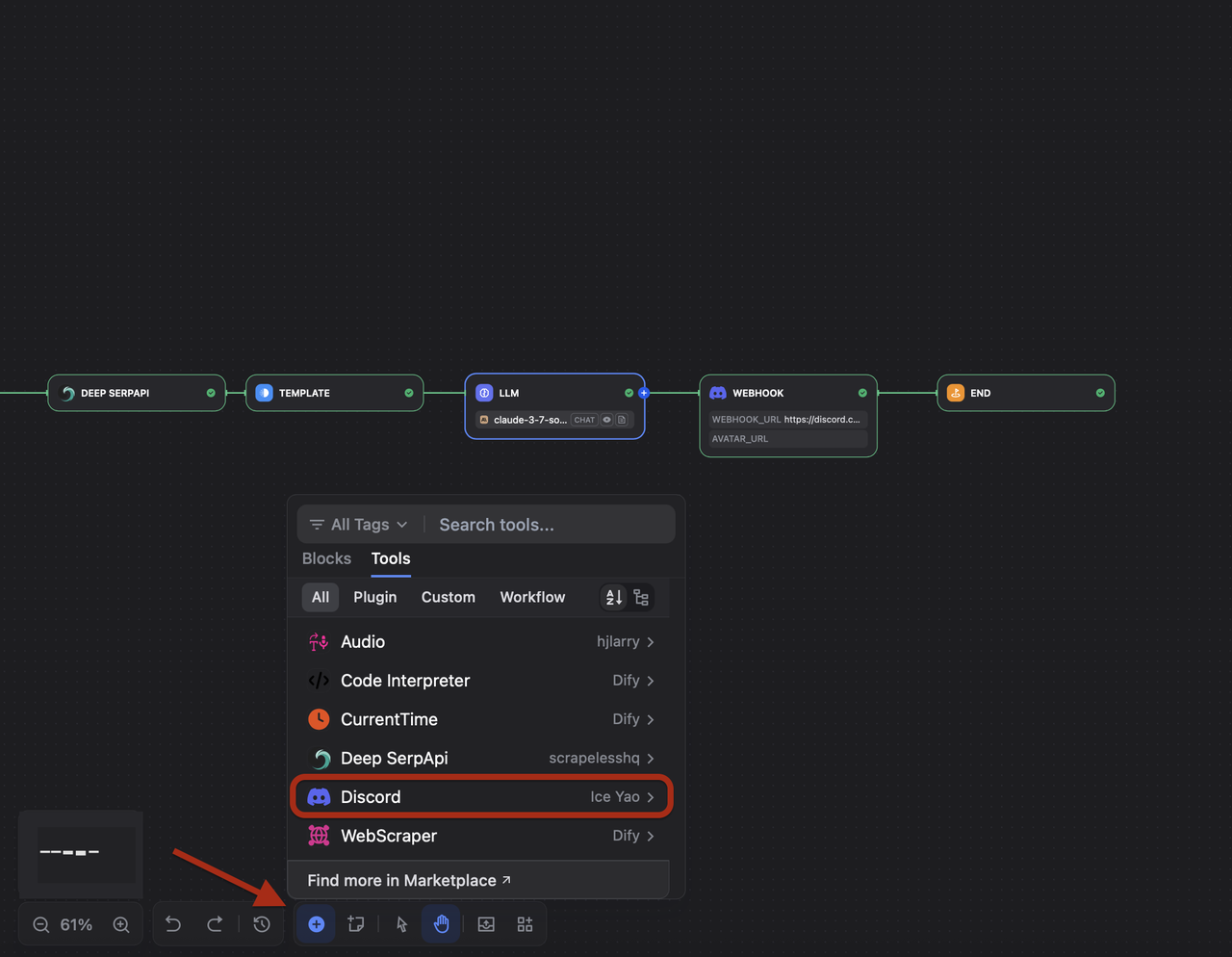

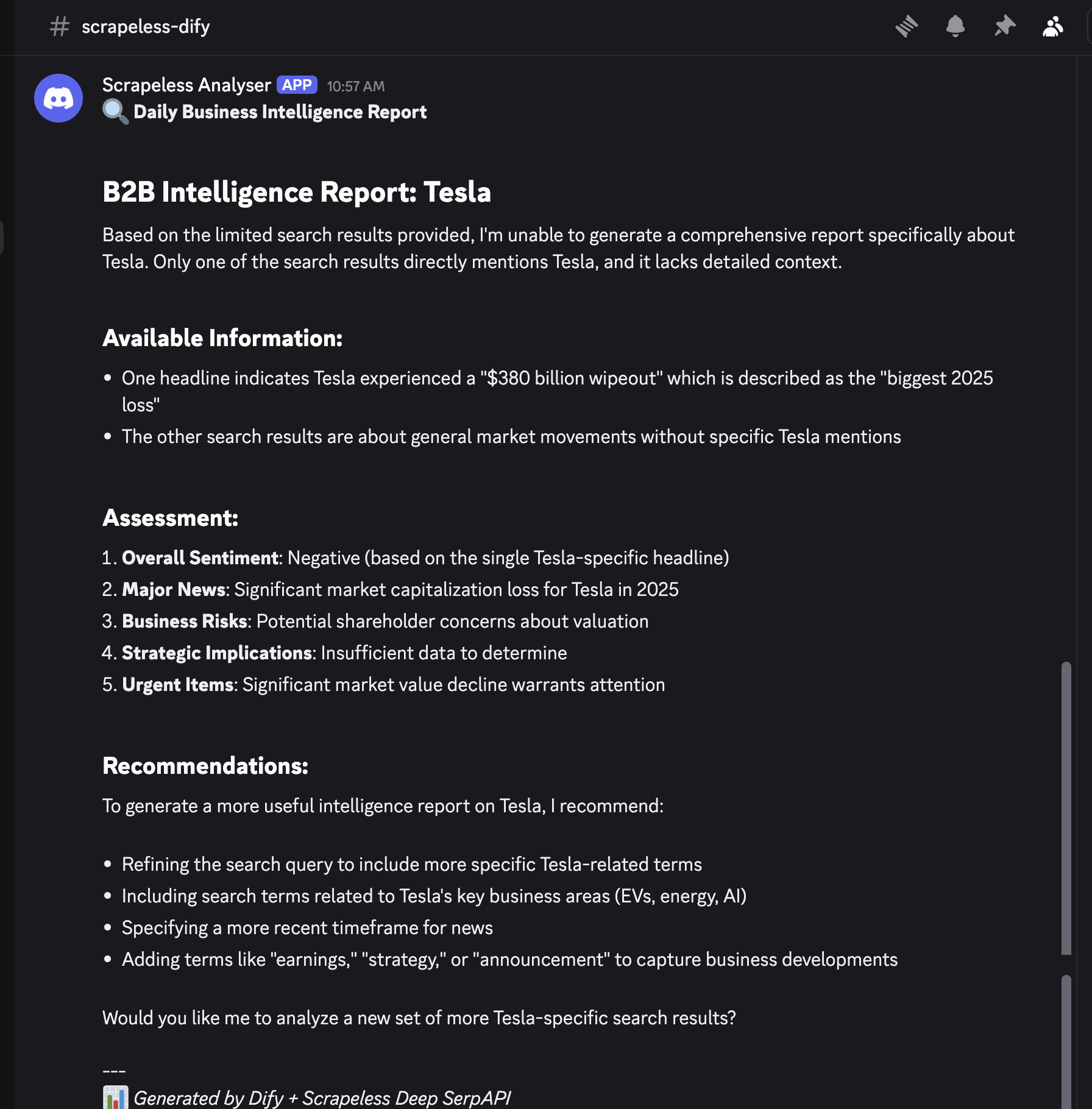

Step 6: Integrate Enterprise Notification Channels (e.g., Discord Webhook) (Optional)

To receive notifications directly in your Discord server when the workflow completes, you can add a webhook integration:

- Add a New Block:

- Click the “+” button after your LLM analysis step

- Select “Tools” from the block menu

- Find Discord Webhook in the Marketplace:

- In the Tools section, click on “Marketplace”

- Search for “Discord” or “webhook”

- Install the Discord webhook tool if it’s not already available Discord Plugin on Marketplace

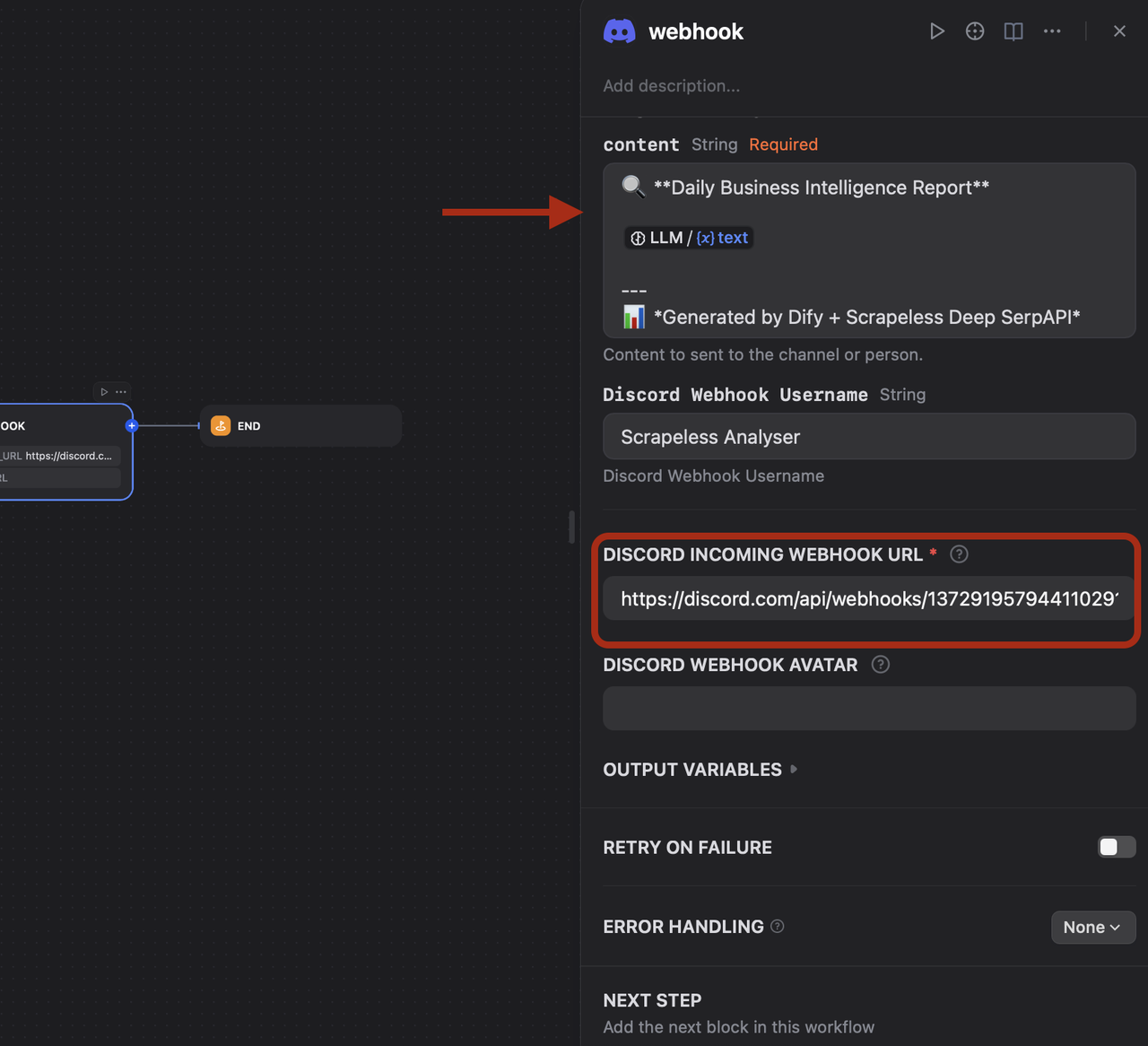

- Configure Your Webhook:

- Select the Discord Webhook tool

- Enter your Discord Webhook URL (you can obtain this from your Discord server settings)

- Customize the message format to include the analysis results

- Use variables from previous steps to include dynamic content

- Message Customization:

- Include the search query in the notification

- Add a summary of key findings

- Format the message for easy reading in Discord

🔍 **Daily Business Intelligence Report**

/ context

---

📊 *Generated by Dify + Scrapeless Deep SerpAPI*

Note: You can use any webhook service of your choice (Slack, Microsoft Teams, etc.) by following the same process and searching for the appropriate tool in the marketplace.

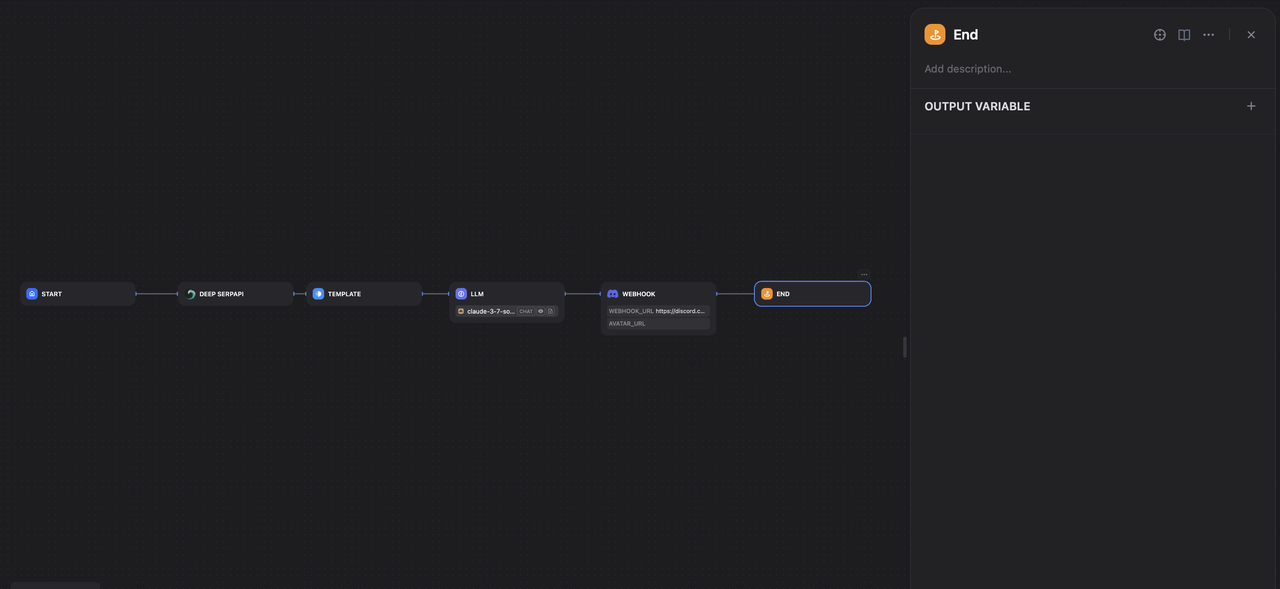
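The Discord plugin makes the HTTP call for you. If you want to send the same report from your own script instead, or just see what the webhook receives, the sketch below posts a JSON body with a `content` field to the webhook URL, which is how Discord webhooks accept plain text messages. The header and footer mirror the message format above; the webhook URL and report text are placeholders, and `build_discord_request` and `send_report` are hypothetical helpers.

```python
import json
import urllib.request

# Discord rejects message content longer than 2,000 characters; keep a small
# safety margin because emoji may be counted differently than in Python.
MAX_CONTENT = 1990

HEADER = "🔍 **Daily Business Intelligence Report**\n\n"
FOOTER = "\n\n---\n\n📊 *Generated by Dify + Scrapeless Deep SerpAPI*"


def build_discord_request(webhook_url: str, report: str) -> urllib.request.Request:
    """Build a POST request that sends the LLM report to a Discord webhook."""
    room = MAX_CONTENT - len(HEADER) - len(FOOTER)
    if len(report) > room:
        report = report[: room - 1] + "…"  # truncate long reports instead of failing
    payload = {"content": HEADER + report + FOOTER}
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Some setups reject Python's default user agent, so set an explicit one.
            "User-Agent": "news-monitor-sketch/0.1",
        },
        method="POST",
    )


def send_report(webhook_url: str, report: str) -> int:
    """Send the report and return the HTTP status code (urlopen raises on 4xx/5xx)."""
    with urllib.request.urlopen(build_discord_request(webhook_url, report), timeout=10) as resp:
        return resp.status
```

Treat the webhook URL like a password: anyone who has it can post to your channel.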

Step 7: Add an End Node to Complete the Workflow Configuration

To properly complete your workflow, add an End block:

1. Add a Final Block:
   - Click the "+" button after your webhook step (or after the LLM step if you skipped the webhook)
   - Select "End" from the block menu

2. Configure the End Block:
   - The End block marks the completion of your workflow
   - You can optionally configure output variables that will be returned when the workflow completes
   - This is useful if you want to use this workflow as part of a larger automation
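Once the End node exposes output variables and the app is published, another system can trigger the workflow over Dify's HTTP API. The sketch below assumes the workflow-run endpoint and request fields described in Dify's API reference (`POST /workflows/run` with `inputs`, `response_mode`, and `user`), a Start-node input variable named `company`, and the app's API key; verify these against the API reference shown for your app and Dify version. The helper names are hypothetical.

```python
import json
import urllib.request

DIFY_API_BASE = "https://api.dify.ai/v1"  # replace with your self-hosted base URL if applicable


def build_workflow_run_request(api_key: str, company: str, user: str = "news-monitor") -> urllib.request.Request:
    """Build a request that runs the published workflow for one company."""
    payload = {
        "inputs": {"company": company},  # assumes the Start node defines a "company" variable
        "response_mode": "blocking",     # wait for the run to finish and return the result
        "user": user,                    # an identifier for the caller
    }
    return urllib.request.Request(
        f"{DIFY_API_BASE}/workflows/run",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def run_workflow(api_key: str, company: str) -> dict:
    """Run the workflow and return the End node's output variables (assumed under data.outputs)."""
    with urllib.request.urlopen(build_workflow_run_request(api_key, company), timeout=120) as resp:
        result = json.load(resp)
    return result.get("data", {}).get("outputs") or {}
```

Blocking mode is the simplest choice for a scheduled job that handles one company per call; a long LLM step may call for a generous timeout.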

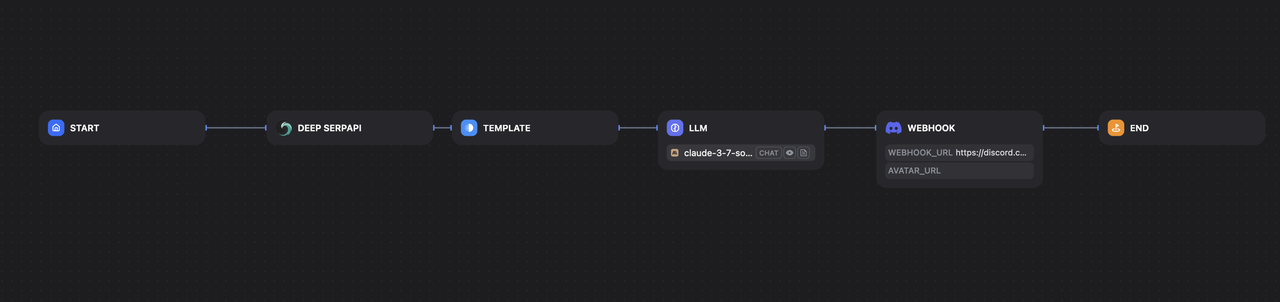

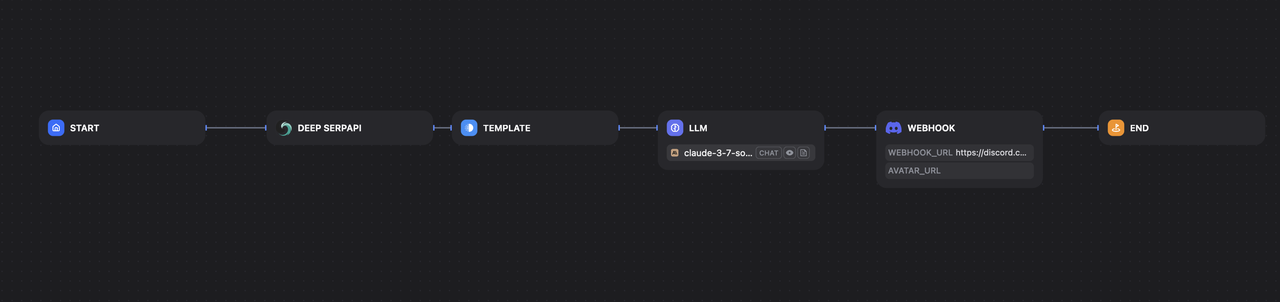

Your complete workflow should now look like:

Step 8: Output the Results

🚀 Ready to Power Your Intelligence Workflows?

Sign up for Scrapeless Google SERP API today and instantly receive 2,500 free API calls — no credit card required.

Experience real-time, structured search data built for scale, precision, and AI-native workflows.

👉 Get Started for Free and supercharge your next project!

Workflow Demo

To help you better understand how this smart business news monitoring workflow runs from start to finish, we’ve created a short GIF demo. It shows each step in action — from fetching real-time search results with Deep SerpApi, formatting them with a Template block, analyzing the data using an LLM, and finally sending the insights via Discord webhook.

5. Success Stories & Performance Impact

Leading Financial Institution

“From Reactive to Proactive” — Real-Time News Monitoring with 95% Accuracy

A major financial institution faced challenges in monitoring fast-moving news cycles related to banking regulations, reputational risks, and macroeconomic events. Prior to deploying the system, their compliance and risk teams relied heavily on manual media tracking, which was time-consuming and often delayed critical responses.

After integrating the Dify + Scrapeless monitoring system:

- News detection latency was reduced by 80%, enabling near real-time awareness of regulatory or reputational risks.

- The accuracy of sentiment-based alerting models improved to 95%, thanks to high-quality structured SERP data feeding AI classifiers.

- Cross-departmental collaboration improved, as alerts were pushed directly into internal Slack channels and BI dashboards.

- Result: Risk mitigation windows were shortened from hours to minutes, reducing potential damage from negative press or misinformation.

Global Manufacturing Enterprise

“Global Eyes, Local Insights” — Multi-language Market Intelligence at Scale

This multinational manufacturing firm needed to monitor global news across diverse markets to inform its supply chain strategy, trade risk exposure, and competitor activity—especially across Europe, Southeast Asia, and Latin America.

With the integrated solution in place:

- Automated SERP-based monitoring covered 20+ languages and 100+ country-specific domains, reducing blind spots in non-English media.

- Alerts about policy shifts, environmental incidents, or labor disputes were surfaced up to 72 hours earlier than previous manual workflows.

- Internal dashboards consolidated insights across time zones and teams, allowing senior decision-makers to act faster on global disruptions.

- Result: Strategic responsiveness improved significantly, particularly in procurement and logistics planning.

🔧 Want to Build More Intelligent Workflows?

If you're looking to take your data monitoring system to the next level, don’t miss these in-depth guides:

📈 Build an Intelligent Trend Monitoring System with Make

Learn how to combine Scrapeless with Make to create automated trend alerts and real-time dashboards.

🌍 Build a Google Trends Monitor with Pipedream

Discover how to set up a scalable trend tracking system using Google Trends API and Pipedream workflows.

Explore these tutorials and start building smarter, faster, and more automated intelligence pipelines today!

6. FAQs & Best Practices

| Issue | Recommended Solution |
|---|---|
| No search results | Check your API token validity and permissions |
| Inaccurate search results | Refine keywords and exclude irrelevant search terms |
| AI analysis is not accurate | Improve the prompt to clarify the main focus of the analysis |
| API quota exceeded or errors | Monitor usage frequency and plan API calls accordingly |
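If you call the Scrapeless or Dify APIs from your own code, a common way to cope with temporary rate limits or transient errors is exponential backoff with jitter. This is a generic sketch, not a Scrapeless- or Dify-specific mechanism; `RetryableError` is a hypothetical exception your HTTP wrapper would raise for HTTP 429 or transient 5xx responses.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RetryableError(Exception):
    """Hypothetical error raised for 429 (rate limit) or transient 5xx responses."""


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying RetryableError with exponential backoff and jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except RetryableError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Delay doubles each attempt (1s, 2s, 4s, ...) up to max_delay;
            # random jitter keeps many clients from retrying in lockstep.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay * random.uniform(0.5, 1.0))
    raise AssertionError("unreachable")
```

Backoff only helps with short-lived limits or errors. If your quota is exhausted, retries will keep failing, so reduce how often the workflow runs or adjust your plan.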

7. Summary

This solution leverages the deep integration between the Dify intelligent workflow platform and Scrapeless Deep SerpApi to enable automated monitoring and intelligent analysis of enterprise-level business news. With this system, companies can stay informed of brand developments in real time, gain insights into industry trends, respond quickly to market changes, and empower decision-makers to strategically plan for the future.

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.