How to Build Intelligent Search Analytics with Scrapeless and Google Gemini

Advanced Data Extraction Specialist

In this tutorial, we'll build a powerful search analytics system by combining Scrapeless's web scraping capabilities with Google Gemini's AI analysis. You'll learn how to extract Google search data and generate actionable insights automatically.

Prerequisites

- Python 3.8+

- Scrapeless API Key

- Google Gemini API key

- Basic Python knowledge

Step 1: Setting Up Your Environment

1. Creating a Python Virtual Environment

Before installing packages, it's recommended to create a virtual environment to isolate your project dependencies:

On Windows:

# Create virtual environment

python -m venv scrapeless-gemini-env

# Activate the environment

scrapeless-gemini-env\Scripts\activateOn macOS:

# Create virtual environment

python3 -m venv scrapeless-gemini-env

# Activate the environment

source scrapeless-gemini-env/bin/activateOn Linux:

# Create virtual environment

python3 -m venv scrapeless-gemini-env

# Activate the environment

source scrapeless-gemini-env/bin/activateNote: You'll see (scrapeless-gemini-env) in your terminal prompt when the virtual environment is active.

2. Installing Required Packages

Once your virtual environment is activated, install the required packages:

pip install requests google-generativeai python-dotenv pandas3. Environment Configuration

Create a .env file in your project directory for your API keys:

SCRAPELESS_API_TOKEN=your_token_here

GEMINI_API_KEY=your_gemini_key_here4. Getting Your API Keys

For Gemini API Key: (AIzaSyBGhCVNdBsVVHlRNLEPEADGVQeKmDvDEfI)

Visit Google AI Studio to generate your free Gemini API key. Simply sign in with your Google account and create a new API key.

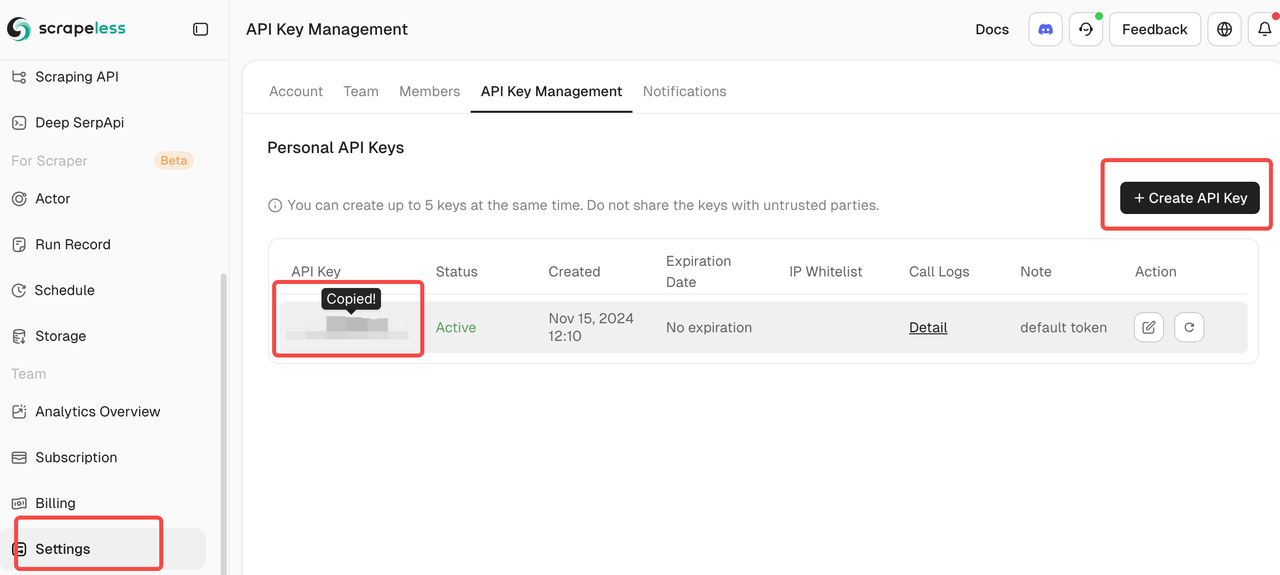

For Scrapeless API Token:

Sign up at Scrapeless to get your API token. The platform offers a generous free tier perfect for getting started with SERP data collection.

Step 2: Create the Scrapeless Client

Let's build a simple client to interact with Scrapeless's Google Search API:

import json

import requests

import os

from dotenv import load_dotenv

load_dotenv()

class ScrapelessClient:

def __init__(self):

self.token = os.getenv('SCRAPELESS_API_TOKEN')

self.host = "api.scrapeless.com"

self.url = f"https://{self.host}/api/v1/scraper/request"

self.headers = {"x-api-token": self.token}

def search_google(self, query, **kwargs):

"""Perform a Google search using Scrapeless"""

payload = {

"actor": "scraper.google.search",

"input": {

"q": query,

"gl": kwargs.get("gl", "us"),

"hl": kwargs.get("hl", "en"),

"google_domain": kwargs.get("google_domain", "google.com"),

"location": kwargs.get("location", ""),

"tbs": kwargs.get("tbs", ""),

"start": str(kwargs.get("start", 0)),

"num": str(kwargs.get("num", 10))

}

}

response = requests.post(

self.url,

headers=self.headers,

data=json.dumps(payload)

)

if response.status_code != 200:

print(f"Error: {response.status_code} - {response.text}")

return None

return response.json()Step 3: Integrate Google Gemini for Analysis

Now let's add AI-powered analysis to our search results:

import google.generativeai as genai

class SearchAnalyzer:

def __init__(self):

genai.configure(api_key=os.getenv('GEMINI_API_KEY'))

self.model = genai.GenerativeModel('gemini-1.5-flash')

self.scraper = ScrapelessClient()

def analyze_topic(self, topic):

"""Search and analyze a topic"""

# Step 1: Get search results

print(f"Searching for: {topic}")

search_results = self.scraper.search_google(topic, num=20)

if not search_results:

return None

# Step 2: Extract key information

extracted_data = self._extract_results(search_results)

# Step 3: Analyze with Gemini

prompt = f"""

Analyze these search results about "{topic}":

{json.dumps(extracted_data, indent=2)}

Provide:

1. Key themes and trends

2. Main sources of information

3. Notable insights

4. Recommended actions

Format your response as a clear, actionable report.

"""

response = self.model.generate_content(prompt)

return {

"topic": topic,

"search_data": extracted_data,

"analysis": response.text

}

def _extract_results(self, search_data):

"""Extract relevant data from search results"""

results = []

if "organic_results" in search_data:

for item in search_data["organic_results"][:10]:

results.append({

"title": item.get("title", ""),

"snippet": item.get("snippet", ""),

"link": item.get("link", ""),

"source": item.get("displayed_link", "")

})

return resultsStep 4: Build Practical Use Cases

Use Case 1: Competitor Analysis

Important: Before running the competitor analysis, make sure to replace the example company names with your actual company and competitors in the code below.

class CompetitorMonitor:

def __init__(self):

self.analyzer = SearchAnalyzer()

def analyze_competitors(self, company_name, competitors):

"""Analyze a company against its competitors"""

all_data = {}

# Search for each company

for comp in [company_name] + competitors:

print(f"\nAnalyzing: {comp}")

# Search for recent news and updates

news_query = f"{comp} latest news updates 2025"

data = self.analyzer.analyze_topic(news_query)

if data:

all_data[comp] = data

# Generate comparative analysis

comparative_prompt = f"""

Compare {company_name} with competitors based on this data:

{json.dumps(all_data, indent=2)}

Provide:

1. Competitive positioning

2. Unique strengths of each company

3. Market opportunities

4. Strategic recommendations for {company_name}

"""

response = self.analyzer.model.generate_content(comparative_prompt)

return {

"company": company_name,

"competitors": competitors,

"analysis": response.text,

"raw_data": all_data

}Use Case 2: Trend Monitoring

class TrendMonitor:

def __init__(self):

self.analyzer = SearchAnalyzer()

self.scraper = ScrapelessClient()

def monitor_trend(self, keyword, time_range="d"):

"""Monitor trends for a keyword"""

# Map time ranges to Google's tbs parameter

time_map = {

"h": "qdr:h", # Past hour

"d": "qdr:d", # Past day

"w": "qdr:w", # Past week

"m": "qdr:m" # Past month

}

# Search with time filter

results = self.scraper.search_google(

keyword,

tbs=time_map.get(time_range, "qdr:d"),

num=30

)

if not results:

return None

# Analyze trends

prompt = f"""

Analyze the trends for "{keyword}" based on recent search results:

{json.dumps(results.get("organic_results", [])[:15], indent=2)}

Identify:

1. Emerging patterns

2. Key developments

3. Sentiment (positive/negative/neutral)

4. Future predictions

5. Actionable insights

"""

response = self.analyzer.model.generate_content(prompt)

return {

"keyword": keyword,

"time_range": time_range,

"analysis": response.text,

"result_count": len(results.get("organic_results", []))

}Step 5: Create the Main Application

def main():

# Initialize components

competitor_monitor = CompetitorMonitor()

trend_monitor = TrendMonitor()

# Example 1: Competitor Analysis

# IMPORTANT: Replace these example companies with your actual company and competitors

print("=== COMPETITOR ANALYSIS ===")

analysis = competitor_monitor.analyze_competitors(

company_name="OpenAI", # Replace with your company name

competitors=["Anthropic", "Google AI", "Meta AI"] # Replace with your competitors

)

print("\nCompetitive Analysis Results:")

print(analysis["analysis"])

# Save results

with open("competitor_analysis.json", "w") as f:

json.dump(analysis, f, indent=2)

# Example 2: Trend Monitoring

# IMPORTANT: Replace the keyword with your industry-specific terms

print("\n\n=== TREND MONITORING ===")

trends = trend_monitor.monitor_trend(

keyword="artificial intelligence regulation", # Replace with your keywords

time_range="w" # Past week

)

print("\nTrend Analysis Results:")

print(trends["analysis"])

# Example 3: Multi-topic Analysis

# IMPORTANT: Replace these topics with your industry-specific topics

print("\n\n=== MULTI-TOPIC ANALYSIS ===")

topics = [

"generative AI business applications", # Replace with your topics

"AI cybersecurity threats",

"machine learning healthcare"

]

analyzer = SearchAnalyzer()

for topic in topics:

print(f"\nAnalyzing: {topic}")

result = analyzer.analyze_topic(topic)

if result:

# Save individual analysis

filename = f"{topic.replace(' ', '_')}_analysis.txt"

with open(filename, "w") as f:

f.write(result["analysis"])

print(f"Analysis saved to {filename}")

if __name__ == "__main__":

main()Step 6: Add Data Export Functionality

import pandas as pd

from datetime import datetime

class DataExporter:

@staticmethod

def export_to_csv(data, filename=None):

"""Export search results to CSV"""

if filename is None:

filename = f"search_results_{datetime.now().strftime('%Y%m%d_%H%M%S')}.csv"

# Flatten the data structure

rows = []

for item in data:

if isinstance(item, dict) and "search_data" in item:

for result in item["search_data"]:

rows.append({

"topic": item.get("topic", ""),

"title": result.get("title", ""),

"snippet": result.get("snippet", ""),

"link": result.get("link", ""),

"source": result.get("source", "")

})

df = pd.DataFrame(rows)

df.to_csv(filename, index=False)

print(f"Data exported to {filename}")

return filename

@staticmethod

def create_html_report(analysis_data, filename="report.html"):

"""Create an HTML report from analysis data"""

html = f"""

<!DOCTYPE html>

<html>

<head>

<title>Search Analytics Report</title>

<style>

body {{ font-family: Arial, sans-serif; margin: 40px; }}

.section {{ margin-bottom: 30px; padding: 20px;

background-color: #f5f5f5; border-radius: 8px; }}

h1 {{ color: #333; }}

h2 {{ color: #666; }}

pre {{ white-space: pre-wrap; word-wrap: break-word; }}

</style>

</head>

<body>

<h1>Search Analytics Report</h1>

<p>Generated: {datetime.now().strftime('%Y-%m-%d %H:%M:%S')}</p>

<div class="section">

<h2>Analysis Results</h2>

<pre>{analysis_data.get('analysis', 'No analysis available')}</pre>

</div>

<div class="section">

<h2>Data Summary</h2>

<p>Topic: {analysis_data.get('topic', 'N/A')}</p>

<p>Results analyzed: {len(analysis_data.get('search_data', []))}</p>

</div>

</body>

</html>

"""

with open(filename, 'w') as f:

f.write(html)

print(f"HTML report created: {filename}")Complete Working Code

Here's the complete, integrated code that you can run immediately:

import json

import requests

import os

import time

import pandas as pd

from datetime import datetime

from dotenv import load_dotenv

import google.generativeai as genai

# Load environment variables

load_dotenv()

class ScrapelessClient:

def __init__(self):

self.token = os.getenv('SCRAPELESS_API_TOKEN')

self.host = "api.scrapeless.com"

self.url = f"https://{self.host}/api/v1/scraper/request"

self.headers = {"x-api-token": self.token}

def search_google(self, query, **kwargs):

"""Perform a Google search using Scrapeless"""

payload = {

"actor": "scraper.google.search",

"input": {

"q": query,

"gl": kwargs.get("gl", "us"),

"hl": kwargs.get("hl", "en"),

"google_domain": kwargs.get("google_domain", "google.com"),

"location": kwargs.get("location", ""),

"tbs": kwargs.get("tbs", ""),

"start": str(kwargs.get("start", 0)),

"num": str(kwargs.get("num", 10))

}

}

response = requests.post(

self.url,

headers=self.headers,

data=json.dumps(payload)

)

if response.status_code != 200:

print(f"Error: {response.status_code} - {response.text}")

return None

return response.json()

class SearchAnalyzer:

def __init__(self):

genai.configure(api_key=os.getenv('GEMINI_API_KEY'))

self.model = genai.GenerativeModel('gemini-1.5-flash')

self.scraper = ScrapelessClient()

def analyze_topic(self, topic):

"""Search and analyze a topic"""

# Step 1: Get search results

print(f"Searching for: {topic}")

search_results = self.scraper.search_google(topic, num=20)

if not search_results:

return None

# Step 2: Extract key information

extracted_data = self._extract_results(search_results)

# Step 3: Analyze with Gemini

prompt = f"""

Analyze these search results about "{topic}":

{json.dumps(extracted_data, indent=2)}

Provide:

1. Key themes and trends

2. Main sources of information

3. Notable insights

4. Recommended actions

Format your response as a clear, actionable report.

"""

response = self.model.generate_content(prompt)

return {

"topic": topic,

"search_data": extracted_data,

"analysis": response.text

}

def _extract_results(self, search_data):

"""Extract relevant data from search results"""

results = []

if "organic_results" in search_data:

for item in search_data["organic_results"][:10]:

results.append({

"title": item.get("title", ""),

"snippet": item.get("snippet", ""),

"link": item.get("link", ""),

"source": item.get("displayed_link", "")

})

return results

class CompetitorMonitor:

def __init__(self):

self.analyzer = SearchAnalyzer()

def analyze_competitors(self, company_name, competitors):

"""Analyze a company against its competitors"""

all_data = {}

# Search for each company

for comp in [company_name] + competitors:

print(f"\nAnalyzing: {comp}")

# Search for recent news and updates

news_query = f"{comp} latest news updates 2025"

data = self.analyzer.analyze_topic(news_query)

if data:

all_data[comp] = data

# Add delay between requests

time.sleep(2)

# Generate comparative analysis

comparative_prompt = f"""

Compare {company_name} with competitors based on this data:

{json.dumps(all_data, indent=2)}

Provide:

1. Competitive positioning

2. Unique strengths of each company

3. Market opportunities

4. Strategic recommendations for {company_name}

"""

response = self.analyzer.model.generate_content(comparative_prompt)

return {

"company": company_name,

"competitors": competitors,

"analysis": response.text,

"raw_data": all_data

}

class TrendMonitor:

def __init__(self):

self.analyzer = SearchAnalyzer()

self.scraper = ScrapelessClient()

def monitor_trend(self, keyword, time_range="d"):

"""Monitor trends for a keyword"""

# Map time ranges to Google's tbs parameter

time_map = {

"h": "qdr:h", # Past hour

"d": "qdr:d", # Past day

"w": "qdr:w", # Past week

"m": "qdr:m" # Past month

}

# Search with time filter

results = self.scraper.search_google(

keyword,

tbs=time_map.get(time_range, "qdr:d"),

num=30

)

if not results:

return None

# Analyze trends

prompt = f"""

Analyze the trends for "{keyword}" based on recent search results:

{json.dumps(results.get("organic_results", [])[:15], indent=2)}

Identify:

1. Emerging patterns

2. Key developments

3. Sentiment (positive/negative/neutral)

4. Future predictions

5. Actionable insights

"""

response = self.analyzer.model.generate_content(prompt)

return {

"keyword": keyword,

"time_range": time_range,

"analysis": response.text,

"result_count": len(results.get("organic_results", []))

}

class DataExporter:

@staticmethod

def export_to_csv(data, filename=None):

"""Export search results to CSV"""

if filename is None:

filename = f"search_results_{datetime.now().strftime('%Y%m%d_%H%M%S')}.csv"

# Flatten the data structure

rows = []

for item in data:

if isinstance(item, dict) and "search_data" in item:

for result in item["search_data"]:

rows.append({

"topic": item.get("topic", ""),

"title": result.get("title", ""),

"snippet": result.get("snippet", ""),

"link": result.get("link", ""),

"source": result.get("source", "")

})

df = pd.DataFrame(rows)

df.to_csv(filename, index=False)

print(f"Data exported to {filename}")

return filename

@staticmethod

def create_html_report(analysis_data, filename="report.html"):

"""Create an HTML report from analysis data"""

html = f"""

<!DOCTYPE html>

<html>

<head>

<title>Search Analytics Report</title>

<style>

body {{ font-family: Arial, sans-serif; margin: 40px; }}

.section {{ margin-bottom: 30px; padding: 20px;

background-color: #f5f5f5; border-radius: 8px; }}

h1 {{ color: #333; }}

h2 {{ color: #666; }}

pre {{ white-space: pre-wrap; word-wrap: break-word; }}

</style>

</head>

<body>

<h1>Search Analytics Report</h1>

<p>Generated: {datetime.now().strftime('%Y-%m-%d %H:%M:%S')}</p>

<div class="section">

<h2>Analysis Results</h2>

<pre>{analysis_data.get('analysis', 'No analysis available')}</pre>

</div>

<div class="section">

<h2>Data Summary</h2>

<p>Topic: {analysis_data.get('topic', 'N/A')}</p>

<p>Results analyzed: {len(analysis_data.get('search_data', []))}</p>

</div>

</body>

</html>

"""

with open(filename, 'w') as f:

f.write(html)

print(f"HTML report created: {filename}")

def main():

# Initialize components

competitor_monitor = CompetitorMonitor()

trend_monitor = TrendMonitor()

exporter = DataExporter()

# Example 1: Competitor Analysis

print("=== COMPETITOR ANALYSIS ===")

analysis = competitor_monitor.analyze_competitors(

company_name="OpenAI",

competitors=["Anthropic", "Google AI", "Meta AI"]

)

print("\nCompetitive Analysis Results:")

print(analysis["analysis"])

# Save results

with open("competitor_analysis.json", "w") as f:

json.dump(analysis, f, indent=2)

# Example 2: Trend Monitoring

print("\n\n=== TREND MONITORING ===")

trends = trend_monitor.monitor_trend(

keyword="artificial intelligence regulation",

time_range="w" # Past week

)

print("\nTrend Analysis Results:")

print(trends["analysis"])

# Example 3: Multi-topic Analysis

print("\n\n=== MULTI-TOPIC ANALYSIS ===")

topics = [

"generative AI business applications",

"AI cybersecurity threats",

"machine learning healthcare"

]

analyzer = SearchAnalyzer()

all_results = []

for topic in topics:

print(f"\nAnalyzing: {topic}")

result = analyzer.analyze_topic(topic)

if result:

all_results.append(result)

# Save individual analysis

filename = f"{topic.replace(' ', '_')}_analysis.txt"

with open(filename, "w") as f:

f.write(result["analysis"])

print(f"Analysis saved to {filename}")

# Delay between requests

time.sleep(2)

# Export all results to CSV

if all_results:

csv_file = exporter.export_to_csv(all_results)

print(f"\nAll results exported to: {csv_file}")

# Create HTML report for the first result

if all_results[0]:

exporter.create_html_report(all_results[0])

if __name__ == "__main__":

main()Conclusion

Congratulations! You've successfully built a comprehensive search analytics system that harnesses the power of Scrapeless's enterprise-grade web scraping capabilities combined with Google Gemini's advanced AI analysis. This intelligent solution transforms raw search data into actionable business insights automatically.

What You've Accomplished

Through this tutorial, you've created a modular, production-ready system that includes:

- Automated Data Collection: Real-time Google search results via Scrapeless API

- AI-Powered Analysis: Intelligent insights generation using Google Gemini

- Competitor Intelligence: Comprehensive competitive landscape monitoring

- Trend Detection: Real-time market trend identification and analysis

- Multi-format Reporting: CSV exports and HTML reports for stakeholder sharing

- Scalable Architecture: Modular design for easy customization and extension

Key Benefits for Your Business

Strategic Decision Making: Transform search data into strategic insights that drive informed business decisions and competitive positioning.

Time Efficiency: Automate hours of manual research into minutes of automated analysis, freeing your team for higher-value strategic work.

Market Awareness: Stay ahead of industry trends, competitor moves, and emerging opportunities with real-time monitoring capabilities.

Cost-Effective Intelligence: Leverage enterprise-grade tools at a fraction of the cost of traditional market research solutions.

Extending Your System

The modular architecture makes it easy to extend functionality:

- Additional Data Sources: Integrate social media APIs, news feeds, or industry databases

- Advanced Analytics: Add sentiment analysis, entity recognition, or predictive modeling

- Visualization: Create interactive dashboards using tools like Streamlit or Dash

- Alerting: Implement real-time notifications for critical market changes

- Multi-language Support: Expand monitoring to global markets with localized search

Best Practices for Success

- Start Small: Begin with focused keywords and gradually expand your monitoring scope

- Iterate Prompts: Continuously refine your AI prompts based on output quality

- Validate Results: Cross-reference AI insights with manual verification initially

- Regular Updates: Keep your competitor lists and keywords current

- Stakeholder Feedback: Gather input from end-users to improve report relevance

Final Thoughts

This search analytics system represents a significant step toward data-driven business intelligence. By combining Scrapeless's reliable data collection with Gemini's analytical capabilities, you've created a powerful tool that can adapt to virtually any industry or use case.

The investment in building this system will pay dividends through improved market awareness, competitive intelligence, and strategic decision-making capabilities. As you continue to refine and expand the system, you'll discover new opportunities to leverage search data for business growth.

Remember that successful implementation depends not just on the technology, but on how well you integrate these insights into your business processes and decision-making workflows.

For additional resources, advanced features, and API documentation, visit Scrapeless Documentation.

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.